Introduction to GCP Cloud Run Container Services

As the digital landscape continues to evolve, businesses increasingly rely on cloud computing services to stay competitive. Google Cloud Platform (GCP) offers a broad suite of services designed to meet the diverse needs of modern enterprises. One service that has drawn significant attention is Google Cloud Run, a fully managed compute platform that lets developers deploy and run containerized applications without managing servers.

In this article, we explore Google Cloud Run’s architecture, features, benefits, and real-world applications. By the end, you should understand how Cloud Run helps developers build scalable, resilient, and efficient applications in the cloud.

Understanding Containerization and Its Significance

Before turning to Google Cloud Run, it is worth understanding containerization and its impact on modern software development practices.

Containers, at their core, are lightweight, standalone, and executable software packages engineered to encapsulate an application along with its dependencies. This encapsulation ensures that an application runs consistently across various environments, irrespective of differences in underlying infrastructure. Unlike traditional virtual machines, containers share the host operating system’s kernel, making them more resource-efficient, faster to deploy, and inherently portable.

At the forefront of the containerization movement stands Docker, a pioneering platform that has significantly shaped and popularized this technology. Docker provides a comprehensive set of tools and a standardized format for packaging applications into containers. This standardization has fostered a shift in the way software is developed, tested, and deployed, contributing to increased agility and scalability in the development lifecycle.

Containerization addresses software deployment challenges by promoting a modular and isolated approach. Each container houses a specific application and its dependencies, which minimizes conflicts between applications and streamlines deployment. This isolation can also improve security by limiting the reach of a compromised application. However, because containers share the host kernel, they provide weaker isolation than virtual machines.

The efficiency of containers lies in their ability to abstract away the underlying infrastructure details. Developers can focus on creating applications without worrying about the specifics of the target environment, whether that is a local development machine, a staging server, or production. This abstraction simplifies the deployment pipeline, eases collaboration between development and operations teams, and accelerates software delivery.

Containerization, exemplified by technologies like Docker, has revolutionized software development by introducing a standardized, portable, and efficient packaging mechanism. As we explore Google Cloud Run, this foundational understanding of containerization will prove instrumental in harnessing the full potential of containerized applications on a cloud-native platform.

Benefits of Containerization

- Portability: One of the primary advantages of containerization is the inherent portability it provides. Containers encapsulate an application and its dependencies, ensuring a consistent environment across different stages of the development lifecycle, from local development environments to production. This portability simplifies the deployment process, reducing the chances of discrepancies between various environments and making it easier to migrate applications across different infrastructure.

- Isolation: Containerization promotes a high degree of isolation between applications and their dependencies. Each container runs as an independent unit, encapsulating all the necessary components. This isolation prevents conflicts between different applications and their respective libraries or dependencies. As a result, developers can confidently deploy applications knowing that they won’t interfere with each other, enhancing overall system stability.

- Efficiency: Containers are more lightweight than traditional virtual machines, consuming fewer resources while maintaining efficient performance. This efficiency is achieved through containerization’s ability to share the host operating system’s kernel among containers, reducing the overhead associated with running multiple virtual machines. This streamlined resource consumption results in faster deployment times, improved scalability, and more efficient resource utilization across the entire infrastructure.

- Flexibility: Containerization provides developers with the flexibility to choose any programming language, framework, or tool for their applications. Containers abstract away the underlying infrastructure, allowing developers to focus on building and shipping applications without worrying about compatibility issues. This flexibility is crucial in modern development environments where diverse technologies are often employed, enabling teams to use the most suitable tools for their specific needs without being constrained by the underlying infrastructure.

Containerization offers a range of benefits, including enhanced portability, isolation, efficiency, and flexibility. These advantages contribute to more streamlined development and deployment processes, making containerization a popular choice for modern software development practices.

Google Cloud Run: A Brief Overview

Google Cloud Run, introduced in April 2019, brings the serverless model to containerized applications. The platform provides an environment for deploying and managing containers while handling the underlying infrastructure, so developers can concentrate on building scalable and efficient solutions.

Key Features of Google Cloud Run

Fully Managed: One of the standout features of Google Cloud Run is its status as a fully managed platform. It assumes responsibility for infrastructure provisioning, scaling, and maintenance, freeing developers from the intricacies of server management. This hands-off approach empowers developers to prioritize writing code without being encumbered by operational concerns.

Serverless: Cloud Run lets developers run applications without provisioning or managing servers. With the default request-based billing, users pay for the CPU and memory their container instances consume while processing requests, plus a small per-request fee. This aligns costs with actual usage. An instance-based billing option, which keeps CPU allocated for an instance’s whole lifetime, is also available.

Containerized Deployments: Google Cloud Run runs container images in standard formats (Docker or OCI), such as images built with Docker. Because the container packages its own language runtime, frameworks, and libraries, developers are not limited to a fixed set of supported languages. Containers also keep deployments consistent across environments.

Auto-scaling: Cloud Run automatically adjusts the number of container instances based on incoming request traffic. It scales up during spikes and down to zero instances when there is no traffic, unless a minimum number of instances is configured. This keeps performance steady while avoiding payment for idle capacity.

Global Reach: Cloud Run services are regional. However, the same service can be deployed to several regions and placed behind a global external load balancer, so users are served from a nearby region. This reduces latency and improves the user experience, which matters for applications serving users around the world.

Getting Started with Google Cloud Run

To start using Google Cloud Run, developers follow a few steps to set up and deploy their applications.

Prerequisites:

Before using Cloud Run, developers need three things in place:

- A Google Cloud Platform (GCP) account with a project that has billing enabled.
- The Google Cloud SDK, which provides the gcloud command-line tool.
- The Cloud Run API enabled for that project.

These steps establish the foundation required for deploying applications on Cloud Run.

Containerizing the Application:

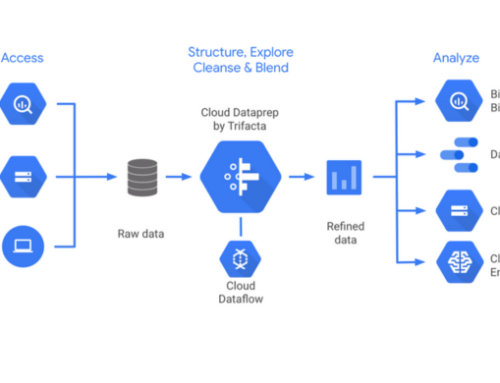

To deploy an application on Cloud Run, developers package it as a container image, typically with Docker. The Dockerfile describes the application’s dependencies and runtime environment. The container must listen for HTTP requests on the port given by the PORT environment variable, which defaults to 8080. Once the image is built, developers push it to a registry such as Artifact Registry (or the older Google Container Registry), from which Cloud Run can deploy it.
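The container contract described above can be illustrated with a minimal Python web service. This is a sketch that uses only the standard library. The handler name and greeting are illustrative, and a production service would more likely use a web framework behind a dedicated server. The important details are that the server reads its port from the PORT environment variable and binds to all interfaces. It also uses a threading server, because Cloud Run can send several requests to one instance at the same time.

```python
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text greeting."""

    def do_GET(self):
        body = b"Hello from Cloud Run!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def get_port(default: int = 8080) -> int:
    # Cloud Run tells the container which port to use via the PORT variable.
    return int(os.environ.get("PORT", default))


def main() -> None:
    # Bind to 0.0.0.0 (all interfaces), not localhost, so traffic routed
    # into the container reaches the server. One thread per request lets
    # a single instance serve concurrent requests.
    server = ThreadingHTTPServer(("0.0.0.0", get_port()), HelloHandler)
    server.serve_forever()
```

Calling main() from the container’s entrypoint starts the server. Running it locally and opening http://localhost:8080 is a quick check before building the image.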

Deploying to Cloud Run:

Deploying to Cloud Run is straightforward. Developers can use either the gcloud command-line tool (the gcloud run deploy command) or the Cloud Console to deploy a container image. Cloud Run then assigns the service a unique HTTPS URL. At deployment time, developers choose whether that URL accepts public, unauthenticated requests or only authenticated ones.
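The command-line deployment can be scripted. The sketch below is a small Python helper that assembles a basic gcloud run deploy invocation and runs it with subprocess. The service name, image path, and region in the usage line are placeholders; the image path follows Artifact Registry’s REGION-docker.pkg.dev/PROJECT/REPOSITORY/IMAGE form. Only the --image, --region, and --allow-unauthenticated / --no-allow-unauthenticated flags are used. Actually running it requires an installed and authenticated gcloud CLI.

```python
import shlex
import subprocess


def build_deploy_command(service, image, region, allow_unauthenticated=False):
    """Assemble the argument list for a basic `gcloud run deploy` call."""
    return [
        "gcloud", "run", "deploy", service,
        f"--image={image}",
        f"--region={region}",
        # State the access policy explicitly instead of relying on a prompt.
        "--allow-unauthenticated" if allow_unauthenticated
        else "--no-allow-unauthenticated",
    ]


def deploy(service, image, region, allow_unauthenticated=False):
    """Run the deployment; needs an installed and authenticated gcloud CLI."""
    cmd = build_deploy_command(service, image, region, allow_unauthenticated)
    print("Running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

A typical call might be deploy("hello", "us-central1-docker.pkg.dev/my-project/my-repo/hello:v1", "us-central1", allow_unauthenticated=True). Keeping command construction separate from execution makes the arguments easy to review and test.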

Testing and Monitoring:

After deployment, developers can test their applications by sending requests to the assigned URL to confirm they behave as expected. Cloud Run also integrates with Google Cloud’s operations tools:

- Anything a container writes to stdout and stderr is collected in Cloud Logging.
- Request and instance metrics are available in Cloud Monitoring.

Developers can use these tools to monitor performance, diagnose issues, and tune their applications.
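Because container output goes to Cloud Logging, a common practice is to write one JSON object per line. Cloud Logging can then parse each line into a structured entry, using the severity and message fields as the entry’s level and text. The helper below is a minimal sketch of that idea. The function name and the extra fields in the example are illustrative, not part of any Google library.

```python
import json
import sys


def log(message, severity="INFO", **fields):
    """Write one structured log entry as a single JSON line on stdout.

    `severity` and `message` are the fields Cloud Logging interprets;
    any extra keyword arguments become additional structured fields.
    """
    entry = {"severity": severity, "message": message, **fields}
    line = json.dumps(entry)
    print(line, file=sys.stdout, flush=True)  # flush so lines are not lost
    return line
```

For example, log("cache miss", severity="WARNING", key="user:42") produces an entry that can be filtered by severity or by the key field in the Logs Explorer.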

In essence, getting started with Google Cloud Run means setting up the prerequisites, then deploying, testing, and monitoring the application.

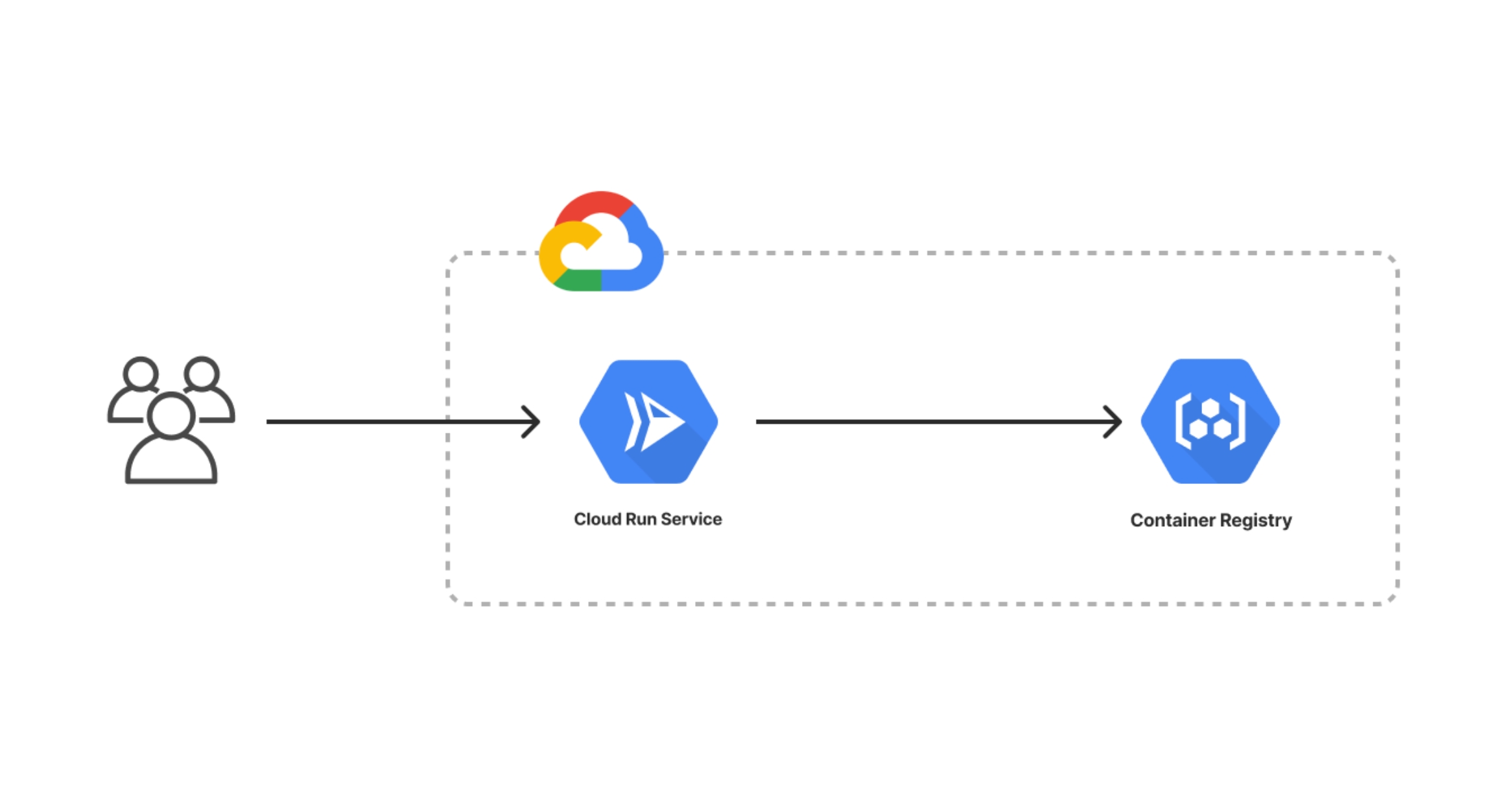

Architectural Deep Dive: Understanding Google Cloud Run

Google Cloud Run’s architecture provides a comprehensive framework for deploying and managing containerized applications, emphasizing scalability, efficiency, and simplicity. Let’s delve into the key components of Cloud Run’s architecture:

1. Container Instances:

At the heart of Google Cloud Run are container instances, the units that run an application’s code. They are designed to be stateless and ephemeral, which lets Cloud Run start and stop them quickly as demand changes. Each instance belongs to a single revision of one service. A single instance can handle several requests concurrently, up to 80 by default; this limit is configurable per service.
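Because instances are ephemeral, application code should expect to be stopped. According to Cloud Run’s container contract, the platform sends SIGTERM before shutting an instance down and SIGKILL about 10 seconds later. The Python sketch below records the signal in a flag so the rest of the program can finish quickly. The drain helper and its buffer are hypothetical stand-ins for whatever in-memory work an application would need to hand off.

```python
import signal
import threading

# Set once the platform asks this instance to stop.
shutting_down = threading.Event()


def handle_sigterm(signum, frame):
    # Only record the request here; do the real cleanup outside the handler.
    shutting_down.set()


def install_sigterm_handler():
    signal.signal(signal.SIGTERM, handle_sigterm)


def drain(buffer, flush):
    """Hand every buffered item to `flush`, oldest first, emptying the buffer.

    `buffer` and `flush` are hypothetical, e.g. a batch of metrics waiting
    to be sent to an external service before the instance disappears.
    """
    while buffer:
        flush(buffer.pop(0))
```

A worker loop or background thread can check shutting_down.is_set() and call drain() before exiting. The roughly 10-second window is short, so the cleanup should be small.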

2. Deployment:

Developers use the gcloud command-line tool or the Cloud Console to deploy containerized applications to Cloud Run as services. Each service automatically receives a unique URL endpoint. Each deployment creates a new revision of the service, which makes it possible to route traffic between versions.

3. Invocations and Scaling:

Cloud Run’s automatic scaling is a standout feature. As requests arrive at the service URL, Cloud Run adds or removes container instances to handle the traffic. It bases this on factors such as how many requests each instance is already handling and its CPU utilization. This keeps resource utilization efficient and response times steady.
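A back-of-the-envelope way to reason about how many instances a workload needs is Little’s law: the average number of requests in flight equals the arrival rate times the average latency. Dividing that by the number of requests an instance should hold at once gives a rough instance count. This is not Cloud Run’s actual autoscaling algorithm. The default of 80 reflects Cloud Run’s default maximum concurrency, while the 0.6 utilization target is an assumption chosen only to leave headroom.

```python
import math


def estimate_instances(requests_per_second, avg_latency_s,
                       max_concurrency=80, target_utilization=0.6):
    """Rough number of instances needed for a steady request rate.

    Little's law: requests in flight = arrival rate x average latency.
    Each instance accepts up to `max_concurrency` simultaneous requests;
    `target_utilization` is an assumed headroom factor, not a Cloud Run
    setting.
    """
    if requests_per_second < 0 or avg_latency_s < 0:
        raise ValueError("rate and latency must be non-negative")
    in_flight = requests_per_second * avg_latency_s
    per_instance = max_concurrency * target_utilization
    return math.ceil(in_flight / per_instance)
```

For example, 200 requests per second at 250 ms average latency means about 50 requests in flight, which this estimate spreads over 2 instances. With no traffic the estimate drops to 0, mirroring scale-to-zero. With max_concurrency=1, every in-flight request needs its own instance.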

4. Request Processing and Billing:

Cloud Run follows a pay-as-you-go model. Under the default request-based billing, users are charged for the number of requests and for the CPU and memory consumed while requests are processed. Because no capacity has to be provisioned in advance, costs track actual usage, making Cloud Run cost-effective for variable workloads.
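The pay-as-you-go model can be turned into a rough monthly estimate. The sketch below multiplies billable time by the configured vCPUs and memory, then adds a per-request charge. The unit prices are deliberately left as parameters: any numbers you plug in are placeholders, not Google’s published rates, and the free tier is ignored. The calculation also treats every request as if it ran alone on an instance. When an instance serves requests concurrently, their billable time overlaps, so the result is an upper bound.

```python
from dataclasses import dataclass


@dataclass
class UnitPrices:
    # Placeholder values only; look up the current rates for your region.
    per_vcpu_second: float
    per_gib_second: float
    per_million_requests: float


def estimate_request_billed_cost(requests, avg_billable_s, vcpus,
                                 memory_gib, prices):
    """Upper-bound cost estimate under request-based billing.

    Assumes each request is billed for `avg_billable_s` seconds on an
    instance with `vcpus` CPUs and `memory_gib` GiB of memory, with no
    overlap between concurrent requests and no free tier.
    """
    billable_seconds = requests * avg_billable_s
    cpu_cost = billable_seconds * vcpus * prices.per_vcpu_second
    memory_cost = billable_seconds * memory_gib * prices.per_gib_second
    request_cost = requests / 1_000_000 * prices.per_million_requests
    return cpu_cost + memory_cost + request_cost
```

Comparing two configurations, for example 1 vCPU with 0.5 GiB versus 2 vCPUs with 1 GiB at a lower latency, with the same placeholder prices shows how resource settings and latency trade off.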

5. Container Image Building:

Cloud Run deploys container images stored in a registry, most commonly Artifact Registry (Google Container Registry was the earlier option). When developers deploy a prebuilt image, Cloud Run simply pulls and runs it. When they deploy directly from source code with gcloud run deploy --source, Google Cloud uses Cloud Build to build the image, stores the image in Artifact Registry, and then deploys it. Cloud Build uses the project’s Dockerfile if one is present and buildpacks otherwise. This streamlines the developer workflow and keeps the deployment pipeline consistent.

Google Cloud Run’s architecture provides a robust foundation for deploying and managing containerized applications with an emphasis on scalability, cost-effectiveness, and developer-friendly workflows. The combination of container instances, dynamic scaling, pay-as-you-go pricing, and seamless container image building makes Cloud Run a versatile and powerful solution for a wide range of applications and use cases.

Real-world Applications and Use Cases of Google Cloud Run

Google Cloud Run has witnessed widespread adoption across diverse industries due to its versatility and scalability. This serverless compute platform is particularly well-suited for various real-world scenarios, showcasing its efficiency and applicability.

Microservices Architecture:

One of the key strengths of Cloud Run lies in its compatibility with microservices-based architectures. Developers can deploy individual microservices as containerized applications, allowing for independent scaling, updates, and maintenance. This flexibility is crucial in modern software development, enabling teams to manage and scale components independently, leading to more efficient and agile development processes.

Web Applications:

Cloud Run emerges as an excellent choice for hosting web applications, especially those characterized by varying traffic patterns. The platform’s auto-scaling feature ensures that resources are allocated dynamically based on user demand. This adaptability is particularly beneficial for web applications that experience fluctuating levels of user engagement, providing a cost-effective and responsive hosting solution.

RESTful APIs:

Developers can leverage Cloud Run for deploying RESTful APIs, taking advantage of its rapid scaling capabilities in response to incoming requests. This feature is especially valuable for APIs with unpredictable usage patterns. Cloud Run’s ability to efficiently scale up or down ensures that APIs remain responsive even during spikes in demand, contributing to a seamless user experience.
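As an illustration of the kind of RESTful API that fits Cloud Run, here is a tiny read-only JSON API written as a standard WSGI application using only the standard library. The /items routes and the in-memory catalog are hypothetical. A real service would keep its data in a database, because instance memory is neither shared between instances nor persistent. Serving the app with a WSGI server such as Gunicorn bound to the PORT variable is a common choice, but that is an assumption, not a Cloud Run requirement.

```python
import json

# Hypothetical read-only catalog; real data belongs in an external store.
ITEMS = {
    "1": {"id": "1", "name": "widget"},
    "2": {"id": "2", "name": "gadget"},
}


def app(environ, start_response):
    """WSGI app: GET /items lists items, GET /items/<id> returns one."""
    method = environ.get("REQUEST_METHOD", "GET")
    parts = [p for p in environ.get("PATH_INFO", "/").split("/") if p]
    status, payload = "404 Not Found", {"error": "not found"}
    if method != "GET":
        status, payload = "405 Method Not Allowed", {"error": "method not allowed"}
    elif parts == ["items"]:
        status, payload = "200 OK", list(ITEMS.values())
    elif len(parts) == 2 and parts[0] == "items" and parts[1] in ITEMS:
        status, payload = "200 OK", ITEMS[parts[1]]
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]
```

Because each request is handled independently and nothing is written to instance memory, Cloud Run can add or remove instances of this API freely as traffic changes.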

Background Jobs and Batch Processing:

Cloud Run also goes beyond serving HTTP requests. Cloud Run jobs run containers to completion without an HTTP endpoint. A job can split its work across many parallel tasks, which suits batch processing such as transforming large datasets. Jobs can be executed on demand or on a schedule, for example when triggered by Cloud Scheduler. This makes Cloud Run a practical, scalable environment for background jobs and periodic work as well as request-driven services.
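When a Cloud Run job runs several tasks in parallel, each task needs to know which part of the work is its own. Cloud Run jobs provide the CLOUD_RUN_TASK_INDEX (0-based) and CLOUD_RUN_TASK_COUNT environment variables for this. The sketch below uses them to split a list of work items round-robin. The item names are hypothetical, and the defaults let the same code run locally as a single task.

```python
import os


def task_shard(items, task_index=None, task_count=None):
    """Return the slice of `items` this job task should process.

    Reads CLOUD_RUN_TASK_INDEX and CLOUD_RUN_TASK_COUNT when the
    arguments are omitted; outside a job it behaves as task 0 of 1.
    """
    if task_index is None:
        task_index = int(os.environ.get("CLOUD_RUN_TASK_INDEX", "0"))
    if task_count is None:
        task_count = int(os.environ.get("CLOUD_RUN_TASK_COUNT", "1"))
    if not 0 <= task_index < task_count:
        raise ValueError("task_index must be in [0, task_count)")
    # Round-robin: task i takes items i, i + count, i + 2 * count, ...
    return items[task_index::task_count]
```

With 7 input files and 3 tasks, task 0 processes files 0, 3, and 6; task 1 processes 1 and 4; and task 2 processes 2 and 5. Round-robin keeps the shards within one item of each other in size.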

The real-world applications and use cases of Google Cloud Run underscore its suitability for a variety of scenarios, ranging from microservices architecture to web applications, RESTful APIs, and background jobs. Its flexibility, scalability, and serverless nature make Cloud Run a valuable tool for developers and businesses seeking efficient and adaptable solutions in the dynamic landscape of cloud computing.

Advantages of Google Cloud Run

Simplified Operations:

Google Cloud Run streamlines operations for developers by abstracting away much of the operational complexity associated with deploying and managing containerized applications. This abstraction allows developers to focus primarily on writing code and building features without being burdened by the intricacies of infrastructure management. This simplicity enhances productivity and accelerates the development process.

Cost-effectiveness:

One of the key advantages of Google Cloud Run is its cost-effectiveness. With the default request-based billing, users are billed only for the compute resources consumed while requests are being processed, plus a per-request fee. This removes the need to provision capacity continuously, and because services can scale to zero, users do not pay for idle instances during periods of low traffic.

Auto-scaling:

Cloud Run’s auto-scaling capabilities contribute to its efficiency in handling varying levels of traffic. The platform dynamically adjusts the number of container instances based on demand. This ensures optimal performance even during spikes in traffic, allowing applications to scale seamlessly. Auto-scaling not only enhances performance but also optimizes resource utilization, making it a valuable feature for applications with unpredictable workloads.

Global Reach:

Because the same service can be deployed to multiple regions, Google Cloud Run lets developers run applications closer to their users. This reduces latency and improves the overall user experience. It makes Cloud Run a good fit for organizations with globally distributed user bases.

The advantages of Google Cloud Run extend from simplified operations and cost-effectiveness to auto-scaling capabilities and a global deployment reach. These features collectively make Cloud Run an appealing platform for developers and organizations seeking a streamlined, efficient, and globally accessible solution for deploying and managing containerized applications.

Challenges and Considerations in Google Cloud Run

Google Cloud Run presents a compelling solution for deploying applications in a serverless environment, but users need to be cognizant of certain challenges and considerations inherent in this platform.

1. Cold Starts:

One notable challenge with Google Cloud Run is the “cold start.” This is the extra latency of a request that has to wait for a new container instance to start, for example after the service has scaled to zero or during a sudden traffic spike. The delay depends on image size and on how much work the application does at startup. It matters most for applications that need consistently fast responses. Developers can reduce its impact in several ways:

- Keep container images small.
- Do less work at startup.
- Configure a minimum number of instances so that some stay warm, at extra cost.
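A related practice is to create expensive objects, such as database connection pools or loaded models, once per instance and reuse them across requests rather than rebuilding them on every call. The Python sketch below caches a stand-in “client” with functools.lru_cache. Only the first request on a new instance pays the setup cost; later requests on the same instance reuse it. The sleep is a placeholder for real initialization, and the names are hypothetical.

```python
import functools
import time


@functools.lru_cache(maxsize=None)
def get_expensive_client():
    """Create a costly resource once per instance and reuse it afterwards."""
    time.sleep(0.1)  # placeholder for slow setup, e.g. opening connections
    return {"created_at": time.monotonic()}


def handle_request(payload):
    # Every request on this instance shares the same cached client.
    client = get_expensive_client()
    return {"echo": payload, "client_created_at": client["created_at"]}
```

Lazy creation keeps startup fast for objects that are not always needed. Objects every request needs can instead be created eagerly at startup, so that the cost falls before the instance starts receiving traffic.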

2. Statelessness:

Cloud Run expects a stateless application design, in line with modern practices for scalable and resilient architectures. Because instances can be started and stopped at any time, data kept in memory or written to the instance’s local filesystem (which is held in memory) does not persist. This may require developers to refactor stateful components so that state lives in external services such as Cloud SQL, Firestore, Memorystore, or Cloud Storage. Statelessness is what allows the platform to scale instances freely and keeps deployments robust and reliable.

3. Maximum Request Timeout:

A key consideration in Cloud Run is the request timeout. By default, a request to a Cloud Run service times out after 5 minutes, and the timeout can be raised to a maximum of 60 minutes. Work that may take longer should be broken into smaller units that each fit within the limit, or moved to Cloud Run jobs, which are designed for run-to-completion tasks.
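One way to break up long work is to give each request a time budget below the configured timeout. The request processes items until the budget is used up, then returns the remainder so it can be scheduled again, for example by re-enqueuing it. The sketch below shows that pattern. The budget value, the handle callback, and how the remainder is rescheduled are assumptions left to the application. The injectable clock exists only to make the logic easy to test.

```python
import time


def process_within_budget(items, handle, budget_s, clock=time.monotonic):
    """Process items in order until `budget_s` seconds have elapsed.

    Returns the unprocessed items so the caller can schedule a follow-up
    request or job instead of letting one request hit the timeout.
    """
    deadline = clock() + budget_s
    for i, item in enumerate(items):
        if clock() >= deadline:
            return items[i:]  # out of time: hand back what is left
        handle(item)
    return []  # everything was processed
```

Checking the deadline before each item, rather than after, means the function never starts an item once the budget is spent. The budget should still leave room for the slowest single item to finish before the hard timeout.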

4. Resource Limits:

Each container instance in Cloud Run runs with configurable CPU and memory limits, up to a fixed maximum per instance. Memory use includes files written to the in-memory filesystem, and an instance that exceeds its memory limit is shut down. Developers therefore need to size these limits, and the number of concurrent requests per instance, to match their application’s actual needs. Monitoring usage and adjusting these settings over time helps keep performance steady.
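Memory limits and per-instance concurrency interact: every extra concurrent request needs its own share of memory. The sketch below estimates how many concurrent requests fit in one instance from three measurements you would collect yourself, for example in a load test: the memory limit, the idle process’s baseline usage, and the extra memory per in-flight request. The 0.8 safety margin is an arbitrary assumption that leaves headroom.

```python
def max_safe_concurrency(memory_limit_mib, baseline_mib, per_request_mib,
                         safety_margin=0.8):
    """Estimate how many concurrent requests fit in one instance's memory.

    baseline_mib: memory the idle process uses (runtime, libraries, caches).
    per_request_mib: extra memory one in-flight request needs.
    safety_margin: fraction of the limit you are willing to use (assumed).
    """
    if per_request_mib <= 0:
        raise ValueError("per_request_mib must be positive")
    usable = memory_limit_mib * safety_margin - baseline_mib
    if usable <= 0:
        return 0  # the idle process alone leaves no headroom
    return int(usable // per_request_mib)
```

For example, a 512 MiB limit, a 120 MiB baseline, and 20 MiB per request give 14 concurrent requests. A result like this can guide the values passed to the --memory and --concurrency flags of gcloud run deploy.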

While Google Cloud Run offers a powerful and flexible serverless environment, understanding and addressing these challenges is crucial for successfully leveraging its capabilities. By proactively considering and mitigating these factors, developers can optimize their applications for a seamless and efficient deployment on Google Cloud Run.

Conclusion

In the ever-evolving landscape of cloud computing, Google Cloud Run stands out as a powerful and user-friendly platform for deploying containerized applications. Its serverless nature, auto-scaling capabilities, and seamless integration with other GCP services make it an attractive choice for developers looking to build scalable and efficient solutions.

As we celebrate the one-year anniversary of Google Cloud Run, it’s clear that the platform has made significant strides in empowering developers and businesses to embrace containerization without the complexity of managing infrastructure. Whether you’re building microservices, web applications, RESTful APIs, or handling background jobs, Cloud Run offers a versatile and cost-effective solution for a wide range of use cases.

Cloud Run supports any stateless HTTP containerized application. It is designed for web services, APIs, and microservices. The container should be stateless, as instances can be started and stopped at any time.

Billing for Cloud Run is based on the number of requests and the compute resources (CPU and memory) used. With the default request-based billing, you pay only for the resources your container consumes while it is processing requests.

Cloud Run services are optimized for stateless containers that respond to HTTP requests. For background or batch work that does not serve requests, Cloud Run jobs run containers to completion instead.

Cloud Run automatically scales the number of container instances up or down based on incoming request traffic. This ensures that your application can handle varying loads efficiently without manual intervention.

A single Google Cloud project can host many Cloud Run services, each deployed separately. Each service can be scaled and managed independently, which supports a modular and scalable approach to building applications.