Introduction to AWS Serverless API Development

Serverless architecture is revolutionizing the way we build and deploy applications, offering a paradigm shift from traditional server-based models. In this dynamic landscape, AWS Serverless API development emerges as a key player, leveraging the advantages of serverless computing for modern application needs.

Overview of Serverless Architecture

Serverless architecture allows developers to build and run applications without managing the underlying server infrastructure. Its best-known form is Function as a Service (FaaS), in which code is deployed as individual functions. In the traditional model, developers had to provision and scale servers to handle varying workloads. With serverless, the cloud provider manages the server infrastructure, and developers focus on writing code, typically in the form of functions.

The benefits of serverless architecture include:

- Cost-Effectiveness: Serverless computing follows a pay-as-you-go model, where you pay only for the compute resources actually used while your functions run. This removes the cost of idle servers, which can yield significant savings, particularly for workloads with variable or intermittent traffic.

- Scalability: Serverless applications scale automatically in response to demand. Each concurrent request runs in its own execution environment, so the platform can handle many simultaneous executions, up to the account's concurrency quota, without manual capacity planning. This helps maintain performance during traffic spikes.

- Ease of Development: Developers can focus on writing code and defining functions without worrying about server provisioning, maintenance, or scaling. This shortens the development cycle, enabling faster time-to-market for applications.

- Reduced Operational Overhead: Serverless architectures abstract away much of the operational overhead associated with managing servers. This includes tasks like server maintenance, security patching, and infrastructure scaling, freeing up resources for innovation.

Importance of Serverless API in Modern Applications

Serverless APIs play a crucial role in shaping modern applications, offering several advantages that align with the needs of today’s development landscape.

- Scalability: Serverless APIs scale automatically to handle varying levels of traffic, helping keep the user experience consistent as the application’s popularity or demand fluctuates.

- Cost-Effectiveness: By eliminating the need to maintain and scale servers continuously, serverless APIs help organizations optimize costs. Developers only pay for the actual compute resources consumed during API executions.

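- Rapid Deployment: The serverless model allows developers to deploy changes quickly, promoting agility in the development process. Updates to APIs can typically be rolled out without downtime, for example by shifting traffic between Lambda function versions through aliases or by deploying to a new API Gateway stage.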

- Flexibility and Agility: Serverless APIs provide the flexibility to choose the programming languages and frameworks that best suit the application’s requirements. This agility allows developers to experiment with different technologies and iterate on solutions rapidly.

In summary, AWS Serverless API development introduces a transformative approach to building applications, focusing on efficiency, scalability, and flexibility.

Key Components of Serverless API

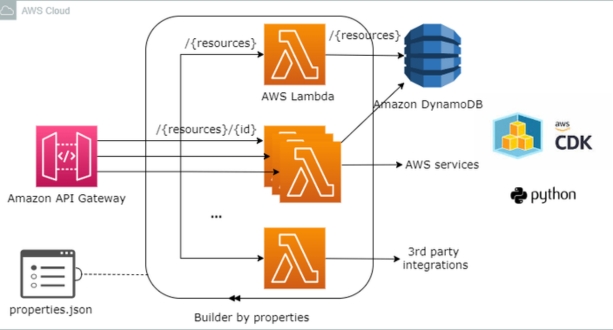

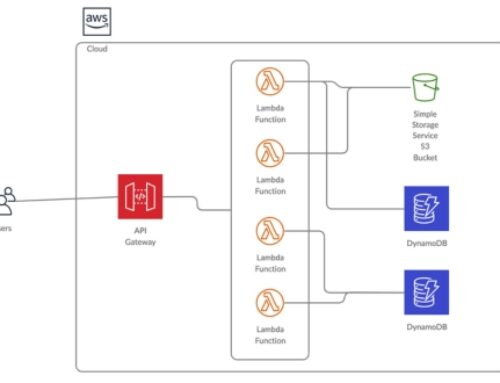

Building a robust Serverless API on AWS involves understanding and leveraging key components that seamlessly work together to deliver scalable, cost-effective, and high-performance solutions.

AWS Lambda Functions

AWS Lambda is at the core of serverless computing on AWS, allowing developers to run code without provisioning or managing servers. In Serverless API development, Lambda functions handle specific tasks and business logic.

Lambda functions are designed to be stateless and event-driven: they execute in response to triggers such as HTTP requests, changes to data, or other custom events. Developers can write code in supported programming languages (such as Python, Node.js, or Java), and Lambda automatically scales execution in response to the incoming workload. This flexibility and scalability make Lambda functions well suited to the variable nature of API traffic.
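As a minimal sketch of this event-driven model, the Python handler below assumes it sits behind an API Gateway REST API with Lambda proxy integration: API Gateway passes the HTTP request in as the `event` dict, and the returned dict becomes the HTTP response. The `name` query parameter and the greeting are illustrative only.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda calls once per event (here, one HTTP request)."""
    # With a proxy integration, query parameters arrive under this key;
    # API Gateway sets it to None when the request has none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")

    # Everything the function needs comes from the event, not from
    # state kept between calls.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the function keeps no state between calls, Lambda can run as many copies in parallel as traffic requires.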

Amazon API Gateway

Amazon API Gateway complements Lambda functions by providing a fully managed service for creating, publishing, maintaining, monitoring, and securing APIs at any scale. It acts as the entry point for clients, managing the traffic and ensuring seamless communication between users and backend services.

API Gateway simplifies the process of creating and maintaining APIs, offering features like:

- Endpoint Configuration: Developers can define API endpoints and their corresponding HTTP methods, mapping them to specific Lambda functions or other backend services.

- Authorization and Authentication: API Gateway provides fine-grained control over who can call the API through mechanisms such as IAM authorization, Amazon Cognito user pool authorizers, and Lambda authorizers. This ensures that only authenticated users or systems can invoke protected endpoints.

- Request and Response Transformation: API Gateway supports the transformation of requests and responses, enabling data manipulation to meet the requirements of both clients and backend services.

- Rate Limiting and Throttling: To control API usage and prevent abuse, API Gateway provides mechanisms for rate limiting and throttling, ensuring fair usage of resources.
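From the function’s side, endpoint configuration surfaces as fields in the proxy-integration event. The sketch below assumes a REST API (payload format 1.0, which carries `httpMethod` and `resource`) that maps the hypothetical routes `GET /users/{id}` and `POST /users` to a single function; giving each method its own function is an equally valid design.

```python
import json


def get_user(event):
    # The {id} placeholder in the resource path arrives in pathParameters.
    user_id = event["pathParameters"]["id"]
    return 200, {"id": user_id}


def create_user(event):
    # API Gateway passes the request body through as a string.
    payload = json.loads(event.get("body") or "{}")
    return 201, {"created": payload}


# Hypothetical route table keyed by (HTTP method, API Gateway resource).
ROUTES = {
    ("GET", "/users/{id}"): get_user,
    ("POST", "/users"): create_user,
}


def lambda_handler(event, context):
    handler = ROUTES.get((event.get("httpMethod"), event.get("resource")))
    if handler is None:
        status, body = 404, {"error": "route not found"}
    else:
        status, body = handler(event)
    return {"statusCode": status, "body": json.dumps(body)}
```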

Amazon DynamoDB

DynamoDB is a serverless NoSQL key-value and document database that integrates naturally with Serverless API development. It offers low-latency, scalable storage, making it a common choice for serverless architectures.

- Scalable Storage: In on-demand capacity mode, DynamoDB adjusts throughput to the workload automatically; in provisioned mode, auto scaling can adjust capacity within limits you set. Storage grows as needed without manual intervention.

- Event-Driven Triggers: Developers can configure DynamoDB Streams to capture changes to the database and trigger Lambda functions, allowing for real-time processing of data changes.

- Fully Managed: DynamoDB is fully managed by AWS, meaning developers do not need to worry about provisioning or managing servers. This aligns with the serverless philosophy of reducing operational overhead.
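A minimal stream consumer, assuming the table’s stream is configured as an event source for this function. Stream records arrive in batches under `Records`, and key attributes use DynamoDB’s typed JSON (for example `{"S": "u1"}`); grouping the changed keys by event type stands in for real processing.

```python
def lambda_handler(event, context):
    """Summarize a batch of DynamoDB Streams records delivered to Lambda."""
    summary = {"INSERT": [], "MODIFY": [], "REMOVE": []}
    for record in event.get("Records", []):
        action = record["eventName"]          # "INSERT", "MODIFY" or "REMOVE"
        keys = record["dynamodb"]["Keys"]     # e.g. {"userId": {"S": "u1"}}
        # Key attributes are always scalar (S, N or B), so each value is a
        # single-entry dict; unwrap it to the raw value.
        plain_keys = {name: next(iter(typed.values())) for name, typed in keys.items()}
        summary[action].append(plain_keys)
    return summary
```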

By combining Lambda functions, Amazon API Gateway, and Amazon DynamoDB, developers can create a scalable Serverless API that efficiently meets the demands of modern applications. These components work together to provide a flexible development experience while optimizing resource usage and costs.

Setting Up Your Serverless API

Building a Serverless API involves a series of steps, from creating Lambda functions to configuring API Gateway endpoints and integrating with DynamoDB. The following sections walk through each step of setting up your Serverless API on AWS.

Creating Lambda Functions

Lambda functions are the heart of your Serverless API, handling specific tasks and business logic. Here is an overview of creating and deploying Lambda functions:

- Development Environment Setup: Begin by setting up your preferred development environment and AWS CLI for seamless interaction with AWS services.

- Creating a Lambda Function: Use the AWS Management Console or your preferred deployment tool to create a new Lambda function. Define the function’s runtime, execution role, and other configuration settings.

- Code Implementation: Write the code for your Lambda function, ensuring it aligns with the desired functionality of your Serverless API.

- Testing Locally: Before deployment, test your Lambda function locally, for example with the AWS SAM CLI (`sam local invoke`), to identify and resolve any issues.

- Deploying the Function: Use your deployment tool (for example the AWS CLI, AWS SAM, or the Serverless Framework) to upload your function code to AWS Lambda. Once deployed, the function is ready to respond to API requests.
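As one possible deployment path, the sketch below packages a single-file Python handler into an in-memory .zip archive and uploads it with the AWS SDK for Python (boto3) `create_function` call. The function name, role ARN, runtime, timeout, and memory values are assumptions; the `client` parameter lets you pass a stub to dry-run the code without AWS credentials. In practice, tools such as AWS SAM or the Serverless Framework handle this packaging for you.

```python
import io
import zipfile

# Source of the function being deployed (a trivial placeholder handler).
HANDLER_SOURCE = '''
def lambda_handler(event, context):
    return {"statusCode": 200, "body": "ok"}
'''


def build_zip(source: str, filename: str = "app.py") -> bytes:
    """Package a single-file function as an in-memory .zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(filename, source)
    return buffer.getvalue()


def deploy(function_name: str, role_arn: str, client=None) -> dict:
    """Create the Lambda function; pass a stub client to skip calling AWS."""
    if client is None:
        import boto3  # only needed for a real deployment
        client = boto3.client("lambda")
    return client.create_function(
        FunctionName=function_name,
        Runtime="python3.12",
        Role=role_arn,                   # execution role the function assumes
        Handler="app.lambda_handler",    # <module file>.<function name>
        Code={"ZipFile": build_zip(HANDLER_SOURCE)},
        Timeout=10,
        MemorySize=128,
    )
```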

Creating Lambda functions involves a mix of configuration, coding, and testing. The serverless nature of Lambda ensures automatic scaling, allowing your functions to handle varying workloads efficiently.

Configuring API Gateway Endpoints

API Gateway acts as the entry point for clients, managing traffic and directing requests to the appropriate Lambda functions. Configuring API Gateway involves several crucial steps:

- Creating an API: Use the API Gateway console to create a new API, defining the name and resource paths for your endpoints.

- Creating Resources and Methods: Structure your API by creating resources (e.g., /users) and defining HTTP methods (GET, POST) associated with each resource.

- Connecting to Lambda Functions: Configure each API method to connect to the corresponding Lambda function. This establishes the link between API Gateway and your serverless backend.

- Enabling CORS: If needed, configure Cross-Origin Resource Sharing (CORS) settings to allow your API to be accessed by web applications from different domains.
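With a Lambda proxy integration, API Gateway returns the function’s response unchanged, so the function itself must add CORS headers to its responses (enabling CORS in the console handles the OPTIONS preflight on REST APIs). A sketch, assuming a single allowed origin, `https://app.example.com`, which is a placeholder:

```python
import json

ALLOWED_ORIGIN = "https://app.example.com"  # placeholder front-end origin

CORS_HEADERS = {
    "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
    "Access-Control-Allow-Methods": "GET,POST,OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type,Authorization",
}


def respond(status: int, body: dict) -> dict:
    """Build a proxy-integration response that browsers accept cross-origin."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json", **CORS_HEADERS},
        "body": json.dumps(body),
    }


def lambda_handler(event, context):
    return respond(200, {"users": []})
```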

API Gateway simplifies the process of defining, managing, and securing APIs. The visual interface allows for easy configuration of endpoints, and the integration with Lambda functions streamlines the development workflow.

Integrating with DynamoDB

DynamoDB serves as the serverless NoSQL database for your API, providing scalable and low-latency storage. Let’s explore the steps to integrate your Serverless API with DynamoDB:

- Creating a DynamoDB Table: Use the DynamoDB console to create a table, defining the table name and primary key attributes.

- Configuring IAM Permissions: Ensure that each Lambda function’s execution role grants the DynamoDB actions it needs (for example dynamodb:GetItem and dynamodb:PutItem) on the specific table, and nothing more.

- Implementing Data Access Functions: Write Lambda functions that read, write, and update items in DynamoDB.

- Testing Database Interactions: Test the integration between your Lambda functions and DynamoDB to verify data interactions.
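A sketch of data access functions using the boto3 DynamoDB `Table` resource (`put_item`, `get_item`, `update_item`). It assumes a hypothetical table keyed by `userId` whose name comes from a hypothetical `USERS_TABLE` environment variable; the optional `table` argument lets tests substitute a stub. The function’s execution role would need the matching `dynamodb:PutItem`, `dynamodb:GetItem`, and `dynamodb:UpdateItem` permissions.

```python
import os


def get_table(table=None):
    """Return the DynamoDB Table resource, or an injected stub for tests."""
    if table is not None:
        return table
    import boto3  # imported lazily so the module loads without AWS credentials
    # USERS_TABLE is a hypothetical environment variable set on the function.
    return boto3.resource("dynamodb").Table(os.environ["USERS_TABLE"])


def create_user(user_id: str, name: str, table=None) -> None:
    get_table(table).put_item(Item={"userId": user_id, "name": name})


def read_user(user_id: str, table=None):
    # get_item omits the "Item" key when no item has this primary key.
    response = get_table(table).get_item(Key={"userId": user_id})
    return response.get("Item")


def rename_user(user_id: str, new_name: str, table=None) -> dict:
    response = get_table(table).update_item(
        Key={"userId": user_id},
        # "name" is a DynamoDB reserved word, hence the #n placeholder.
        UpdateExpression="SET #n = :name",
        ExpressionAttributeNames={"#n": "name"},
        ExpressionAttributeValues={":name": new_name},
        ReturnValues="ALL_NEW",
    )
    return response["Attributes"]
```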

DynamoDB seamlessly integrates with Lambda functions, providing a fully managed, serverless database solution. The combination of API Gateway, Lambda, and DynamoDB creates a cohesive ecosystem for developing robust and scalable Serverless APIs on AWS.

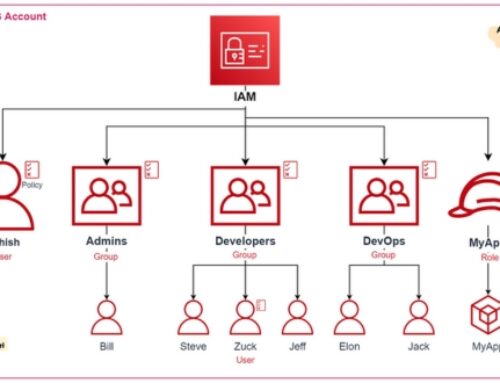

Authentication and Authorization in Serverless API

Securing your Serverless API is paramount to protect sensitive data and ensure proper access control. This involves implementing robust authentication mechanisms in API Gateway and setting up role-based access control (RBAC). Let’s explore these aspects in detail:

Securing API Gateway Endpoints

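- API Keys:

- Overview: API keys identify the clients calling your API so that API Gateway can apply usage plans (throttling limits and request quotas) per client. AWS advises against relying on API keys for authentication or authorization, because keys are easily shared and do not prove the caller’s identity.

- Implementation: Configure API Gateway to require API keys for specific methods and associate the keys with a usage plan, so that only clients with valid keys can make requests and each client stays within its quota.

- Best Practices: Rotate API keys regularly, monitor key usage to detect and mitigate suspicious activity, and combine keys with a real authorization mechanism such as IAM.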

- IAM Authentication:

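- Overview: AWS Identity and Access Management (IAM) authorization requires callers to sign requests with AWS Signature Version 4 using their AWS credentials, providing a more robust and flexible authentication mechanism.

- Implementation: Set the authorization type of the relevant API Gateway methods to AWS_IAM, then grant callers the execute-api:Invoke permission on the specific API methods and resources they may call.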

- Best Practices: Use IAM roles to grant permissions to AWS resources, providing secure access to Lambda functions and other backend services.

API keys and IAM authorization serve different purposes: API keys provide per-client throttling and quotas, while IAM authorization verifies who is calling. Because API keys alone do not prove identity, rely on IAM (or another authorizer) to ensure that only authorized clients can interact with your Serverless API, in line with AWS’s security best practices.
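For reference, clients present an API Gateway API key in the `x-api-key` request header. The sketch below only builds such a request with Python’s standard library; the endpoint URL and key value are placeholders. Since the key identifies the caller for its usage plan rather than proving identity, protected methods should still use IAM or another authorizer.

```python
import urllib.request

# Placeholders: substitute your API's invoke URL and a key from its usage plan.
API_URL = "https://abc123.execute-api.us-east-1.amazonaws.com/prod/users"
API_KEY = "example-key-value"  # load real keys from a secret store, not source code


def build_request(url: str, api_key: str) -> urllib.request.Request:
    """Prepare a GET request that carries an API Gateway API key."""
    return urllib.request.Request(url, headers={"x-api-key": api_key}, method="GET")


request = build_request(API_URL, API_KEY)
# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```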

Role-Based Access Control (RBAC)

- Configuring Roles in IAM:

- Overview: RBAC allows you to control access based on user roles, ensuring that each user has the appropriate permissions.

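- Implementation: Create an IAM role for each user role (e.g., admin, user), defining the specific actions each may perform. End users typically obtain temporary credentials for these roles through a federation service such as Amazon Cognito identity pools.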

- Best Practices: Follow the principle of least privilege, granting users the minimum permissions required for their tasks to enhance security.

- Mapping Roles to API Gateway:

- Overview: Once IAM roles are defined, map them to API Gateway to control access to specific endpoints.

- Implementation: Set the authorization type of the protected methods to AWS_IAM and attach to each role a policy that allows execute-api:Invoke only on the methods that role may call, ensuring that users can perform only authorized actions.

- Best Practices: Regularly review and update role mappings to adapt to changes in your application’s access requirements.

RBAC enhances the security of your Serverless API by ensuring that users have precisely the permissions they need. Mapping IAM roles to API Gateway provides a centralized and manageable approach to access control, aligning with security best practices and compliance standards.
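Under IAM authorization, API Gateway accepts a signed request only if the caller’s identity is allowed `execute-api:Invoke` on that method’s ARN, which has the form `arn:aws:execute-api:region:account-id:api-id/stage/METHOD/resource-path`. A sketch that generates such policies for two hypothetical roles; the region, account ID, API ID, and stage are placeholders:

```python
import json


def invoke_policy(region: str, account_id: str, api_id: str, stage: str,
                  allowed: list[tuple[str, str]]) -> str:
    """Build an IAM policy allowing execute-api:Invoke on specific API methods.

    `allowed` lists (HTTP method, resource path) pairs, e.g. ("GET", "users/*").
    """
    resources = [
        f"arn:aws:execute-api:{region}:{account_id}:{api_id}/{stage}/{method}/{path}"
        for method, path in allowed
    ]
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "execute-api:Invoke", "Resource": resources}
        ],
    }
    return json.dumps(policy, indent=2)


# Hypothetical roles: readers may only fetch users; admins may also create and delete.
reader_policy = invoke_policy("us-east-1", "123456789012", "abc123", "prod",
                              [("GET", "users/*")])
admin_policy = invoke_policy("us-east-1", "123456789012", "abc123", "prod",
                             [("GET", "users/*"), ("POST", "users"), ("DELETE", "users/*")])
```

Attaching `reader_policy` to the reader role and `admin_policy` to the admin role gives each role exactly the endpoints it needs, in line with least privilege.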

In conclusion, a robust authentication and authorization setup is crucial for the security and integrity of your Serverless API. Combining API keys for usage control with IAM-based RBAC for authorization creates a comprehensive security framework, safeguarding your API against unauthorized access and potential security threats.

Testing and Debugging Serverless APIs

Ensuring the reliability and performance of your Serverless API requires a comprehensive testing and debugging strategy. Let’s delve into the key aspects of testing Lambda functions and debugging in the Serverless environment.

Unit Testing Lambda Functions

- Strategies for Testing:

- Input/Output Validation: Test Lambda functions with various input scenarios, validating the correctness of output.

- Exception Handling: Evaluate how functions handle unexpected inputs or errors, ensuring robust error handling mechanisms.

- Load Testing: Beyond unit tests, simulate many concurrent invocations against a deployed stage to assess scalability and performance under load.

- Testing Frameworks:

- AWS SAM CLI: Use sam local invoke and sam local start-api to run Lambda functions and API endpoints locally in a Lambda-like container on your development machine.

- Serverless Framework: Leverage the Serverless Framework to facilitate easy testing and deployment of Lambda functions.

- Mocking Dependencies:

- Mock external dependencies, such as databases or API calls, to isolate and test specific Lambda functions independently.

- Use tools such as Sinon.js or aws-sdk-mock (Node.js), or unittest.mock and botocore’s Stubber (Python), to simulate AWS service responses during testing.

Unit testing Lambda functions is crucial for identifying and rectifying issues at an early stage. By testing various scenarios, validating input/output, and simulating real-world conditions, you can ensure the reliability of individual functions within your Serverless API.
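A minimal unit-test sketch using Python’s built-in `unittest` and `unittest.mock`. It assumes a hypothetical `get_user_handler` that receives its DynamoDB table as a parameter, which is what makes the dependency easy to replace with a mock. Run it with `python -m unittest`.

```python
import json
import unittest
from unittest.mock import MagicMock


def get_user_handler(event, context, table):
    """Look up a user; the table is injected so tests can replace it with a mock."""
    user_id = event["pathParameters"]["id"]
    item = table.get_item(Key={"userId": user_id}).get("Item")
    if item is None:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {"statusCode": 200, "body": json.dumps(item)}


class GetUserHandlerTest(unittest.TestCase):
    def test_returns_existing_user(self):
        # Input/output validation against a mocked DynamoDB response.
        table = MagicMock()
        table.get_item.return_value = {"Item": {"userId": "42", "name": "Ada"}}
        resp = get_user_handler({"pathParameters": {"id": "42"}}, None, table)
        self.assertEqual(resp["statusCode"], 200)
        self.assertEqual(json.loads(resp["body"])["name"], "Ada")
        table.get_item.assert_called_once_with(Key={"userId": "42"})

    def test_missing_user_returns_404(self):
        table = MagicMock()
        table.get_item.return_value = {}  # DynamoDB omits "Item" when nothing matches
        resp = get_user_handler({"pathParameters": {"id": "nope"}}, None, table)
        self.assertEqual(resp["statusCode"], 404)

    def test_malformed_event_raises(self):
        # Exception handling: an event without path parameters is rejected.
        with self.assertRaises(KeyError):
            get_user_handler({}, None, MagicMock())
```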

Debugging in Serverless Environment

- Logging and Monitoring:

- Implement detailed logging within Lambda functions to capture relevant information during execution.

- Utilize AWS CloudWatch Logs for centralized logging and monitoring of function executions.

- Tracing with AWS X-Ray:

- Integrate AWS X-Ray for distributed tracing, allowing you to visualize and understand the flow of requests across different components.

- Identify bottlenecks, errors, and latency issues by tracing the execution path of requests.

- Step-Through Debugging:

- Use the AWS Toolkit for Visual Studio Code or a JetBrains IDE such as IntelliJ, together with the AWS SAM CLI, to set breakpoints and step through function code running locally in a Lambda-like container.

- Reproduce production issues locally by invoking the function with sample or captured event payloads.

Debugging in a Serverless environment requires a combination of logging, monitoring, and the right tools. By implementing robust logging practices and leveraging AWS X-Ray for tracing, you can gain insights into the execution flow and performance of your Serverless API. Additionally, step-through debugging of locally emulated functions lets you identify and resolve issues without touching the production environment.
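To support detailed logging, a common pattern is to wrap the handler so that every failure is logged with the request ID and full traceback before the error propagates to Lambda. A sketch, assuming the Python runtime, where output from the root logger goes to CloudWatch Logs; the `quantity` field is illustrative, and logging entire events can capture sensitive data, so redact fields as needed.

```python
import functools
import json
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def log_errors(handler):
    """Wrap a handler so failures are logged with the request ID, then re-raised."""
    @functools.wraps(handler)
    def wrapper(event, context):
        # The real Lambda context exposes aws_request_id; fall back when testing locally.
        request_id = getattr(context, "aws_request_id", "local")
        logger.info("request %s started", request_id)
        try:
            return handler(event, context)
        except Exception:
            # logger.exception records the traceback alongside the message.
            logger.exception("request %s failed; event=%s",
                             request_id, json.dumps(event, default=str))
            raise
    return wrapper


@log_errors
def lambda_handler(event, context):
    quantity = int(event["quantity"])  # raises ValueError for non-numeric input
    return {"statusCode": 200, "body": json.dumps({"quantity": quantity})}
```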

In conclusion, thorough testing and effective debugging practices are integral to maintaining the reliability and performance of your Serverless API. By incorporating unit testing strategies for Lambda functions and employing advanced debugging tools in the Serverless environment, you can identify and address potential issues proactively, ensuring a seamless and robust API experience for users.

Optimizing Serverless API Performance

Ensuring optimal performance for your Serverless API is essential to deliver a responsive and efficient user experience. Let’s explore strategies for mitigating cold starts and implementing caching and throttling mechanisms.

Cold Start Mitigation

- Proactive Warming:

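- Implement scheduled, lightweight invocations of your Lambda functions to keep them warm.

- Use Amazon EventBridge (formerly CloudWatch Events) scheduled rules or external services to trigger periodic invocations. Each ping keeps only one execution environment warm, so this helps mainly for low-traffic functions.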

- Optimizing Package Size:

- Minimize the size of your Lambda deployment packages by excluding unnecessary dependencies.

- Consider using layers to separate common dependencies and reduce the size of individual functions.

- Provisioned Concurrency:

- Configure provisioned concurrency to keep a set number of execution environments initialized ahead of requests, which removes cold starts for that capacity.

- Use Application Auto Scaling to adjust provisioned concurrency on a schedule or based on utilization.

Mitigating cold starts is crucial for maintaining low-latency responses in Serverless APIs. By proactively warming up functions, optimizing package sizes, and using provisioned concurrency where latency matters most, you can minimize the impact of cold starts and ensure a consistently responsive API.
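The sketch below illustrates two of these techniques, assuming warming pings come from an Amazon EventBridge scheduled rule: expensive initialization runs once at module scope and is reused by warm invocations, and the handler returns immediately for scheduled events. `EXPENSIVE_CONFIG` is a stand-in for SDK clients, configuration, or models.

```python
import json
import time

# Module-scope code runs once per execution environment (during the cold
# start) and is reused by every warm invocation that follows.
INIT_TIME = time.time()
EXPENSIVE_CONFIG = {"greeting": "hello"}  # stand-in for clients, config, models


def is_warmup(event) -> bool:
    """EventBridge scheduled rules deliver events whose source is "aws.events"."""
    return isinstance(event, dict) and event.get("source") == "aws.events"


def lambda_handler(event, context):
    if is_warmup(event):
        # Do no real work: the ping only keeps this environment alive.
        return {"warmed": True}
    return {
        "statusCode": 200,
        "body": json.dumps({
            "message": EXPENSIVE_CONFIG["greeting"],
            "environment_age_s": round(time.time() - INIT_TIME, 3),
        }),
    }
```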

Caching and Throttling

- Implementing Caching:

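- Use caching mechanisms, such as API Gateway response caching (available for REST APIs), Amazon ElastiCache, or in-memory caching within Lambda functions, to store frequently accessed data. In-memory caches last only as long as an individual execution environment.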

- Define cache expiration policies to balance data freshness and performance.

- Request Throttling:

- Implement throttling policies in API Gateway to control the rate of incoming requests.

- Use AWS WAF (Web Application Firewall) to set up rate limiting and protect against potential abuse.

- Fine-tuning Throttling Settings:

- Adjust throttling settings based on the nature of your API and expected traffic patterns.

- Monitor and analyze API usage to identify opportunities for adjusting throttling thresholds.

Caching and throttling are essential strategies for optimizing Serverless API performance. By implementing effective caching mechanisms, you can reduce the need for repeated computations and enhance response times. Throttling helps control incoming traffic, preventing overloads and ensuring a consistent quality of service for users.
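For in-memory caching inside a function, a minimal time-to-live cache at module scope is often enough. It survives across warm invocations of the same execution environment, but it is not shared between environments and disappears when Lambda recycles one, so it suits data that can tolerate some staleness. `loader` stands in for the expensive lookup (for example a DynamoDB read), and the 60-second TTL is an assumption.

```python
import time

_CACHE: dict = {}        # lives as long as this execution environment
CACHE_TTL_SECONDS = 60   # assumed freshness window; tune per data set


def cached(key, loader, ttl=CACHE_TTL_SECONDS, now=time.monotonic):
    """Return a cached value, calling loader() only if the entry is missing or stale."""
    entry = _CACHE.get(key)
    current = now()
    if entry is not None and current - entry[1] < ttl:
        return entry[0]            # fresh hit: skip the expensive lookup
    value = loader()
    _CACHE[key] = (value, current)  # store the value with its load time
    return value
```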

In conclusion, optimizing the performance of your Serverless API involves addressing challenges such as cold starts, implementing caching strategies, and fine-tuning throttling settings. By adopting proactive measures to mitigate cold starts and implementing efficient caching and throttling mechanisms, you can deliver a high-performing and reliable Serverless API for your users.

Monitoring and Logging in Serverless API

Efficient monitoring and logging practices are vital for maintaining the health and performance of your Serverless API. Let’s delve into integrating AWS CloudWatch for monitoring Lambda functions and explore best practices for logging.

AWS CloudWatch Integration

- Metrics and Alarms:

- Lambda publishes key metrics to CloudWatch automatically, including Invocations, Errors, Duration, and Throttles; use them to track invocation counts, error rates, and execution duration.

- Establish CloudWatch Alarms to receive notifications for predefined threshold breaches, enabling proactive issue resolution.

- X-Ray Tracing:

- Enable AWS X-Ray for distributed tracing, allowing you to visualize and analyze the flow of requests across your Serverless API components.

- Gain insights into performance bottlenecks and latency issues using X-Ray traces.
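As an example of a threshold alarm, the sketch below builds arguments for the CloudWatch `put_metric_alarm` API on the `Errors` metric that Lambda publishes in the `AWS/Lambda` namespace. The function name, SNS topic ARN, five-minute period, and threshold of five errors are assumptions; the `client` parameter accepts a stub for testing.

```python
def error_alarm_params(function_name: str, sns_topic_arn: str,
                       threshold: float = 5.0) -> dict:
    """Arguments for CloudWatch put_metric_alarm on one function's Errors metric."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",          # Lambda publishes its metrics here
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 300,                       # evaluate 5-minute buckets
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no data points means no errors
        "AlarmActions": [sns_topic_arn],     # notify this SNS topic on breach
    }


def create_error_alarm(function_name: str, sns_topic_arn: str, client=None) -> None:
    if client is None:
        import boto3
        client = boto3.client("cloudwatch")
    client.put_metric_alarm(**error_alarm_params(function_name, sns_topic_arn))
```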

Logging Best Practices

- Structured Logging:

- Adopt structured logging formats to enhance the readability and searchability of logs.

- Include relevant contextual information in log entries, such as request IDs and timestamps.

- Centralized Log Storage:

- Lambda sends function output to CloudWatch Logs automatically, provided the execution role has permission to write logs, giving you centralized storage and easy retrieval.

- Leverage CloudWatch Logs Insights for efficient log analysis with queries.
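A minimal structured-logging sketch: it prints one JSON object per line, which Lambda forwards to CloudWatch Logs, so CloudWatch Logs Insights can discover the fields and filter on them. The field names (`level`, `request_id`, `order_id`) are arbitrary choices for illustration.

```python
import json
from datetime import datetime, timezone


def log_json(level: str, message: str, context=None, **fields) -> str:
    """Print one JSON object per line; Lambda forwards stdout to CloudWatch Logs."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "level": level,
        "message": message,
        # aws_request_id is set on the real Lambda context object.
        "request_id": getattr(context, "aws_request_id", None),
        **fields,
    }
    line = json.dumps(record, default=str)
    print(line)
    return line


def lambda_handler(event, context):
    log_json("INFO", "order received", context, order_id=event.get("orderId"))
    return {"statusCode": 202}
```

A Logs Insights query such as `fields @timestamp, message | filter level = "ERROR"` could then isolate failures.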

Integrating AWS CloudWatch provides a comprehensive solution for monitoring Lambda functions. By configuring metrics, alarms, and leveraging X-Ray for tracing, you can gain actionable insights into the performance and behavior of your Serverless API.

Logging best practices, including structured logging and centralized storage, contribute to effective debugging and optimization. CloudWatch Logs becomes a valuable tool for analyzing logs, diagnosing issues, and improving the overall reliability of your Serverless API.

In summary, robust monitoring and logging practices, coupled with CloudWatch integration and X-Ray tracing, empower you to proactively manage the performance and troubleshoot potential issues in your Serverless API.

Scaling Serverless APIs

Efficient scaling is crucial to accommodate varying workloads and ensure optimal performance for your Serverless API. Explore strategies for auto-scaling Lambda functions and handling unexpected traffic spikes.

Auto-Scaling Lambda Functions

- Dynamic Scaling:

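- Lambda scales on-demand concurrency automatically, adding execution environments as requests arrive, up to your account’s concurrency quota; no scaling policy is required for this.

- Use reserved concurrency to cap or guarantee capacity for individual functions, and Application Auto Scaling policies to adjust provisioned concurrency where consistently low latency matters.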
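Provisioned concurrency is the part of Lambda scaling that accepts scaling policies, via Application Auto Scaling. A sketch that registers a function alias as a scalable target and attaches a target-tracking policy on the `LambdaProvisionedConcurrencyUtilization` metric; the alias name, the 1 to 20 capacity range, and the 70% target are assumptions, and the `client` parameter accepts a stub.

```python
def configure_provisioned_concurrency_scaling(function_name, alias, min_capacity=1,
                                              max_capacity=20, target=0.7, client=None):
    """Keep provisioned-concurrency utilization near `target` on a function alias."""
    if client is None:
        import boto3
        client = boto3.client("application-autoscaling")
    # Provisioned concurrency applies to an alias or version, never $LATEST.
    resource_id = f"function:{function_name}:{alias}"
    dimension = "lambda:function:ProvisionedConcurrency"
    client.register_scalable_target(
        ServiceNamespace="lambda",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_capacity,
        MaxCapacity=max_capacity,
    )
    client.put_scaling_policy(
        PolicyName=f"{function_name}-{alias}-utilization",
        ServiceNamespace="lambda",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": target,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization"
            },
        },
    )
    return resource_id
```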

Handling API Traffic Spikes

- Proactive Capacity Planning:

- Anticipate potential traffic spikes by analyzing historical data and trends.

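- Implement proactive capacity planning to scale resources ahead of anticipated demand, for example by scheduling higher provisioned concurrency and requesting concurrency quota increases before expected peaks, minimizing the impact of sudden increases in traffic.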

- Caching Strategies:

- Utilize caching mechanisms to offload repetitive requests and reduce the strain on serverless resources.

- Implement intelligent caching strategies to enhance response times during traffic spikes.
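Proactive capacity planning can take the form of scheduled scaling. The sketch below raises the provisioned-concurrency floor before a known busy period, assuming the function alias is already registered as an Application Auto Scaling scalable target; the schedule and capacities are hypothetical values you would derive from historical traffic.

```python
def schedule_peak_capacity(function_name, alias, cron_utc, peak_min, peak_max,
                           client=None) -> None:
    """Raise provisioned concurrency ahead of a predictable traffic peak."""
    if client is None:
        import boto3
        client = boto3.client("application-autoscaling")
    client.put_scheduled_action(
        ServiceNamespace="lambda",
        ScheduledActionName=f"{function_name}-{alias}-peak",
        ResourceId=f"function:{function_name}:{alias}",
        ScalableDimension="lambda:function:ProvisionedConcurrency",
        Schedule=cron_utc,  # e.g. "cron(45 11 * * ? *)" = 11:45 UTC every day
        ScalableTargetAction={"MinCapacity": peak_min, "MaxCapacity": peak_max},
    )
```

A second scheduled action would normally lower the capacity again after the peak.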

Auto-scaling Lambda functions is a fundamental aspect of optimizing resource utilization in a Serverless API environment. Lambda’s built-in scaling, combined with well-chosen reserved and provisioned concurrency settings, lets your infrastructure adjust automatically to varying levels of demand, ensuring optimal performance and cost-effectiveness.

Handling API traffic spikes requires a combination of proactive planning and reactive measures. Proactively analyze historical data to predict potential spikes and implement caching strategies to mitigate the impact. This approach ensures that your Serverless API can seamlessly handle sudden increases in traffic while maintaining responsiveness.

In conclusion, mastering the art of scaling in Serverless API development involves dynamic auto-scaling configurations and a proactive approach to traffic management. These strategies empower your Serverless API to gracefully handle fluctuations in demand, providing a scalable and resilient architecture.

Serverless API Best Practices

Optimize your Serverless API development with industry-proven best practices that focus on efficient architecture design and robust security considerations.

Designing for Serverless Architecture

- Microservices Approach:

- Embrace a microservices architecture to enhance scalability, maintainability, and flexibility in your Serverless API.

- Break down functionalities into smaller, independent components to simplify development and deployment.

- Event-Driven Design:

- Leverage event-driven patterns to enable seamless communication between different components in a serverless environment.

- Design APIs to respond to events, promoting decoupling and asynchronous communication.
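One way to apply event-driven design on AWS is to have an API function publish events to Amazon EventBridge and let other functions subscribe through rules, so the API never calls downstream services directly. A sketch using the boto3 `put_events` call; the source name `com.example.orders` and the `OrderCreated` detail type are illustrative.

```python
import json


def publish_order_created(order: dict, client=None, bus_name: str = "default") -> dict:
    """Announce a domain event instead of calling downstream services directly."""
    if client is None:
        import boto3
        client = boto3.client("events")
    response = client.put_events(
        Entries=[{
            "Source": "com.example.orders",  # hypothetical application source name
            "DetailType": "OrderCreated",
            "Detail": json.dumps(order),      # Detail must be a JSON string
            "EventBusName": bus_name,
        }]
    )
    # put_events reports per-entry failures in the response instead of raising.
    if response.get("FailedEntryCount", 0):
        raise RuntimeError(f"EventBridge rejected entries: {response['Entries']}")
    return response
```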

Security Considerations

- Data Encryption:

- Encrypt data in transit (API Gateway endpoints use HTTPS/TLS) and at rest to protect its confidentiality and integrity.

- Leverage AWS Key Management Service (KMS) for robust encryption key management.

- Access Control Policies:

- Define fine-grained access control policies using AWS Identity and Access Management (IAM) to restrict permissions based on roles and responsibilities.

- Regularly audit and update access policies to align with evolving security requirements.
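DynamoDB encrypts every table at rest by default with an AWS owned key; if you want to control the key yourself in AWS KMS, specify it when creating the table. A sketch of the `create_table` arguments, assuming a hypothetical on-demand `users` table keyed by `userId` and an existing customer managed key whose ARN you supply:

```python
def users_table_params(table_name: str, kms_key_arn: str) -> dict:
    """create_table arguments for an on-demand table encrypted with your own KMS key."""
    return {
        "TableName": table_name,
        "KeySchema": [{"AttributeName": "userId", "KeyType": "HASH"}],
        "AttributeDefinitions": [{"AttributeName": "userId", "AttributeType": "S"}],
        "BillingMode": "PAY_PER_REQUEST",  # on-demand capacity, no throughput to set
        "SSESpecification": {
            "Enabled": True,
            "SSEType": "KMS",
            "KMSMasterKeyId": kms_key_arn,  # customer managed key in AWS KMS
        },
    }


def create_users_table(table_name: str, kms_key_arn: str, client=None) -> dict:
    if client is None:
        import boto3
        client = boto3.client("dynamodb")
    return client.create_table(**users_table_params(table_name, kms_key_arn))
```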

Event-driven design principles play a pivotal role in serverless architectures. Embrace these patterns to enable components to communicate seamlessly through events, fostering decoupling and asynchronous communication. This approach aligns with the serverless paradigm, where functions respond to events triggered by various sources.

Security is paramount in a serverless environment. Implement robust data encryption practices, ensuring end-to-end protection for sensitive information. Utilize access control policies through AWS IAM to enforce least privilege principles, safeguarding your Serverless API against unauthorized access and potential security threats.

In conclusion, adopting best practices in designing and securing your Serverless API is integral to achieving optimal performance, scalability, and resilience in a serverless architecture.

Future Trends in Serverless API Development

Stay ahead of the curve by exploring the exciting future trends and emerging technologies in the ever-evolving landscape of serverless computing.

Emerging Technologies

- Edge Computing Integration:

- Witness the convergence of serverless computing and edge computing, enabling faster response times and reduced latency for distributed applications.

- Containerization Compatibility:

- Explore the synergy between serverless and containerization technologies, offering enhanced deployment flexibility and resource isolation.

- Machine Learning Integration:

- Experience the growing integration of serverless platforms with machine learning services, which makes it easier for developers to build intelligent, data-driven applications.

- Event-Driven Real-Time Architectures:

- Embrace the evolution of event-driven architectures, facilitating real-time processing and enabling applications to respond dynamically to events.

As the serverless landscape continues to evolve, these emerging technologies promise to shape the future of serverless API development, opening up new possibilities for innovation and efficiency.

Edge computing integration is already visible in AWS offerings such as Lambda@Edge and CloudFront Functions, which run code at CloudFront edge locations close to users.

Containerization compatibility is another area gaining prominence. Lambda can run functions packaged as container images, letting teams reuse their container build tooling while keeping the serverless operating model.

Machine learning integration lets serverless functions call managed machine learning services, so developers can add intelligent, data-driven features without operating the underlying infrastructure themselves.

Stay abreast of these trends to harness the full potential of serverless computing in your projects.

Conclusion: Unleashing the Power of Serverless API Development

Embark on a new approach to application development with serverless APIs. As we conclude this exploration, it’s evident that serverless architecture offers significant advantages, from scalability and cost-effectiveness to simplified deployment and maintenance.

By adopting serverless principles and leveraging AWS Lambda functions, Amazon API Gateway, and DynamoDB, developers can streamline the creation and management of APIs, paving the way for agile and efficient application development.

With an emphasis on security, performance optimization, and future trends, there is ample room for innovation. As serverless API development continues to evolve, keeping up with emerging technologies like edge computing, containerization compatibility, and machine learning integration helps you stay at the forefront of the industry.

In this dynamic landscape, future trends in serverless computing promise even greater efficiency and flexibility. Whether it’s mitigating cold start impacts, optimizing performance, or designing for serverless architectures, the journey into serverless API development is one of continual discovery and advancement.

Unleash the power of serverless computing to revolutionize your development processes, empower your teams, and propel your applications into the future of cloud-native innovation.