Introduction to Containerization and Kubernetes

Containerization: Packaging Apps for the Cloud

Imagine shipping fragile goods without proper packaging. It’s risky and inefficient. Containerization solves this for software development by packaging applications with all their dependencies (libraries, code, configurations) into standardized units called containers.

Think of these containers as insulated, portable shipping boxes. Each container runs independently, ensuring consistent behavior regardless of the underlying infrastructure. This offers several benefits:

- Faster development: Build and deploy apps quicker by isolating them from environment variations.

- Scalability: Easily scale applications up or down by starting or stopping container instances.

- Resource efficiency: Containers share the host operating system, minimizing resource usage compared to virtual machines.

- Portability: Run your containers across different environments (cloud, on-premises) without modification.

Kubernetes: The Container Conductor

Managing hundreds or thousands of containers can be chaotic. Enter Kubernetes, the open-source platform that automates deployment, scaling, and management of containerized applications. Think of it as the conductor of a vast container orchestra.

Here’s how Kubernetes simplifies container management:

- Automatic deployment: Roll out new versions of your application with ease, and roll back if issues arise.

- Self-healing: Kubernetes automatically restarts failed containers and replaces Pods that stop passing their health checks. When autoscaling is configured, it can also add replicas during spikes in demand.

- High availability: Distribute your applications across multiple nodes for redundancy and fault tolerance.

- Orchestration: Manage complex workflows and services spanning multiple containers.

AWS: Your Cloud-Based Container Hub

While Kubernetes is open-source, managing it yourself can be resource-intensive.

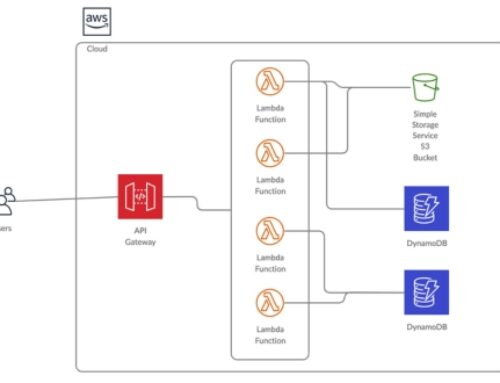

This is where Amazon Web Services (AWS) comes in, offering a suite of services and tools to simplify containerization and Kubernetes usage:

- Amazon Elastic Container Service (ECS): A managed service for running and scaling containerized applications on AWS.

- Amazon Elastic Kubernetes Service (EKS): A managed service for running and scaling Kubernetes clusters on AWS.

- Amazon ECR (Elastic Container Registry): A secure, private registry for storing and managing container images.

- AWS Fargate: Serverless compute engine for running containers without managing servers.

These services enable you to:

- Focus on app development: Leverage managed services to delegate infrastructure management.

- Scale seamlessly: Automatically scale your containerized applications based on demand.

- Benefit from security and compliance: Utilize AWS’s robust security features and compliance certifications.

- Reduce costs: Pay only for the resources you use with pay-as-you-go pricing models.

By combining containerization with Kubernetes and AWS, you can achieve:

- Faster time to market: Deploy and iterate on your applications rapidly.

- Operational efficiency: Simplify management and focus on core business logic.

- Scalability and agility: Adapt to changing demands and grow your applications seamlessly.

- Reduced costs: Optimize resource utilization and pay only for what you use.

Getting Started with AWS Containerization

Dive into AWS ECS: Your Managed Container Orchestrator

Ready to unleash the power of containerized applications on AWS? Look no further than Amazon Elastic Container Service (ECS), a fully managed orchestration service that takes care of the heavy lifting. Let’s dive into its features and how to get started:

What is ECS?

ECS simplifies container management by automating tasks like deployment, scaling, and load balancing. Think of it as your personal conductor, ensuring your containerized applications run smoothly and efficiently.

Benefits of using ECS:

- Focus on your code, not infrastructure: Delegate management to ECS and spend more time building great applications.

- Scalability on demand: Easily scale your containerized applications up or down based on traffic or resource needs.

- High availability and fault tolerance: Distribute your applications across multiple nodes for resilience and uptime.

- Integration with other AWS services: Leverage the vast ecosystem of AWS services for storage, networking, and more.

Getting started with ECS:

- Choose your deployment model: ECS offers two main launch types, Fargate (serverless; AWS manages the underlying compute) and EC2 (instances that you provision and manage yourself).

- Create a task definition: Define your container image, resources, and environment variables.

- Create a cluster: This is where your containers will run. You can choose from various configurations based on your needs.

- Launch your service: Deploy your task definition to your cluster and watch your application come to life!
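To make the task definition step more concrete, here is a minimal sketch that builds one as a plain Python dictionary and prints it as JSON. The family name `web`, the public nginx image, and the `STAGE` variable are illustrative assumptions, not values from this article; the field names follow the ECS task definition format.

```python
import json


def build_task_definition(family, image, cpu="256", memory="512", env=None):
    """Return a dict shaped like an ECS task definition for one container."""
    return {
        "family": family,
        "networkMode": "awsvpc",
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,        # task-level CPU units, passed as a string
        "memory": memory,  # task-level memory in MiB, passed as a string
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
                # ECS expects environment variables as name/value pairs.
                "environment": [
                    {"name": key, "value": value}
                    for key, value in sorted((env or {}).items())
                ],
            }
        ],
    }


# Hypothetical example values.
task_def = build_task_definition(
    "web", "public.ecr.aws/nginx/nginx:latest", env={"STAGE": "dev"}
)
print(json.dumps(task_def, indent=2))
```

Saved to a file, output like this could be registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`. A real task definition would usually also set an execution role and log configuration.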

Go Serverless with AWS Fargate: Containers Without the Servers

Imagine running containers without managing servers! That’s the magic of AWS Fargate, a serverless compute engine for containers.

Benefits of using Fargate:

- No server management: Focus on your application logic and leave the infrastructure to Fargate.

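- Automatic scaling: Fargate provisions the right amount of compute for each task you launch, so there is no cluster capacity to manage. Combined with ECS Service Auto Scaling, the number of running tasks can follow demand.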

- Cost-effective: Pay only for the resources your containers use, eliminating idle server costs.

- Faster deployments: Deploy your applications quickly without worrying about server provisioning.

Use cases for Fargate:

- Microservices architectures: Run each microservice in its own Fargate container for scalability and isolation.

- Batch processing: Run batch jobs like data analysis or image processing efficiently.

- Event-driven applications: Respond to events like API calls or database changes in real-time with Fargate.

Getting started with Fargate:

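- Choose your launch type: Declare Fargate compatibility in your ECS task definition, then select Fargate as the launch type when you run the task or create the service.

- Specify your container image and resources: Define the same elements as with EC2 launches. Note that Fargate requires task-level CPU and memory values and the awsvpc network mode.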

- Deploy your service: Launch your Fargate-powered service and enjoy the serverless container experience.
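The steps above can be sketched as the set of parameters you would hand to ECS when creating a Fargate service. This is only an illustration: the cluster name, subnet IDs, and security group ID are hypothetical placeholders, and the key names mirror the ECS `CreateService` request.

```python
def build_fargate_service(cluster, service_name, task_definition,
                          subnets, security_groups, desired_count=2):
    """Return keyword arguments shaped like an ECS CreateService request."""
    if not subnets:
        raise ValueError("Fargate tasks need at least one subnet")
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        "desiredCount": desired_count,
        "launchType": "FARGATE",
        # awsvpc networking gives every task its own network interface.
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "securityGroups": list(security_groups),
                "assignPublicIp": "DISABLED",
            }
        },
    }


# Hypothetical identifiers, for illustration only.
params = build_fargate_service(
    cluster="demo-cluster",
    service_name="web",
    task_definition="web:1",
    subnets=["subnet-aaaa1111", "subnet-bbbb2222"],
    security_groups=["sg-cccc3333"],
)
print(params["launchType"], params["desiredCount"])
```

With the AWS SDK for Python installed and credentials configured, a dictionary like this could be passed along as `ecs_client.create_service(**params)`.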

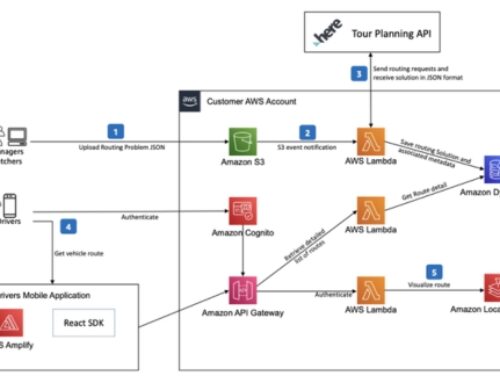

Master the Kubernetes Symphony with AWS EKS

Want the full power of Kubernetes without the management burden? Enter Amazon Elastic Kubernetes Service (EKS), a managed Kubernetes service by AWS.

What is EKS?

EKS provides a highly scalable and secure platform for running Kubernetes clusters on AWS. It handles the heavy lifting of provisioning, patching, and maintaining the Kubernetes control plane, freeing you to focus on managing your applications.

Benefits of using EKS:

- Flexibility and control: Leverage the full power of Kubernetes for advanced container orchestration.

- Integration with AWS services: Seamlessly integrate your Kubernetes clusters with other AWS services like S3, DynamoDB, and more.

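- High availability and fault tolerance: EKS runs the Kubernetes control plane across multiple Availability Zones and replaces unhealthy control plane instances automatically. Application availability still depends on how you spread replicas across nodes and zones.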

- Security and compliance: Benefit from AWS’s robust security features and compliance certifications.

Getting started with EKS:

- Create a cluster: Specify the desired cluster configuration, including node type and size.

- Access your cluster: Use the kubectl command-line tool to interact with your cluster and manage your applications.

- Deploy your applications: Deploy your containerized applications using standard Kubernetes tools and methodologies.
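Once a cluster exists, the access and deploy steps usually come down to a handful of CLI calls. The sketch below only assembles and prints those commands rather than running them; the cluster name, region, and manifest path are assumptions made for the example.

```python
import shlex


def eks_bootstrap_commands(cluster_name, region, manifest_path):
    """Return the CLI invocations for connecting to a cluster and deploying."""
    return [
        # Write the cluster's credentials into the local kubeconfig.
        ["aws", "eks", "update-kubeconfig",
         "--name", cluster_name, "--region", region],
        # Confirm the worker nodes have joined the cluster.
        ["kubectl", "get", "nodes"],
        # Submit the application manifest.
        ["kubectl", "apply", "-f", manifest_path],
    ]


commands = eks_bootstrap_commands("demo-cluster", "us-east-1", "app.json")
for argv in commands:
    print(shlex.join(argv))
```

Keeping each command as an argument list rather than one string makes it straightforward to pass to `subprocess.run` later without shell quoting problems.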

Kubernetes Essentials

Demystifying Kubernetes Essentials

Welcome to the world of Kubernetes! Let’s unpack its core concepts and empower you to navigate this container orchestration powerhouse.

Unveiling the Building Blocks: Kubernetes Architecture

Think of your Kubernetes cluster as a bustling city with various structures serving specific purposes. Here are some key components you’ll encounter:

- Pods: The basic unit of deployment, encapsulating one or more containers sharing resources. Imagine them as multi-tenant buildings housing different companies.

- Deployments: Manage the desired state of your Pods, ensuring they run with the specified number of replicas. Think of them as blueprints for building and maintaining specific types of structures in your city.

- Services: Give a set of Pods a stable network endpoint so that other workloads in the cluster can reach them and, depending on the Service type, clients outside the cluster as well. Imagine them as public transportation systems connecting different areas of your city.

- ConfigMaps: Store and manage configuration data in a key-value pair format, accessible to your application containers. Think of them as digital libraries containing vital information for businesses within your city.

- Namespaces: Create logical groupings of resources within a cluster. On their own they only scope names, but combined with RBAC, network policies, and resource quotas they help isolate applications and improve security. Imagine them as distinct districts within your city, each with its own set of infrastructure and regulations.

This is just a glimpse into the Kubernetes ecosystem. Its richness lies in the interplay of these components, enabling sophisticated container orchestration.
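As a small illustration of two of these building blocks, the following sketch builds a Namespace and a ConfigMap as Python dictionaries and prints them as a single JSON document. The names `shop` and `shop-settings` and the configuration keys are hypothetical.

```python
import json


def namespace(name):
    """Return a Namespace manifest."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def config_map(name, ns, data):
    """Return a ConfigMap manifest; ConfigMap values must be strings."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": ns},
        "data": {key: str(value) for key, value in data.items()},
    }


# Hypothetical district ("shop") and its settings.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        namespace("shop"),
        config_map("shop-settings", "shop",
                   {"LOG_LEVEL": "info", "MAX_CONNECTIONS": 50}),
    ],
}
print(json.dumps(bundle, indent=2))
```

kubectl accepts JSON as well as YAML, so the printed document can be saved and applied as is.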

Deploying Applications: Turning Code into Action

Ready to bring your containerized applications to life with Kubernetes? Buckle up! Here’s a simplified overview of the deployment process:

- Create a manifest file: This YAML file defines your application’s desired state, specifying container images, resource requirements, and configurations. Think of it as an architectural plan for your application’s deployment.

- Apply the manifest: Use the kubectl command-line tool to submit your plan to the Kubernetes cluster. Imagine submitting your building permits and plans to the city authorities.

- Kubernetes takes action: Based on your plan, Kubernetes creates Pods, launches containers, and ensures your application runs according to your specifications. Imagine construction crews executing your building plan, bringing your vision to reality.

Remember, this is a high-level view. Depending on your application complexity, you might utilize additional resources like Services and Ingress Controllers for advanced networking configurations.
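To give the manifest step some shape, here is a minimal sketch of a Deployment built as a Python dictionary. The application name, image, and resource figures are illustrative assumptions; the structure follows the `apps/v1` Deployment schema.

```python
import json


def deployment(name, image, replicas=3, port=80):
    """Return a Deployment manifest that runs `replicas` copies of `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            "resources": {
                                "requests": {"cpu": "100m", "memory": "128Mi"},
                                "limits": {"cpu": "250m", "memory": "256Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


# Hypothetical application.
manifest = deployment("web", "nginx:1.27", replicas=3)
print(json.dumps(manifest, indent=2))
```

Saving the output as `web.json` and running `kubectl apply -f web.json` corresponds to the "apply the manifest" step; Kubernetes then creates the three Pods and keeps that count in place.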

Scaling Your City: Scaling and Load Balancing in Kubernetes

As your application’s reach grows, you need to adapt. Kubernetes offers scaling and load balancing mechanisms to ensure smooth performance:

- Horizontal scaling: Increase the number of Pods running your application to distribute the workload, like adding more buildings to cater to a growing population.

- Vertical scaling: Allocate more resources (CPU, memory) to existing Pods for demanding tasks, like upgrading specific buildings with better infrastructure.

Kubernetes can automate these scaling decisions: the Horizontal Pod Autoscaler, for example, adjusts the replica count based on observed metrics such as CPU utilization. Additionally, Services and load balancers distribute traffic across your Pods, ensuring no single building gets overloaded.
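For a feel of how metric-based horizontal scaling arrives at a replica count, here is a sketch of the core rule documented for the Horizontal Pod Autoscaler: scale the current replica count by the ratio of the observed metric to the target, round up, and stay within the configured bounds. It deliberately leaves out details such as the tolerance band and stabilization windows, and the numbers are made up.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return the replica count suggested by the basic HPA ratio rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, raw))


# 4 Pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

In the example, four Pods running at 90% against a 60% target work out to six Pods, because 4 × 90 / 60 = 6.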

Advanced Kubernetes Concepts

Congratulations! You’ve grasped the Kubernetes essentials. Now, let’s explore some advanced concepts to unlock its full potential:

Managing State with StatefulSets: Beyond Stateless Containers

While containers are typically stateless, some applications, like databases and message queues, maintain state. Kubernetes offers StatefulSets to manage these applications effectively.

StatefulSets in action:

- Guarantee Pod identity: Each Pod in a StatefulSet has a stable, unique name and network identity that persist across rescheduling, so peers and storage can always find the same member. Imagine each Pod as a dedicated server with a specific role in your application.

- Persistent storage: StatefulSets use PersistentVolumeClaims, which bind to Persistent Volumes (PVs), to store application data outside of the container’s ephemeral storage, ensuring data persists even if Pods are recreated. Think of PVs as external storage units where your servers access and store their data.

- Ordered deployment and scaling: By default, StatefulSets create Pods one at a time in ordinal order and remove them in reverse order, which helps clustered applications keep their state consistent. Imagine your servers coming online and scaling in a controlled manner to avoid data corruption.
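The identity and ordering guarantees are easier to picture with names on them. The sketch below reproduces the naming convention StatefulSets use (`<name>-<ordinal>`) and the DNS name each Pod gets through its headless Service. The StatefulSet `db`, Service `db-headless`, and namespace `data` are hypothetical, and `cluster.local` is assumed as the cluster domain.

```python
def stateful_pod_identities(name, service, namespace, replicas):
    """Return (pod_name, dns_name) pairs in creation order."""
    return [
        (f"{name}-{i}",
         f"{name}-{i}.{service}.{namespace}.svc.cluster.local")
        for i in range(replicas)
    ]


def scale_down_order(name, current, target):
    """Return the Pods removed when scaling down, highest ordinal first."""
    return [f"{name}-{i}" for i in range(current - 1, target - 1, -1)]


for pod, dns in stateful_pod_identities("db", "db-headless", "data", 3):
    print(pod, dns)
print(scale_down_order("db", 3, 1))
```

Because `db-0` always refers to the same member, a replica can be configured to follow `db-0.db-headless.data.svc.cluster.local` and keep working after that Pod is rescheduled.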

Navigating the Network Labyrinth: Kubernetes Networking Models

Container networking within a cluster can be complex. It is easier to reason about as a set of layers that work together:

- Pod network: Each Pod has its own IP address, and by default every Pod can reach every other Pod in the cluster directly, without NAT. Containers inside the same Pod share one network namespace and talk to each other over localhost. Think of it as a local network within your city, connecting different buildings.

- Service network: Services provide an abstraction layer, exposing your application through a single stable virtual IP address and DNS name, regardless of which Pod handles the traffic. By default that address is reachable only inside the cluster; NodePort and LoadBalancer Services, or an Ingress, open it to the outside world. Imagine this as the published address of a city department: it stays the same no matter which clerk answers.

- Overlay network: Many network plugins implement the Pod network as a virtual network on top of the physical network, providing greater flexibility and isolation for Pods. Imagine this as a dedicated network infrastructure within your city, separate from the public network.

How you configure each layer, including which network plugin you choose, depends on your application’s communication needs and security requirements.
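To illustrate the Service layer, here is a minimal sketch that builds a Service manifest and lets you switch between an internal and an externally reachable type. The `app: web` selector and port numbers are assumptions for the example.

```python
import json

# The commonly used Service types; the default is ClusterIP.
SERVICE_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def service(name, selector, port, target_port, service_type="ClusterIP"):
    """Return a Service manifest routing `port` to `target_port` on matching Pods."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported Service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": dict(selector),
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


internal = service("web", {"app": "web"}, port=80, target_port=8080)
public = service("web-public", {"app": "web"}, port=80, target_port=8080,
                 service_type="LoadBalancer")
print(json.dumps(public, indent=2))
```

Both Services select the same Pods; only the `type` field decides whether the endpoint stays inside the cluster or is fronted by a cloud load balancer.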

Fortifying Your Cluster: Kubernetes Security Best Practices

Securing your Kubernetes cluster is crucial. Here are some essential practices:

- Role-Based Access Control (RBAC): Implement RBAC to define who can access and perform actions within your cluster, similar to granting specific permissions to different residents within your city.

- Network policies: Restrict network traffic between Pods and external resources, like firewalls controlling who can access specific buildings within your city.

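- Pod security standards: Define the security context for Pods, such as whether they may run as root or in privileged mode. PodSecurityPolicy, the original mechanism for this, was removed in Kubernetes 1.25; current clusters enforce the Pod Security Standards through the built-in Pod Security Admission controller or a policy engine. This is similar to building codes and security protocols for different structures in your city.

- Secrets management: Store sensitive information like passwords and API keys in Kubernetes Secrets rather than in images or ConfigMaps. Secret values are only base64-encoded by default, so enable encryption at rest and restrict access with RBAC, like storing them in a secure vault within your city.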

Remember, security is an ongoing process. Continuously monitor your cluster, update software, and stay informed about emerging threats to ensure your city remains secure.
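As one concrete example of these practices, the sketch below builds an RBAC Role that can only read Pods in one namespace and a RoleBinding that grants it to a single user. The namespace `shop` and the user `jane` are hypothetical.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def read_only_pod_role(namespace, role_name="pod-reader"):
    """Return a Role that allows reading Pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            # "" is the core API group, where Pods live.
            {"apiGroups": [""], "resources": ["pods"],
             "verbs": ["get", "list", "watch"]}
        ],
    }


def bind_role_to_user(namespace, role_name, user):
    """Return a RoleBinding granting `role_name` to `user` in `namespace`."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user,
                      "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name,
                    "apiGroup": RBAC_API_GROUP},
    }


role = read_only_pod_role("shop")
binding = bind_role_to_user("shop", "pod-reader", "jane")
print(json.dumps([role, binding], indent=2))
```

Listing only the verbs a user actually needs, and scoping the Role to one namespace, is the least-privilege habit the RBAC bullet above describes.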

Integrating AWS Services with Kubernetes

Having established your Kubernetes expertise, let’s explore its seamless integration with powerful AWS services to unlock even greater potential:

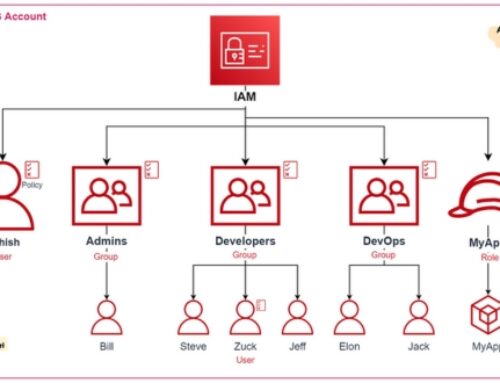

AWS IAM: Fine-grained Access Control for Your Cluster

Imagine managing access to your city’s resources through a central authority. That’s what AWS Identity and Access Management (IAM) does for your Kubernetes cluster. Here’s how it integrates:

- IAM Roles for Pods: Assign IAM roles to Pods, granting them specific permissions to access AWS resources like S3 buckets or DynamoDB tables. Imagine giving specific buildings in your city access to specific utilities or data centers.

- IAM Policies Define Access: Define fine-grained policies within IAM that control what resources and actions each role can access. Think of these as detailed regulations outlining how each building can utilize city resources.

- Kubernetes Service Account Integration: Map Kubernetes Service Accounts to IAM roles (on EKS, through the cluster’s OIDC identity provider and an annotation on the Service Account), enabling Pods to seamlessly assume those roles and access AWS resources. Imagine buildings in your city having designated access cards to utilize specific services.

Integrating IAM with Kubernetes ensures secure and controlled access to AWS resources, boosting security and compliance for your containerized applications.
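The pieces involved can be sketched as two small documents: a Service Account annotated with the role it should assume, and an IAM policy limiting that role to reading one bucket. The account ID, role name, and bucket name below are placeholders, and the sketch assumes the IAM roles for service accounts mechanism on EKS.

```python
import json


def service_account_with_role(name, namespace, role_arn):
    """Return a ServiceAccount annotated with the IAM role its Pods assume."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {"eks.amazonaws.com/role-arn": role_arn},
        },
    }


def s3_read_policy(bucket):
    """Return an IAM policy document allowing object reads from one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


# Placeholder account ID, role, and bucket.
account = service_account_with_role(
    "report-reader", "shop",
    "arn:aws:iam::123456789012:role/report-reader",
)
policy = s3_read_policy("example-reports")
print(json.dumps(account, indent=2))
print(json.dumps(policy, indent=2))
```

Pods that run under `report-reader` would then receive temporary credentials for that role only, instead of inheriting whatever permissions the worker node has.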

AWS VPC: Secure Network Connectivity for Your City

Picture connecting different areas of your city with secure, isolated pathways. That’s what AWS Virtual Private Cloud (VPC) provides for your Kubernetes cluster:

- Connecting Cluster Pods to VPC: Configure your cluster to reside within a specific VPC, ensuring Pods have private IP addresses and controlled network access. Imagine buildings in your city having designated addresses and private roads for internal communication.

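- Subnet Placement and Security Groups: Place worker nodes, and therefore their Pods, in specific VPC subnets and apply security groups to control inbound and outbound traffic. By default security groups apply at the node level; EKS can also attach them to individual Pods. Think of these as designated zones and security checkpoints within your city’s network.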

- External Load Balancers and Gateways: Expose your application through VPC endpoints or external load balancers, providing controlled access from the outside world. Imagine having specific gates and public transportation systems connecting your city to external areas.

Integrating VPC with Kubernetes enables secure network isolation, controlled communication, and robust access control for your applications running within your AWS environment.
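When Pods take their IP addresses from the VPC, subnet sizing becomes part of capacity planning. The following sketch uses Python’s standard `ipaddress` module to carve a VPC range into subnets and estimate how many addresses each leaves for nodes and Pods; the CIDR range and subnet size are assumptions, and the five reserved addresses reflect how AWS reserves addresses in every subnet.

```python
import ipaddress
from itertools import islice

# AWS reserves the first four addresses and the last one in each subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr, new_prefix, count):
    """Return (subnet_cidr, usable_addresses) for the first `count` subnets."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = list(islice(vpc.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError("VPC range is too small for the requested subnets")
    return [
        (str(subnet), subnet.num_addresses - AWS_RESERVED_PER_SUBNET)
        for subnet in subnets
    ]


# Hypothetical VPC split into one /20 per Availability Zone.
plan = plan_subnets("10.0.0.0/16", 20, 3)
for cidr, usable in plan:
    print(cidr, usable)
```

A /20 leaves 4,091 usable addresses, which gives a rough upper bound on the nodes and Pods a single subnet can hold.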

Monitoring and Logging in Kubernetes

Effective monitoring and logging are crucial for maintaining a healthy and performing Kubernetes cluster. Let’s explore some key tools and strategies:

Watching Your City Thrive: Kubernetes Monitoring Tools

Imagine having a network of sensors and dashboards to monitor your city’s infrastructure and resource utilization. That’s what Kubernetes monitoring tools offer:

- Prometheus: This popular open-source tool collects metrics from your cluster components (Pods, nodes, etc.) and stores them in a time-series database. Think of it as a central data collection center gathering information from various sensors across your city.

- Grafana: This visualization tool allows you to create insightful dashboards displaying your Prometheus data in real-time. Imagine having interactive dashboards presenting key metrics on energy consumption, traffic flow, and resource usage across your city.

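- Metrics Server: This lightweight add-on collects CPU and memory usage from each node and feeds the Kubernetes autoscalers and the kubectl top command, offering a starting point for smaller deployments. It replaces Heapster, which is deprecated and no longer maintained. Think of it as a basic monitoring system providing essential data on your city’s overall health.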

These tools work together to provide comprehensive insights into your cluster’s health, enabling you to identify and address issues proactively.
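Prometheus collects metrics by scraping a plain-text endpoint that each workload exposes. As a rough sketch of what that text looks like, the function below renders a gauge in the Prometheus exposition format by hand. The metric name and labels are invented for the example, and a real application would more likely use a Prometheus client library than format lines itself.

```python
def render_gauge(name, help_text, samples):
    """Render gauge samples in the Prometheus text exposition format.

    `samples` is a list of (labels_dict, value) pairs.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        if labels:
            label_str = ",".join(
                f'{key}="{val}"' for key, val in sorted(labels.items())
            )
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metric: jobs waiting in each Pod's queue.
text = render_gauge(
    "app_queue_depth", "Jobs waiting",
    [({"pod": "web-0"}, 3), ({"pod": "web-1"}, 7)],
)
print(text, end="")
```

Grafana dashboards are then built from queries over series like `app_queue_depth{pod="web-0"}`.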

Logging the City’s Story: Strategies for Kubernetes Logging

Just like recording events in your city, logging captures the activities within your Kubernetes cluster. Here are some effective strategies:

- Fluentd: This log aggregator collects logs from various sources (Pods, containers) and centralizes them for further processing. Imagine it as a central logging hub gathering reports from different buildings and departments within your city.

- Elasticsearch and Kibana: This powerful duo stores and analyzes your logs, allowing you to search, filter, and visualize them for insights. Think of it as a vast digital library and analysis platform for all your city’s historical records and ongoing activity logs.

- Application-specific logging: Integrate your application’s logging with the cluster-wide logging system to gain a holistic view of application behavior and potential issues. Think of it as collecting reports from individual citizens and officials to understand the overall well-being of your city.

By implementing these strategies, you can gain valuable insights into your application’s behavior, identify errors, and track the overall health of your Kubernetes environment.
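On the application side, the simplest way to cooperate with a cluster-wide collector is to write one structured record per line to standard output. Here is a minimal sketch using only Python’s standard `logging` module; the logger name and message are made up, and the demo writes into an in-memory buffer purely so the result can be inspected.

```python
import io
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(name, stream=sys.stdout):
    """Return a logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False
    return logger


buffer = io.StringIO()
log = build_logger("orders", buffer)
log.info("order %s accepted", "A-1001")
print(buffer.getvalue(), end="")
```

In a container you would keep the default of standard output, where an agent such as Fluentd can pick the lines up and parse the JSON fields without custom patterns.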

Best Practices for Containerization and Kubernetes on AWS

Now that you’ve explored the key concepts, let’s delve into best practices to optimize your containerization and Kubernetes journey on AWS:

Infrastructure as Code: Build Your City with Automation

Imagine having a blueprint for your city, allowing you to easily create and modify its infrastructure. That’s what Infrastructure as Code (IaC) brings to your Kubernetes environment. Tools like Terraform and AWS CloudFormation let you define your infrastructure in code, enabling:

- Declarative configuration: Specify desired infrastructure state (e.g., cluster resources, security groups) instead of manual configuration, simplifying management and reducing errors.

- Version control and reproducibility: Track your infrastructure changes like code, allowing easy rollbacks and consistent deployments across environments.

- Automation and scaling: Automate infrastructure provisioning and scaling based on your needs, ensuring elasticity and cost efficiency.

Adopting IaC empowers you to manage your infrastructure efficiently and consistently, just like having a well-defined blueprint guides the development and growth of your city.
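As a small taste of declarative infrastructure, the sketch below generates a CloudFormation template for a single ECR repository. The logical ID `AppRepository` and the repository name are illustrative assumptions; generating the template from code is just one option, and many teams write such templates directly in YAML or use Terraform instead.

```python
import json


def ecr_repository_template(repository_name):
    """Return a CloudFormation template declaring one ECR repository."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"Container registry for {repository_name}",
        "Resources": {
            "AppRepository": {
                "Type": "AWS::ECR::Repository",
                "Properties": {
                    "RepositoryName": repository_name,
                    "ImageScanningConfiguration": {"ScanOnPush": True},
                },
            }
        },
        "Outputs": {
            "RepositoryUri": {
                "Value": {"Fn::GetAtt": ["AppRepository", "RepositoryUri"]}
            }
        },
    }


template = ecr_repository_template("shop/web")
print(json.dumps(template, indent=2))
```

Because the template is plain text, it can live in version control next to the application, and the same file can create an identical repository in every environment.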

CI/CD Pipelines: Delivering Applications at the Speed of Light

Imagine having a well-oiled system that automatically builds, tests, and deploys your application updates to your city. That’s the power of CI/CD (Continuous Integration/Continuous Delivery) pipelines for containerized applications:

- Continuous integration: Automate code building and testing upon every change, ensuring code quality and identifying issues early. Think of it as having automated construction crews that constantly test the integrity of new structures before integrating them into your city.

- Continuous delivery: Automate deployment of tested code to your Kubernetes cluster, enabling frequent updates and faster time to market. Imagine having a dedicated transportation system that seamlessly delivers new construction materials to the building sites.

- Integration with Kubernetes: Integrate your CI/CD pipeline with tools like Argo CD or Helm to automate deployments and manage application configurations within your cluster. Think of these tools as your city’s traffic control system, ensuring smooth delivery and configuration of new infrastructure components.

Implementing CI/CD pipelines streamlines your development process and accelerates application delivery, making your city more responsive and adaptable to changing needs.
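Stripped of any particular CI product, the delivery half of a pipeline often boils down to a few commands run in order. This sketch assembles them for an image stored in ECR; the account ID, region, repository, tag, and deployment names are placeholders, and the commands are printed rather than executed.

```python
import shlex


def ecr_image_uri(account_id, region, repository, tag):
    """Return the full image reference for a private ECR repository."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def pipeline_commands(image, deployment, container, namespace="default"):
    """Return the build, push, and rollout commands for one release."""
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
        # Point the running Deployment at the new image.
        ["kubectl", "set", "image", f"deployment/{deployment}",
         f"{container}={image}", "-n", namespace],
        # Block until the rollout finishes or fails.
        ["kubectl", "rollout", "status", f"deployment/{deployment}",
         "-n", namespace],
    ]


# Placeholder values; a pipeline would typically use the commit SHA as the tag.
image = ecr_image_uri("123456789012", "us-east-1", "shop/web", "3f2a9c1")
steps = pipeline_commands(image, deployment="web", container="web")
for argv in steps:
    print(shlex.join(argv))
```

Tagging images with the commit that produced them keeps every deployment traceable to a specific change, which also makes rollbacks unambiguous.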

Cost Optimization: Keeping Your City Budget Balanced

Managing your cloud resources efficiently is crucial. Here are some strategies for optimizing costs with containerization and Kubernetes on AWS:

- Right-sizing your instances: Choose appropriate instance types based on your application’s resource requirements, avoiding overprovisioning. Think of using smaller, more efficient buildings instead of oversized structures that consume unnecessary resources.

- Leveraging Spot Instances: Utilize AWS Spot Instances for cost-effective workloads that can tolerate interruptions. Imagine utilizing temporary construction crews for short-term projects, reducing overall labor costs.

- Autoscaling based on demand: Implement autoscaling mechanisms to automatically adjust resources based on traffic or application needs, avoiding idle resources. Think of dynamically adjusting the number of construction workers based on the current construction phase, optimizing resource allocation.

- Monitoring and cost analysis: Utilize monitoring tools to track resource consumption and identify cost optimization opportunities. Analyze your spending patterns and identify areas for improvement, just like analyzing your city’s budget to identify potential savings.

By following these best practices, you can ensure your containerized applications run efficiently and cost-effectively on AWS, optimizing your cloud resources and maximizing your ROI.
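A quick back-of-the-envelope calculation is often enough to decide whether Spot capacity is worth pursuing. The sketch below compares monthly costs for a node group; the hourly prices are invented for illustration and are not real AWS rates.

```python
HOURS_PER_MONTH = 730  # common approximation for one month


def monthly_cost(hourly_price, instance_count, hours=HOURS_PER_MONTH):
    """Return the cost of running `instance_count` instances for `hours`."""
    return hourly_price * instance_count * hours


def spot_savings(on_demand_hourly, spot_hourly, instance_count):
    """Return (amount_saved, fraction_saved) when moving to Spot pricing."""
    on_demand = monthly_cost(on_demand_hourly, instance_count)
    spot = monthly_cost(spot_hourly, instance_count)
    return on_demand - spot, (on_demand - spot) / on_demand


# Hypothetical prices: $0.10/hour On-Demand versus $0.03/hour Spot.
saved, fraction = spot_savings(0.10, 0.03, instance_count=3)
print(f"On-Demand: ${monthly_cost(0.10, 3):.2f} per month")
print(f"Saved with Spot: ${saved:.2f} ({fraction:.0%})")
```

The same arithmetic applies to right-sizing: replace the Spot price with the price of a smaller instance type to see what an oversized node group costs each month.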

Conclusion: Harnessing the Power of AWS Containerization and Kubernetes

Embracing AWS Containerization and Kubernetes unleashes a transformative power in modern application development and deployment. The seamless integration of AWS services with container orchestration platforms facilitates unparalleled scalability, flexibility, and efficiency.

By utilizing AWS ECS and Fargate, developers can manage containers without the burden of infrastructure management. AWS EKS further extends this capability, providing a fully managed Kubernetes service for orchestrating containerized workloads at scale.

Understanding Kubernetes essentials, from its architecture to application deployment and scaling, empowers organizations to harness the full potential of this open-source platform. Advanced concepts such as handling stateful workloads, implementing robust networking, and ensuring security best practices contribute to a resilient and secure Kubernetes environment.

Integrating AWS services with Kubernetes amplifies the capabilities of both ecosystems. IAM integration enhances access control, while VPC connectivity ensures secure communication within the AWS infrastructure. Monitoring tools like Prometheus and Grafana, coupled with logging strategies, provide insights for optimizing and troubleshooting Kubernetes clusters effectively.

Adopting best practices, such as Infrastructure as Code (IaC), CI/CD pipeline integration, and cost optimization strategies, streamlines the development and deployment lifecycle. As organizations navigate the ever-evolving landscape of containerization, Kubernetes on AWS emerges as a dynamic force, propelling innovation, scalability, and reliability.

By embracing these technologies and practices, businesses can confidently navigate the complexities of modern application development, ensuring a resilient and future-ready infrastructure that aligns with the dynamic needs of the digital era. AWS Containerization and Kubernetes signify not just a solution but a transformative journey towards a more agile, scalable, and efficient future.