Introduction to AWS EKS

Definition and Overview of AWS EKS:

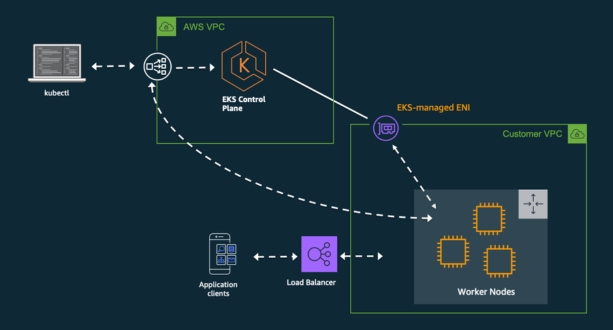

Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service offered by Amazon Web Services (AWS). It simplifies the process of deploying, managing, and scaling Kubernetes clusters in the AWS cloud environment. With EKS, users can leverage the power of Kubernetes without worrying about the complexities of managing the underlying infrastructure.

Evolution of Container Orchestration:

Containerization revolutionized software development by packaging applications and their dependencies into lightweight, portable containers. However, as the number of containers grew, managing and orchestrating them efficiently became a challenge. This led to the evolution of container orchestration platforms, which automate the deployment, scaling, and management of containerized applications across a cluster of nodes.

Role of Kubernetes in Container Management:

Kubernetes emerged as the de facto standard for container orchestration due to its robust features and active community support. It provides a platform-agnostic solution for deploying and managing containerized applications at scale. Some key features of Kubernetes include:

- Automated Deployment: Kubernetes allows users to define the desired state of their applications using YAML or JSON manifests, and it takes care of deploying and maintaining the application in that desired state.

- Scaling: Kubernetes enables horizontal scaling of applications by adding or removing instances based on resource utilization or custom metrics.

- Self-healing: Kubernetes continuously monitors the health of applications and automatically restarts or replaces unhealthy containers to ensure high availability.

- Service Discovery and Load Balancing: Kubernetes provides built-in mechanisms for service discovery and load balancing, allowing applications to communicate with each other seamlessly.

- Resource Management: Kubernetes efficiently allocates compute resources such as CPU and memory to containers based on their resource requests and limits.
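
The desired-state and self-healing behavior in the list above can be pictured as a reconciliation loop. The following is a toy sketch in plain Python, not Kubernetes code: the `reconcile` function and the pod names are hypothetical and only illustrate the idea of converging on a declared replica count.

```python
from itertools import count

_pod_ids = count(1)  # hypothetical source of unique pod names


def reconcile(desired_replicas, running, healthy):
    """Return the pod list after one reconciliation pass.

    Unhealthy pods are dropped, then pods are added or removed until the
    number of running pods matches the desired replica count.
    """
    pods = [p for p in running if p in healthy]  # self-healing: discard failed pods
    while len(pods) < desired_replicas:          # scale up or replace failures
        pods.append(f"pod-{next(_pod_ids)}")
    return pods[:desired_replicas]               # scale down if above the target


pods = reconcile(3, running=[], healthy=set())
print(pods)
pods = reconcile(3, running=pods, healthy=set(pods[:2]))  # the third pod failed
print(pods)
```

The second call replaces the failed pod with a new one while leaving the healthy pods untouched, which is the essence of the self-healing bullet.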

Understanding Kubernetes

Core Concepts:

Pods: Pods are the smallest deployable units in Kubernetes. They are groups of one or more containers (such as Docker containers) that share the same network and storage and are scheduled together on the same host.

Services: Services provide a consistent way to access and consume applications deployed in Kubernetes pods. They abstract away the underlying pod IP addresses and provide load balancing, service discovery, and other networking functionalities.

Deployments: Deployments are a Kubernetes resource used to manage the lifecycle of pods and replica sets. They enable declarative updates to applications by defining desired state and handling the deployment and scaling of application instances.

ReplicaSets: ReplicaSets ensure that a specified number of pod replicas are running at any given time. They help in scaling the number of pod instances up or down based on demand and ensure high availability of applications.
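
To make these concepts concrete, here is a minimal sketch that builds a Deployment and a Service as Python dictionaries and prints them as a JSON manifest. The names (`web`), the label, and the `nginx:1.25` image are illustrative assumptions, not values from this article.

```python
import json

labels = {"app": "web"}  # hypothetical label shared by the pods and the Service

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,  # the Deployment's ReplicaSet keeps three pods running
        "selector": {"matchLabels": labels},
        "template": {  # pod template: every replica is created from this
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "nginx:1.25",  # example image
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": labels,  # routes to any pod carrying these labels
        "ports": [{"port": 80, "targetPort": 80}],
    },
}

# Wrapping both objects in a v1 List lets one JSON file hold them together.
manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
print(json.dumps(manifest, indent=2))
```

Saving the output to a file and running `kubectl apply -f` on it would create both objects; the Service finds the pods purely through the shared label, not through pod IP addresses.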

Benefits of Kubernetes Orchestration:

Scalability: Kubernetes enables effortless scaling of applications by allowing dynamic allocation of resources based on demand. It can scale pods horizontally (adding more instances) or vertically (increasing resources for existing instances).

High Availability: Kubernetes automates the process of ensuring high availability by managing pod replicas and distributing them across multiple nodes. It can automatically restart failed pods and reschedule them onto healthy nodes.

Portability: Kubernetes provides a consistent platform for deploying, managing, and scaling containerized applications across different environments, including on-premises data centers, public clouds, and hybrid clouds.

Resource Efficiency: Kubernetes optimizes resource utilization by packing multiple containers onto nodes efficiently. It also provides features like resource quotas and limits to prevent resource wastage and ensure fair allocation.

Challenges in Managing Kubernetes Clusters:

Complexity: Kubernetes has a steep learning curve due to its complex architecture and multitude of concepts. Managing clusters requires understanding various components such as pods, services, deployments, and networking configurations.

Resource Management: Efficiently managing resources in Kubernetes clusters can be challenging, especially in large-scale deployments. It requires continuous monitoring, tuning, and optimization to prevent resource contention and ensure optimal performance.

Networking and Connectivity: Setting up and managing networking and connectivity in Kubernetes clusters can be complex, particularly in multi-cluster or hybrid-cloud environments. Issues such as network overlay configuration, service discovery, and load balancing require careful consideration.

Security: Kubernetes introduces new security challenges, such as securing container images, managing access controls, and securing communication between cluster components. Ensuring compliance, enforcing policies, and detecting and mitigating vulnerabilities are critical aspects of Kubernetes security.

Getting Started with AWS EKS

Setting up an EKS Cluster:

- Start by logging into your AWS Management Console and navigating to the EKS service.

- Create a new EKS cluster by specifying details such as the region, Kubernetes version, instance types for worker nodes, and networking configuration.

- You can use AWS CloudFormation or eksctl, the official command-line tool for EKS, to automate the cluster creation process.

- Once the cluster is created, you’ll receive the necessary configuration details such as the Kubernetes API server endpoint and authentication credentials.
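
As a rough illustration of the command-line route, the sketch below assembles an `eksctl create cluster` invocation without running it. The cluster name, region, Kubernetes version, and instance type are placeholder assumptions; check which versions and instance types are available in your account before using them.

```python
import shlex


def eksctl_create_command(name, region, version, node_type, nodes):
    """Build (but do not run) an `eksctl create cluster` command line."""
    return [
        "eksctl", "create", "cluster",
        "--name", name,
        "--region", region,
        "--version", version,                   # Kubernetes version (placeholder)
        "--nodegroup-name", f"{name}-workers",  # hypothetical node group name
        "--node-type", node_type,               # EC2 instance type for worker nodes
        "--nodes", str(nodes),                  # initial number of worker nodes
        "--managed",                            # use an EKS managed node group
    ]


cmd = eksctl_create_command("demo", "us-east-1", "1.29", "t3.medium", 2)
print(shlex.join(cmd))
```

Once a cluster exists, `aws eks update-kubeconfig --region <region> --name <cluster>` writes the API server endpoint and authentication settings into your local kubeconfig so that `kubectl` can reach the cluster.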

Integrating with AWS Services:

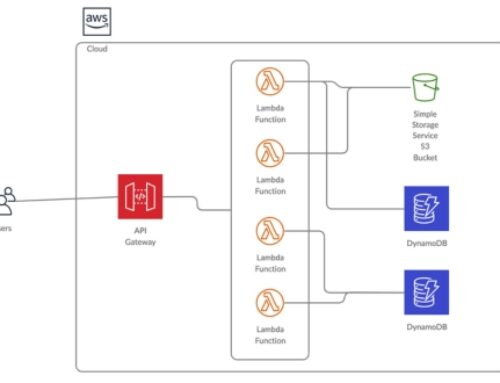

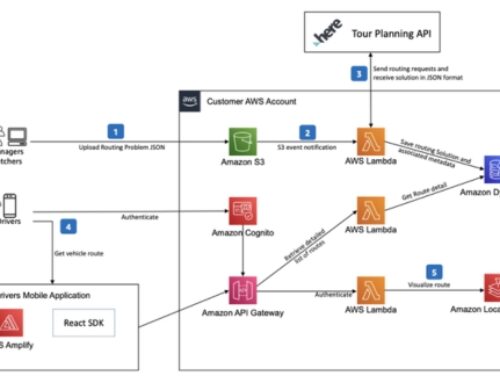

- EKS integrates seamlessly with various AWS services to enhance the capabilities of your Kubernetes workloads.

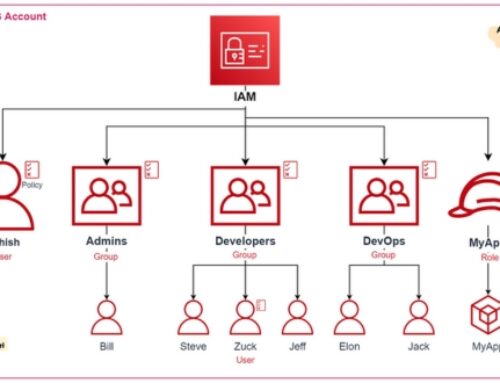

- AWS Identity and Access Management (IAM) can be used to control access to your EKS clusters and resources.

- Amazon ECR (Elastic Container Registry) provides a secure and scalable repository for storing Docker container images, which can be easily integrated with EKS for deploying applications.

- Amazon RDS (Relational Database Service), Amazon S3 (Simple Storage Service), Amazon DynamoDB, and other AWS database and storage services can be used as backend data stores for applications running on EKS.

- Amazon CloudWatch and AWS X-Ray can be used for monitoring and troubleshooting your EKS clusters and applications.
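
For the ECR integration in particular, pods pull images by referencing the registry's URI in their container spec. A small sketch of how that reference is formed follows; the account ID, region, repository, and tag are made-up example values.

```python
def ecr_image_uri(account_id, region, repository, tag):
    """Format an image reference for a private Amazon ECR repository."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


# Placeholder account ID and repository name, for illustration only.
image = ecr_image_uri("123456789012", "us-east-1", "web", "v1")
print(image)
```

The resulting string is what you would put in the `image` field of a container in a pod template.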

Security Best Practices:

- Implement least privilege access control using IAM roles and policies to restrict access to EKS resources.

- Utilize AWS VPC (Virtual Private Cloud) to isolate your EKS clusters from other network resources and apply security groups and network ACLs to control inbound and outbound traffic.

- Enable encryption at rest and in transit for data stored and transmitted by your EKS applications using services like AWS Key Management Service (KMS) and AWS Certificate Manager.

- Regularly update and patch your EKS clusters and worker nodes to mitigate security vulnerabilities.

- Implement Kubernetes RBAC (Role-Based Access Control) to control access to Kubernetes resources within your clusters.

- Use AWS CloudTrail to log API calls and AWS Config to assess, audit, and evaluate the configurations of your EKS clusters for compliance with security best practices.
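
As one example of least privilege inside the cluster, the sketch below defines a read-only Kubernetes RBAC Role for pods and binds it to a single user. The namespace `dev`, the role name, and the user `jane` are hypothetical.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"

role = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "dev"},
    "rules": [
        {
            "apiGroups": [""],                  # "" is the core API group
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],  # read-only access
        }
    ],
}

binding = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "read-pods", "namespace": "dev"},
    "subjects": [{"kind": "User", "name": "jane", "apiGroup": RBAC_GROUP}],
    "roleRef": {"kind": "Role", "name": role["metadata"]["name"], "apiGroup": RBAC_GROUP},
}

manifest = {"apiVersion": "v1", "kind": "List", "items": [role, binding]}
print(json.dumps(manifest, indent=2))
```

Because the Role is namespaced and lists only read verbs, the bound user can inspect pods in `dev` but cannot modify them or see other namespaces.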

Managing EKS Clusters

Scaling EKS Clusters:

- Horizontal Scaling: EKS allows you to horizontally scale your Kubernetes clusters by adding or removing worker nodes dynamically based on the workload requirements. You can configure EC2 Auto Scaling groups to automatically adjust the number of nodes in the cluster based on metrics like CPU or memory utilization.

- Vertical Scaling: Additionally, you can vertically scale individual nodes within the cluster by adjusting their instance type or size to handle increased resource demands of specific workloads.

Monitoring and Logging:

- Monitoring: Monitoring EKS clusters involves tracking various metrics such as CPU utilization, memory usage, network traffic, and cluster health. AWS provides integration with Amazon CloudWatch, which allows you to monitor EKS clusters and their underlying resources effectively. You can set up custom dashboards, alarms, and metrics to gain insights into the performance and health of your clusters.

- Logging: Logging is crucial for troubleshooting issues and maintaining the security of your EKS clusters. EKS supports integration with services like Amazon CloudWatch Logs and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) for centralized logging. You can configure logging for Kubernetes control plane components, containers, and applications running on the cluster to capture events, errors, and other important information.
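
For control plane logging specifically, EKS lets you choose which log types are sent to CloudWatch Logs. The sketch below builds the JSON structure accepted by the `--logging` option of `aws eks update-cluster-config`; the helper name `logging_config` is an assumption made for this example.

```python
import json

# The control plane log types that EKS can export.
LOG_TYPES = ["api", "audit", "authenticator", "controllerManager", "scheduler"]


def logging_config(enabled_types):
    """Return a logging configuration that enables only the given log types."""
    unknown = set(enabled_types) - set(LOG_TYPES)
    if unknown:
        raise ValueError(f"unknown log types: {sorted(unknown)}")
    disabled = [t for t in LOG_TYPES if t not in enabled_types]
    config = [{"types": list(enabled_types), "enabled": True}]
    if disabled:
        config.append({"types": disabled, "enabled": False})
    return {"clusterLogging": config}


cfg = logging_config(["api", "audit"])
print(json.dumps(cfg))
```

The printed JSON could then be passed as `aws eks update-cluster-config --name <cluster> --logging '<json>'`. Enabling only the types you need keeps CloudWatch Logs ingestion costs down.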

High Availability and Disaster Recovery:

- High Availability: Ensuring high availability involves designing your EKS clusters with redundancy and fault tolerance to minimize downtime and ensure continuity of operations. This includes spreading the cluster's subnets across multiple Availability Zones (AZs) within a region, distributing worker nodes and pod replicas across those AZs, and relying on the EKS-managed control plane, which AWS runs (together with etcd) across multiple AZs for resilience.

- Disaster Recovery: Disaster recovery strategies aim to protect your EKS clusters and their data in the event of catastrophic failures or outages. This may involve implementing backup and restore mechanisms for critical data, replicating clusters across different regions, and establishing procedures for failover and recovery.
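
One way to distribute pod replicas across AZs is a topology spread constraint in the pod spec. The fragment below is a sketch with a hypothetical `app: web` label, and `zone_skew` is a small helper written for this example to show what `maxSkew` measures.

```python
import json

# Fragment of a pod spec: keep replicas evenly spread across zones.
pod_spec_fragment = {
    "topologySpreadConstraints": [
        {
            "maxSkew": 1,                                  # zones may differ by at most one pod
            "topologyKey": "topology.kubernetes.io/zone",  # well-known zone label on nodes
            "whenUnsatisfiable": "DoNotSchedule",
            "labelSelector": {"matchLabels": {"app": "web"}},
        }
    ]
}


def zone_skew(pods_per_zone):
    """Difference between the most and least populated zones."""
    counts = list(pods_per_zone.values())
    return max(counts) - min(counts)


print(json.dumps(pod_spec_fragment, indent=2))
print(zone_skew({"us-east-1a": 2, "us-east-1b": 2, "us-east-1c": 1}))
```

With `maxSkew` set to 1, a placement of 2, 2, and 1 pods across three zones is acceptable, while 3, 1, and 1 would not be.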

Cost Optimization in AWS EKS

- Understanding Pricing Models: AWS EKS pricing is based on several factors, including the EC2 instances powering the worker nodes, EKS control plane costs, data transfer fees, storage costs, and any additional services or features utilized within the EKS environment. It’s essential to have a clear understanding of how these factors contribute to the overall cost of running EKS clusters.

- Cost Management Strategies: Implementing cost management strategies involves optimizing resource utilization, rightsizing instances, leveraging auto-scaling capabilities, utilizing Spot Instances for fault-tolerant or non-production workloads, and monitoring and optimizing data transfer costs. By closely monitoring resource usage and making adjustments accordingly, you can ensure that you’re not overspending on unnecessary resources.

- Reserved Instances and Savings Plans: Reserved Instances (RIs) and Savings Plans are purchasing options offered by AWS that provide significant discounts compared to on-demand pricing. With RIs, you commit to a specific instance type in a particular region for a one- or three-year term, in exchange for a discounted hourly rate. Savings Plans offer similar savings with more flexibility: you commit to a certain amount of compute spend in dollars per hour for a one- or three-year term. Compute Savings Plans apply across instance families, sizes, and regions, while EC2 Instance Savings Plans apply to a chosen instance family in a region. Both RIs and Savings Plans can lead to substantial cost savings for predictable workloads or steady-state applications.

By combining a thorough understanding of AWS EKS pricing models with effective cost management strategies and the strategic use of RIs and Savings Plans, organizations can optimize their costs while still benefiting from the scalability, flexibility, and reliability of Kubernetes on AWS. Regular monitoring and adjustments are key to ensuring ongoing cost optimization as workload requirements evolve over time.
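
The arithmetic behind such an estimate is simple enough to sketch. In the example below every rate and the discount are placeholder assumptions chosen for round numbers, not actual AWS prices; substitute current figures from the AWS pricing pages.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def monthly_cost(control_plane_rate, node_rate, node_count, discount=0.0):
    """Estimate monthly cost in dollars.

    Rates are dollars per hour. The discount (0.0 to 1.0) models a commitment
    such as an RI or Savings Plan and applies to node compute only.
    """
    control_plane = control_plane_rate * HOURS_PER_MONTH
    nodes = node_rate * node_count * HOURS_PER_MONTH * (1 - discount)
    return round(control_plane + nodes, 2)


# Placeholder rates: 0.10 $/h control plane, 0.05 $/h per node, three nodes.
on_demand = monthly_cost(0.10, 0.05, 3)
committed = monthly_cost(0.10, 0.05, 3, discount=0.30)  # assumed 30% discount
print(on_demand, committed, round(on_demand - committed, 2))
```

Even this crude model shows that the per-cluster control plane charge is fixed, so savings come mainly from the node fleet: rightsizing, scaling down, or committing to steady usage.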

Deployment Strategies on AWS EKS

Blue-Green Deployments:

- In a blue-green deployment, you maintain two identical production environments, typically referred to as blue and green.

- At any given time, only one of these environments serves live traffic (let’s say blue).

- When a new version of your application is ready to deploy, you deploy it to the green environment.

- After thorough testing and verification in the green environment, you switch the router or load balancer to direct traffic to the green environment instead of the blue environment, making the new version live.

- If any issues arise, you can quickly switch back to the blue environment, ensuring minimal downtime and risk to end-users.
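
On Kubernetes, one common way to implement the switch is to run blue and green as separate Deployments and change the label selector of the Service in front of them. The sketch below models that switch on a Service manifest held as a dictionary; the `version` label and the `switch_traffic` helper are assumptions for illustration.

```python
import copy

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        # Currently live: pods labeled version=blue.
        "selector": {"app": "web", "version": "blue"},
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}


def switch_traffic(svc, target):
    """Return a copy of the Service whose selector points at `target`."""
    if target not in ("blue", "green"):
        raise ValueError("target must be 'blue' or 'green'")
    updated = copy.deepcopy(svc)  # leave the original manifest untouched
    updated["spec"]["selector"]["version"] = target
    return updated


live = switch_traffic(service, "green")  # cut over to the new version
rollback = switch_traffic(live, "blue")  # revert if problems appear
print(live["spec"]["selector"], rollback["spec"]["selector"])
```

Applying the updated Service redirects all traffic at once, and because the blue Deployment keeps running, rolling back is the same one-field change in the other direction.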

Canary Deployments:

- Canary deployments are a more gradual approach to rolling out changes to production.

- In this strategy, you deploy the new version of your application to a small subset of your infrastructure or user base, often referred to as the canary group or canary users.

- You monitor the performance and behavior of the new version in the canary environment. If it performs well without any issues, you gradually increase the percentage of traffic directed to this new version.

- If issues are detected, you can quickly roll back the deployment for the canary group, minimizing the impact on your overall user base.
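
A simple way to approximate a canary on Kubernetes, assuming no service mesh or weighted load balancer, is to run stable and canary Deployments behind one Service so that traffic is shared roughly in proportion to replica counts. The helper below is a sketch of that replica arithmetic; `canary_split` is a hypothetical name.

```python
import math


def canary_split(total_replicas, canary_percent):
    """Split replicas between the stable and canary Deployments.

    The canary count is rounded up so that any non-zero percentage
    gets at least one pod.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    canary = math.ceil(total_replicas * canary_percent / 100)
    return total_replicas - canary, canary


# Gradually shift a 10-replica service toward the new version.
for percent in (10, 25, 50, 100):
    stable, canary = canary_split(10, percent)
    print(f"{percent}% -> stable={stable}, canary={canary}")
```

This approach is coarse, since the achievable percentages depend on the replica count. Finer-grained traffic shifting usually requires weighted routing at the load balancer or a service mesh.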

Rolling Updates:

- Rolling updates involve gradually replacing instances of the old version of your application with instances of the new version.

- In the context of AWS EKS, Kubernetes manages rolling updates by incrementally updating pods within a deployment.

- Kubernetes ensures that a certain number of pods are available and healthy during the update process, preventing downtime or service interruption.

- The rolling update strategy allows for a smooth transition from the old version to the new version while maintaining application availability.
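
The availability guarantee comes from two Deployment settings, `maxSurge` and `maxUnavailable`, which both default to 25%. The sketch below computes the bounds they imply, following the documented rounding rules (surge rounds up, unavailable rounds down); the function name is an assumption for this example.

```python
import math


def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return the pod-count bounds Kubernetes maintains during a rolling update."""

    def resolve(value, round_up):
        # Values may be absolute numbers or percentages of the replica count.
        if isinstance(value, str) and value.endswith("%"):
            exact = replicas * int(value[:-1]) / 100
            return math.ceil(exact) if round_up else math.floor(exact)
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    return {
        "min_available": replicas - unavailable,  # pods guaranteed to be serving
        "max_total": replicas + surge,            # old and new pods combined
    }


print(rolling_update_bounds(10))
print(rolling_update_bounds(10, max_surge=1, max_unavailable=0))
```

Setting `maxUnavailable` to 0 with a `maxSurge` of 1 gives the most conservative rollout: a new pod must become ready before an old one is removed.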

Securing AWS EKS Workloads

Identity and Access Management (IAM) Integration:

- IAM integration involves controlling access to AWS resources and services. With EKS, IAM roles are used to grant permissions to Kubernetes service accounts, which in turn control access to AWS resources.

- Implement least privilege principles by assigning only necessary permissions to users, roles, and service accounts.

- Utilize IAM roles for service accounts (IRSA) to grant fine-grained permissions to pods running in your EKS clusters, reducing the need for IAM credentials within the cluster.

- Regularly review and audit IAM policies to ensure they align with security best practices and organizational policies.
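
With IRSA, the link between a Kubernetes service account and an IAM role is the role's trust policy. The sketch below generates such a policy; the account ID, OIDC provider ID, namespace, and service account name are placeholders, and `irsa_trust_policy` is a helper invented for this example.

```python
import json


def irsa_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Trust policy letting one Kubernetes service account assume an IAM role.

    `oidc_provider` is the cluster's OIDC issuer without the https:// prefix.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Restrict the role to exactly one service account.
                        f"{oidc_provider}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        f"{oidc_provider}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }


policy = irsa_trust_policy(
    "123456789012",                                   # placeholder account ID
    "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLEID",  # placeholder issuer
    "prod",
    "s3-reader",
)
print(json.dumps(policy, indent=2))
```

The service account is then annotated with `eks.amazonaws.com/role-arn` set to the role's ARN, so pods using it receive temporary credentials for that role only.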

Network Security Best Practices:

- Implement network policies using tools like Calico or Amazon VPC CNI (Container Network Interface) to control inbound and outbound traffic between pods.

- Utilize AWS security groups and network ACLs to restrict traffic to and from EKS clusters.

- Enable encryption in transit using Transport Layer Security (TLS) for communication within the cluster and between clients and the cluster.

- Rely on strong authentication mechanisms for accessing the Kubernetes API server, such as IAM-based authentication or an OIDC identity provider, and restrict network access to the API server endpoint.

- Regularly monitor network traffic within the cluster for any anomalies or unauthorized access attempts.
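
As an illustration of the first item, the sketch below builds a Kubernetes NetworkPolicy that lets only frontend pods reach backend pods on one port. The namespace, labels, and port are hypothetical, and the policy only takes effect if the cluster's network plugin enforces network policies.

```python
import json

policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "backend-allow-frontend", "namespace": "prod"},
    "spec": {
        # The policy applies to backend pods...
        "podSelector": {"matchLabels": {"app": "backend"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                # ...and admits traffic only from frontend pods in the same namespace,
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                # on a single TCP port.
                "ports": [{"protocol": "TCP", "port": 8080}],
            }
        ],
    },
}

print(json.dumps(policy, indent=2))
```

Once a pod is selected by any ingress policy, all ingress traffic not explicitly allowed is dropped, so this one object also blocks every other source from reaching the backend.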

Compliance and Regulatory Considerations:

- Understand and adhere to relevant compliance standards such as GDPR, HIPAA, SOC 2, etc., based on the nature of your workloads and data being processed.

- Implement controls and safeguards to ensure data confidentiality, integrity, and availability.

- Maintain audit logs for activities within the cluster and ensure they are stored securely and accessible for compliance audits.

- Regularly conduct vulnerability assessments and penetration testing to identify and remediate security weaknesses.

- Stay informed about changes in compliance requirements and adjust security measures accordingly.

Integrating AWS EKS with CI/CD Pipelines

Integrating AWS EKS (Elastic Kubernetes Service) with CI/CD (Continuous Integration/Continuous Deployment) pipelines is a crucial step in modern software development practices, especially for applications deployed on Kubernetes clusters.

AWS EKS (Elastic Kubernetes Service): AWS EKS is a fully managed Kubernetes service provided by Amazon Web Services. It allows you to easily deploy, manage, and scale containerized applications using Kubernetes on AWS infrastructure. With EKS, you can run Kubernetes without needing to install, operate, and maintain your own Kubernetes control plane; worker nodes remain your responsibility unless you use options such as managed node groups or AWS Fargate.

CI/CD Pipelines:

- Continuous Integration (CI): CI is a development practice where developers integrate their code changes into a shared repository frequently (ideally multiple times a day). Each integration triggers automated builds and tests to detect integration errors early.

- Continuous Deployment (CD): CD extends CI by automatically deploying all code changes to a testing or production environment after the build stage. It ensures that code changes are consistently and safely deployed to the target environment.

Automation with AWS CodePipeline and CodeBuild:

- AWS CodePipeline: AWS CodePipeline is a fully managed continuous integration and continuous delivery service that automates the build, test, and deployment phases of your release process. It allows you to define a series of stages and actions to model your release process, including source code repositories, build systems, testing frameworks, and deployment targets.

- AWS CodeBuild: AWS CodeBuild is a fully managed build service that compiles source code, runs tests, and produces deployable artifacts. It allows you to build your code in a containerized environment based on Docker images. CodeBuild integrates seamlessly with CodePipeline to automate the build process within your CI/CD pipelines.

Version Control and Continuous Deployment:

- Version Control: Version control systems such as Git are essential for tracking changes to your codebase, enabling collaboration among developers, and ensuring the integrity of your code. By integrating version control with CI/CD pipelines, developers can trigger automated builds and deployments based on code changes committed to the repository.

- Continuous Deployment: Continuous Deployment automates the deployment of code changes to production environments after passing through the CI process. It allows organizations to release new features and bug fixes rapidly while maintaining a high level of confidence in the stability and reliability of their applications.

Integrating AWS EKS with CI/CD pipelines involves automating the build, test, and deployment processes using AWS services like CodePipeline and CodeBuild. This integration enables teams to deliver software changes to Kubernetes clusters efficiently and reliably, fostering a culture of continuous delivery and innovation.
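
The deployment stage of such a pipeline often boils down to a few CLI calls. The sketch below only assembles those commands as strings; it does not run them. The cluster, deployment, and container names and the commit-tagged image are assumptions for illustration.

```python
import shlex


def deploy_commands(cluster, region, deployment, container, image):
    """Commands a build job might run to roll out a new image to EKS."""
    return [
        # Fetch cluster endpoint and credentials into the local kubeconfig.
        ["aws", "eks", "update-kubeconfig", "--name", cluster, "--region", region],
        # Point the Deployment's container at the freshly built image.
        ["kubectl", "set", "image", f"deployment/{deployment}", f"{container}={image}"],
        # Block until the rollout completes, or fail the pipeline on timeout.
        ["kubectl", "rollout", "status", f"deployment/{deployment}", "--timeout=120s"],
    ]


commit = "3f2a9c1"  # placeholder commit SHA used as the image tag
image = f"123456789012.dkr.ecr.us-east-1.amazonaws.com/web:{commit}"
commands = deploy_commands("demo", "us-east-1", "web", "web", image)
for command in commands:
    print(shlex.join(command))
```

Tagging images with the commit SHA rather than `latest` ties every rollout to a specific revision in version control, which makes rollbacks and audits straightforward.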

Advanced Topics in AWS EKS

Autoscaling Workloads:

Autoscaling in AWS EKS allows you to dynamically adjust the number of pods and worker nodes running in your Kubernetes cluster based on workload demands. This ensures optimal resource utilization and maintains application performance under varying levels of traffic. In AWS EKS, autoscaling can be achieved through the Kubernetes Horizontal Pod Autoscaler (HPA) and the Cluster Autoscaler.

- Horizontal Pod Autoscaler (HPA): This Kubernetes feature automatically adjusts the number of pod replicas in a workload such as a Deployment based on observed CPU utilization or custom metrics. In AWS EKS, you can configure HPA to scale pods within your cluster horizontally, adding or removing replicas as needed to keep those metrics near their targets.

- Cluster Autoscaler: This component automatically adjusts the size of your Kubernetes cluster by adding or removing worker nodes based on the resource demands of your pods. In AWS EKS, you can enable Cluster Autoscaler to dynamically scale the underlying EC2 instances in your cluster to accommodate increased workload demands.
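
The Kubernetes documentation describes the HPA's core calculation as the current replica count scaled by the ratio of the observed metric to its target, rounded up. The sketch below implements just that formula plus the min/max clamp; it deliberately omits details such as the tolerance band and stabilization windows, and the parameter names are assumptions.

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core HPA formula: ceil(current * currentMetric / targetMetric), clamped."""
    raw = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Four replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# The same four replicas averaging 30% CPU: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))
```

If the HPA asks for more pods than the existing nodes can hold, the new pods stay pending, and that is the signal the Cluster Autoscaler uses to add worker nodes.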

Container Networking:

Container networking in AWS EKS refers to the network configuration and communication between containers running within your Kubernetes cluster. Efficient networking is crucial for ensuring that containers can communicate with each other, access external resources, and maintain security. AWS EKS supports various networking options, including Amazon VPC CNI (Container Network Interface) and third-party CNI plugins like Calico and Weave.

- Amazon VPC CNI: This is the default networking mode for AWS EKS clusters. It enables each pod in your cluster to have its own IP address within the VPC (Virtual Private Cloud) network, allowing for secure and isolated communication between pods.

- Third-party CNI Plugins: AWS EKS allows you to integrate with third-party CNI plugins like Calico or Weave, which offer additional networking features such as network policies for fine-grained control over traffic flow and security within your Kubernetes cluster.
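
Because the VPC CNI gives each pod a VPC IP address, the number of pods a node can host is bounded by the network interfaces (ENIs) and addresses its instance type supports. The sketch below applies the commonly cited formula for the plugin's default mode (without prefix delegation); the ENI figures in the example are assumed values for an `m5.large` and should be checked against the EC2 documentation.

```python
def max_pods(enis, ipv4_per_eni):
    """Approximate pod capacity of a node under the default VPC CNI mode.

    Each ENI reserves one address for itself; the +2 accounts for pods
    that use host networking and so do not consume a VPC address.
    """
    return enis * (ipv4_per_eni - 1) + 2


# Assumed limits for an m5.large: 3 ENIs with 10 IPv4 addresses each.
print(max_pods(3, 10))
```

This limit, rather than CPU or memory, is sometimes what caps pod density on small instance types, which is worth keeping in mind when rightsizing nodes.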

Customizing Kubernetes Control Plane:

The Kubernetes control plane consists of various components responsible for managing the cluster’s state, scheduling workloads, and maintaining overall cluster health. In AWS EKS, while the control plane is managed by AWS, you have the ability to customize certain aspects to suit your specific requirements.

- Custom Authentication and Authorization: AWS EKS authenticates users through AWS IAM (Identity and Access Management) and can also be associated with an external OIDC (OpenID Connect) identity provider; directories such as LDAP (Lightweight Directory Access Protocol) are typically connected through an OIDC-compatible provider rather than directly. You can also configure RBAC (Role-Based Access Control) policies to control access to cluster resources based on user roles and permissions.

- Custom Add-ons and Controllers: You can deploy custom add-ons and controllers to extend the functionality of your Kubernetes control plane. This includes deploying monitoring and logging solutions, service meshes, or custom controllers for managing specialized workloads or resources within your cluster.

Conclusion

AWS EKS provides a scalable platform for deploying and managing containerized applications using Kubernetes. Kubernetes itself is renowned for its scalability, allowing you to effortlessly scale your applications up or down based on demand. With AWS EKS, you can leverage AWS’s vast infrastructure to scale your Kubernetes clusters horizontally by adding or removing worker nodes as needed.

With AWS EKS, Amazon manages the Kubernetes control plane for you, including upgrades and patches, while you focus on managing your applications and worker nodes. Self-managed Kubernetes requires you to handle all aspects of cluster management, including control plane maintenance.

The key components of AWS EKS include the control plane, which runs the Kubernetes API server, etcd, and other control plane components, and worker nodes, which are EC2 instances that run your containerized applications.

AWS EKS allows you to scale your cluster by adding or removing worker nodes dynamically using the AWS Management Console, CLI, or API. It also supports autoscaling based on metrics such as CPU and memory usage.

AWS EKS pricing includes charges for the EC2 instances running your worker nodes, a per-cluster hourly charge for the EKS control plane, and charges for any other AWS services you use in conjunction with EKS.

AWS EKS is compatible with standard Kubernetes APIs and tools, so you can use tools like kubectl, Helm, and Kubernetes Dashboard with EKS clusters.