Introduction

In the era of big data, organizations are constantly seeking efficient ways to process and analyze vast amounts of data to derive valuable insights. Google Cloud Platform (GCP) provides a powerful and scalable solution for data processing in the form of Google Cloud Dataflow. In this comprehensive guide, we will delve into the intricacies of Google Cloud Dataflow, exploring its key features, architecture, use cases, and best practices.

Understanding Google Cloud Dataflow

What is Google Cloud Dataflow?

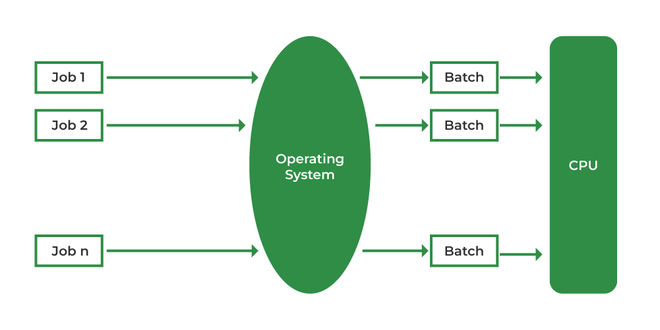

Google Cloud Dataflow stands as a sophisticated, fully managed service tailored for both stream and batch processing of data. This service empowers developers to craft, deploy, and execute data processing pipelines efficiently and at scale. A pivotal aspect of Google Cloud Dataflow is its foundation on Apache Beam, an open-source model that unifies the approach to both batch and streaming data processing.

Key Features

- Unified Model: One of the standout features of Google Cloud Dataflow is its provision of a unified programming model for both batch and stream processing. This unified approach simplifies the development process, allowing developers to seamlessly transition between processing modes without the need for a significant shift in their coding paradigm.

- Managed Service: Google Cloud Dataflow operates as a fully managed service, relieving developers of the burdens associated with infrastructure management. It takes charge of tasks such as scaling and optimization, freeing up developers to focus their efforts on crafting effective data processing logic rather than delving into the intricacies of infrastructure management.

- Serverless: A distinctive characteristic of Dataflow is its serverless nature. Developers are spared the need to provision or manage servers manually. The service dynamically scales based on the workload at hand, providing a serverless experience where resources are automatically allocated and deallocated, aligning with the processing demands.

- Integration with GCP Services: Google Cloud Dataflow seamlessly integrates with other Google Cloud Platform (GCP) services, fostering a comprehensive and interconnected data processing ecosystem. Integration with prominent GCP services like BigQuery, Cloud Storage, and Pub/Sub enables a cohesive workflow, allowing users to leverage various tools and services within the GCP suite for a holistic data processing solution. This integration enhances flexibility and efficiency, as data can flow seamlessly between different services within the GCP environment.

Understanding GCP Cloud Monitoring

Overview of GCP Cloud Monitoring

Google Cloud Platform’s (GCP) Cloud Monitoring is a comprehensive solution strategically designed to offer visibility into the performance and health of applications and services deployed on the Google Cloud infrastructure. This monitoring tool provides users with a unified and cohesive experience, empowering them to seamlessly collect, analyze, and visualize data originating from various sources. By leveraging GCP Cloud Monitoring, organizations gain insights that are instrumental in optimizing their applications, enhancing reliability, and ensuring the efficient operation of services within the Google Cloud environment.

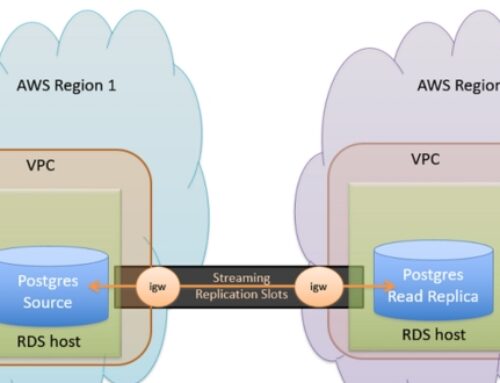

Architecture of Google Cloud Dataflow

Components of Dataflow Pipeline

Google Cloud Dataflow, a fully managed service for stream and batch processing, is built around three fundamental components within its pipeline structure:

- Source: This defines the point from which the pipeline retrieves its data.

- Transform: Represents the operations or processing applied to the data within the pipeline.

- Sink: Specifies the destination where the processed data is written to, completing the data flow.

These components collectively shape the flow and behavior of the pipeline, allowing developers to create tailored data processing workflows.
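The three roles can be pictured with a small plain-Python model. This is only an illustrative sketch and not the Apache Beam API: the function names (`read_source`, `parse_amount`, `write_sink`) and the `order_id,amount` record format are assumptions made up for the example.

```python
from typing import Iterable, Iterator, List


def read_source(lines: Iterable[str]) -> Iterator[str]:
    """Source: yield raw records one at a time (stands in for a file or topic)."""
    for line in lines:
        yield line


def parse_amount(records: Iterable[str]) -> Iterator[float]:
    """Transform: turn hypothetical 'order_id,amount' text into a numeric amount."""
    for record in records:
        _order_id, amount = record.split(",")
        yield float(amount)


def write_sink(values: Iterable[float], sink: List[float]) -> None:
    """Sink: append each processed value to the destination list."""
    for value in values:
        sink.append(value)


def run_pipeline(lines: Iterable[str]) -> List[float]:
    """Wire source -> transform -> sink and return what reached the sink."""
    sink: List[float] = []
    write_sink(parse_amount(read_source(lines)), sink)
    return sink
```

Calling `run_pipeline(["a1,10.5", "a2,4"])` pushes both records through all three stages. In a real pipeline the same roles would be played by Beam I/O connectors and transforms.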

Workflow Execution

Dataflow orchestrates the execution of pipelines through a meticulous two-step process:

- Pipeline Construction: Developers employ the Apache Beam SDK to write code that outlines the pipeline’s structure, including its data source, transformations, and data sink.

- Pipeline Execution: Once constructed, Dataflow optimizes the execution plan, distributing it across multiple worker nodes. The system adeptly manages the entire lifecycle of pipeline execution, ensuring scalability and efficiency.
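The split between construction and execution can be sketched in plain Python. The `SketchPipeline` class below is a hypothetical stand-in, not a Beam or Dataflow class: it only records steps while the pipeline is being built and touches the data once `run()` is called. A real runner would also optimize and distribute the plan, which this sketch does not attempt.

```python
from typing import Any, Callable, Iterable, List


class SketchPipeline:
    """Records steps at construction time and runs them only on run()."""

    def __init__(self, source: Iterable[Any]) -> None:
        self._source = source
        self._steps: List[Callable[[Any], Any]] = []

    def apply(self, step: Callable[[Any], Any]) -> "SketchPipeline":
        # Construction phase: remember the step, do not touch the data yet.
        self._steps.append(step)
        return self

    def run(self) -> List[Any]:
        # Execution phase: push every element through the recorded steps.
        results = []
        for element in self._source:
            for step in self._steps:
                element = step(element)
            results.append(element)
        return results


calls: List[int] = []


def traced_double(x: int) -> int:
    """Double the input and record that the step actually ran."""
    calls.append(x)
    return x * 2


pipeline = SketchPipeline([1, 2, 3]).apply(traced_double).apply(lambda x: x + 1)
constructed_without_running = (calls == [])  # nothing has executed so far
output = pipeline.run()
```

The `calls` list stays empty until `run()` is invoked, which is the point of the two-phase design: the whole plan is known before any data is processed.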

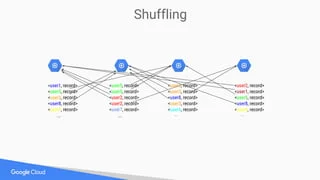

Dataflow Shuffle

Central to understanding Dataflow’s processing mechanics is the concept of “Dataflow Shuffle.” This term encapsulates the redistribution of data across worker nodes during the execution of a pipeline. Efficient management of data shuffling is pivotal for optimizing overall performance, enabling Dataflow to process and analyze large datasets with agility and speed. This strategic handling of data redistribution is a cornerstone in achieving optimal performance and resource utilization within the Google Cloud Dataflow environment.
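To make the idea of redistribution concrete, here is a minimal plain-Python sketch of a shuffle. It assumes a simple hash-partitioning scheme, in which every record with the same key is routed to the same worker. The function names and the use of CRC32 are illustrative choices, not a description of how Dataflow implements its shuffle.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def worker_for_key(key: str, num_workers: int) -> int:
    """Pick a worker deterministically from the key (CRC32 is stable across runs)."""
    return zlib.crc32(key.encode("utf-8")) % num_workers


def shuffle(pairs: Iterable[Tuple[str, int]], num_workers: int) -> List[Dict[str, List[int]]]:
    """Route each (key, value) pair to one worker and group values by key there."""
    workers: List[Dict[str, List[int]]] = [defaultdict(list) for _ in range(num_workers)]
    for key, value in pairs:
        workers[worker_for_key(key, num_workers)][key].append(value)
    return [dict(worker) for worker in workers]


sample = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]
grouped = shuffle(sample, 3)
```

After the shuffle, each key lives on exactly one worker, so a per-key aggregation can run locally. The cost lies in moving the records, which is why reducing the volume of shuffled data tends to pay off.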

Writing Dataflow Pipelines

Apache Beam SDK

In the realm of Google Cloud Dataflow, the key tool for crafting data pipelines is the Apache Beam Software Development Kit (SDK). This SDK serves as a versatile framework supporting multiple programming languages, such as Java, Python, and Go. This flexibility enables developers to articulate intricate data processing logic in a manner that is both concise and scalable. The Apache Beam SDK serves as the backbone, facilitating the creation of robust and efficient data workflows within the Google Cloud environment.

Transformations and PCollection

At the heart of Dataflow pipelines lie transformations, the fundamental building blocks that define the operations executed on the data. Complementing them is the PCollection (the “P” stands for parallel), which represents a distributed dataset. A PCollection is immutable: each transformation reads one or more PCollections and produces new ones rather than modifying its input. Together, these elements provide the means to structure and execute complex data manipulations in a streamlined and efficient manner.
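The relationship between transformations and collections can be sketched with ordinary Python tuples. This is a conceptual model only, with no Beam calls, and the sample sentences are made up. Each step builds a new collection and leaves its input untouched.

```python
from collections import Counter
from typing import Dict, Tuple

lines: Tuple[str, ...] = ("To be or not", "to BE")

# One-to-many step: each line yields several words (a new collection).
words: Tuple[str, ...] = tuple(word for line in lines for word in line.split())

# Element-wise step: normalize every word (again a new collection).
normalized: Tuple[str, ...] = tuple(word.lower() for word in words)

# Aggregating step: combine all elements that share the same value.
counts: Dict[str, int] = dict(Counter(normalized))
```

Because `lines` and `words` are never modified, any intermediate collection can feed more than one downstream step, which is how a pipeline can branch.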

Windowing and Triggers

Efficient data processing often necessitates considerations for time-sensitive scenarios, especially in streaming data contexts. Windowing and triggers emerge as critical components in this regard. Windowing involves the segmentation of data into time-based or custom-defined windows, allowing for more granular control over processing timelines. Triggers, on the other hand, govern when the results for a window are emitted, for example once the window is considered complete, or earlier as data arrives. This interplay between windowing and triggers is essential for ensuring timely and accurate data processing, particularly in dynamic and evolving data environments.
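A short plain-Python sketch can illustrate both ideas. It assumes fixed, non-overlapping windows of `size` seconds and a simple count-based trigger that emits a window's running sum after every `every` elements. The function names and the `(timestamp, value)` event shape are hypothetical, and real Beam triggers offer far more options.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[int, int]  # (timestamp in seconds, value)


def fixed_window_start(timestamp: int, size: int) -> int:
    """Return the start of the fixed window that contains the timestamp."""
    return timestamp - (timestamp % size)


def sum_per_window(events: Iterable[Event], size: int) -> Dict[int, int]:
    """Windowing: total the values that fall into each fixed window."""
    totals: Dict[int, int] = defaultdict(int)
    for timestamp, value in events:
        totals[fixed_window_start(timestamp, size)] += value
    return dict(totals)


def emit_every_n(events: Iterable[Event], size: int, every: int) -> List[Tuple[int, int]]:
    """Trigger: emit a window's running sum each time it has seen `every` more elements."""
    sums: Dict[int, int] = defaultdict(int)
    seen: Dict[int, int] = defaultdict(int)
    emitted: List[Tuple[int, int]] = []
    for timestamp, value in events:
        start = fixed_window_start(timestamp, size)
        sums[start] += value
        seen[start] += 1
        if seen[start] % every == 0:
            emitted.append((start, sums[start]))
    return emitted


events = [(0, 1), (30, 2), (59, 3), (60, 4), (125, 5), (61, 6)]
totals = sum_per_window(events, size=60)
early_results = emit_every_n(events, size=60, every=2)
```

Note that the event at second 61 arrives after the one at second 125 yet still lands in the window starting at 60: window assignment depends on the event's timestamp, not on arrival order.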

Monitoring and Debugging with Google Cloud Platform (GCP)

Dataflow Monitoring Console

Google Cloud Dataflow offers a robust Monitoring Console as a key feature for developers and administrators. This console serves as a central hub for monitoring the execution of data pipelines. Users gain valuable insights into job progress, resource utilization, and the identification of potential bottlenecks. This feature is essential for maintaining optimal performance and efficiency in data processing workflows. Through the Monitoring Console, developers can track the real-time status of their jobs and make informed decisions to enhance overall system performance.

Logging and Error Handling

Effective logging and error handling are fundamental components of any data processing system, and Google Cloud Dataflow excels in these aspects. The platform seamlessly integrates with Google Cloud Logging, providing developers with detailed logs for each job. These logs offer a comprehensive view of the pipeline’s execution, making it easier to troubleshoot and debug potential issues.

Proper error handling is crucial for maintaining the reliability of data processing pipelines, and Dataflow provides robust mechanisms to gracefully manage errors. By leveraging these capabilities, developers can ensure the smooth operation of their data processing workflows, minimizing downtime and enhancing overall system resilience. The integration of logging and error handling features contributes significantly to the platform’s suitability for building scalable and reliable data processing solutions on the Google Cloud.
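One widely used way to handle bad records gracefully is a dead-letter pattern: records that fail processing are set aside with their error instead of failing the whole job. The plain-Python sketch below assumes JSON input with a required `user_id` field. Both the field name and the function name are hypothetical, and this is not a Dataflow API.

```python
import json
from typing import Any, Dict, Iterable, List, Tuple


def parse_with_dead_letter(
    records: Iterable[str],
) -> Tuple[List[Dict[str, Any]], List[Dict[str, str]]]:
    """Split raw records into parsed events and dead letters carrying the error."""
    parsed: List[Dict[str, Any]] = []
    dead_letters: List[Dict[str, str]] = []
    for raw in records:
        try:
            event = json.loads(raw)
            if not isinstance(event, dict) or "user_id" not in event:
                raise ValueError("missing required field 'user_id'")
            parsed.append(event)
        except ValueError as err:  # json.JSONDecodeError is a ValueError subclass
            # Keep the original payload so the record can be inspected or replayed.
            dead_letters.append({"raw": raw, "error": str(err)})
    return parsed, dead_letters


good, bad = parse_with_dead_letter(['{"user_id": 1}', "not json", '{"x": 2}'])
```

In a pipeline, the dead-letter output would typically be written to its own sink so that failures can be reviewed without blocking healthy records.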

Optimization Techniques

Performance Optimization

Performance optimization for Dataflow pipelines depends on several factors that affect the efficiency of data processing jobs. The key elements are parallelization, data distribution, and resource utilization. By addressing these aspects, organizations can fine-tune their Dataflow pipelines to achieve faster data processing and improved overall system performance. This makes performance optimization integral to getting the most out of Google Cloud Dataflow.

Cost Optimization

In cloud computing, resource consumption translates directly into cost, so efficient resource utilization is paramount. Google Cloud Dataflow gives users several options for controlling their expenditure. Common cost optimization techniques include selecting appropriate machine types, adjusting the number of worker nodes based on workload, and optimizing data shuffling to minimize resource consumption.

By adopting these strategies, organizations can strike a balance between performance and cost-effectiveness, ensuring that their data processing tasks are not only efficient but also economically sustainable. Cost optimization becomes a strategic imperative for enterprises looking to harness the full potential of cloud resources without unnecessary financial burdens.
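The trade-off between machine type and worker count can be explored with a rough estimate. The sketch below assumes cost scales with worker vCPU-hours and memory GB-hours only. It ignores disk, shuffle, and any other charges, and the rates in the example are placeholders chosen for easy arithmetic, not actual Google Cloud prices.

```python
def estimate_job_cost(
    workers: int,
    vcpus_per_worker: int,
    memory_gb_per_worker: float,
    hours: float,
    vcpu_hour_rate: float,
    gb_hour_rate: float,
) -> float:
    """Rough cost: (vCPU-hours * vCPU rate) + (memory GB-hours * memory rate)."""
    vcpu_cost = workers * vcpus_per_worker * hours * vcpu_hour_rate
    memory_cost = workers * memory_gb_per_worker * hours * gb_hour_rate
    return vcpu_cost + memory_cost


# Placeholder rates (NOT real pricing): 0.05 per vCPU-hour, 0.005 per GB-hour.
ten_workers = estimate_job_cost(10, 4, 16, hours=2, vcpu_hour_rate=0.05, gb_hour_rate=0.005)
five_workers = estimate_job_cost(5, 4, 16, hours=2, vcpu_hour_rate=0.05, gb_hour_rate=0.005)
```

Under this simplified model, halving the worker count halves the cost only if the job still finishes in the same time. In practice fewer workers usually means a longer run, so both `workers` and `hours` need to be varied together.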

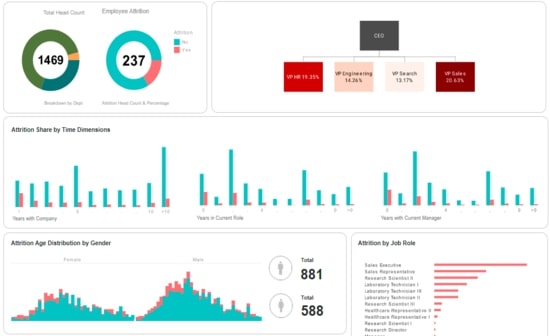

Key Features of GCP Cloud Monitoring:

Metrics and Dashboards:

GCP Cloud Monitoring offers a comprehensive solution for collecting and analyzing a diverse set of metrics related to resource utilization, system performance, and custom application metrics. Users can gain valuable insights into their infrastructure’s health by leveraging customizable dashboards. These dashboards empower users to create visual representations of metrics, making it easier to monitor and understand the performance of various components within their cloud environment.

Alerting and Notification:

Proactive issue identification is crucial for maintaining a robust cloud infrastructure. GCP Cloud Monitoring facilitates this through its alerting and notification features. Users can set up alerts based on predefined conditions so that they are notified when issues arise. Integration with various notification channels, including email, SMS, and third-party services like PagerDuty, ensures that the right people are informed promptly, facilitating quicker response times and issue resolution.
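The logic behind a typical alert condition can be sketched in a few lines of plain Python. The example assumes a condition of the form "metric stays above a threshold for a minimum number of consecutive samples". The function name and parameters are hypothetical and do not correspond to the Cloud Monitoring API.

```python
from typing import Iterable


def should_alert(samples: Iterable[float], threshold: float, min_consecutive: int) -> bool:
    """Return True if the metric exceeds the threshold for min_consecutive samples in a row."""
    run = 0
    for value in samples:
        # Extend the current breach run, or reset it when the metric recovers.
        run = run + 1 if value > threshold else 0
        if run >= min_consecutive:
            return True
    return False


brief_spike = should_alert([0.50, 0.95, 0.97, 0.60], threshold=0.9, min_consecutive=3)
sustained = should_alert([0.50, 0.95, 0.97, 0.99], threshold=0.9, min_consecutive=3)
```

Requiring several consecutive breaches is a common way to avoid paging people for a momentary spike, while still catching sustained problems.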

Logging Integration:

Seamless integration with GCP Cloud Logging enhances the monitoring capabilities of GCP Cloud Monitoring. This integration enables users to correlate metric data with log data, providing a more holistic understanding of system behavior. By combining metric and log data, users can investigate issues more effectively, gaining insights into the context and events leading up to performance anomalies or errors.

Real-world Use Cases:

ETL (Extract, Transform, Load):

Google Cloud Dataflow plays a pivotal role in Extract, Transform, Load (ETL) processes. Organizations leverage Dataflow to efficiently extract data from diverse sources, transform it as needed, and seamlessly load it into a destination for further analysis. The scalability and parallel processing capabilities of Dataflow make it a preferred choice for managing large-scale data transformations and integrations in ETL workflows.

Real-time Analytics:

In scenarios where real-time insights are crucial, Dataflow emerges as a powerful tool for processing streaming data. By employing Dataflow for real-time analytics, organizations can derive timely insights from continuously streaming data sources. This capability is particularly valuable in situations where quick decision-making is essential, such as monitoring live events, user interactions, or dynamic market conditions.

Machine Learning Pipelines:

The integration of Dataflow with machine learning frameworks enables organizations to establish end-to-end machine learning pipelines. From the initial stages of data preparation to model training and subsequent deployment, Dataflow streamlines the entire process. This integration not only enhances the efficiency of machine learning workflows but also ensures a seamless transition from raw data to deploying production-ready machine learning models, contributing to the development and deployment of robust AI applications.

Best Practices

Design Principles

In the realm of Google Cloud’s Dataflow pipelines, adhering to fundamental design principles becomes a linchpin for success. Modularization, simplicity, and reusability emerge as cornerstones in the creation of Dataflow pipelines that are not only functional but also maintainable and scalable.

By compartmentalizing functionalities into modular components, developers pave the way for streamlined maintenance and easier troubleshooting. Embracing simplicity ensures that pipelines remain comprehensible, reducing the likelihood of errors and facilitating collaboration among team members. Furthermore, prioritizing reusability fosters efficiency by enabling the integration of proven components into new pipelines, promoting a consistent and standardized approach to data processing.

Error Handling and Monitoring

The resilience of Dataflow pipelines hinges on robust error-handling mechanisms and vigilant monitoring practices. Recognizing that errors are an inherent part of complex data processing workflows, it becomes imperative to implement strategies that gracefully handle exceptions. Equally crucial is the integration of monitoring tools that provide real-time insights into pipeline performance.

Through proactive monitoring, potential issues can be identified and addressed before they escalate, ensuring the reliability and availability of data processing workflows. Together, error handling and monitoring play a pivotal role in sustaining the integrity of Dataflow pipelines.

Security Considerations

Within the realm of data processing, security takes center stage. Securing Dataflow pipelines comprehensively spans access controls that regulate who can interact with pipeline resources and encryption that safeguards sensitive data in transit and at rest.

Additionally, compliance must be considered so that Dataflow pipelines align with industry standards and regulatory requirements. By integrating these security measures, organizations can confidently deploy and operate Dataflow pipelines, mitigating risks and upholding the confidentiality, integrity, and availability of their data.

Google Cloud Dataflow: A Versatile Solution for Various Use Cases

Google Cloud Dataflow stands out as a powerful and versatile tool within the Google Cloud Platform, offering flexibility and scalability to address a diverse array of use cases. Its ability to handle both real-time and batch processing makes it a valuable asset for organizations seeking efficient data processing solutions.

Real-time Analytics:

One of the prominent use cases for Google Cloud Dataflow is in real-time analytics. Organizations often require the capability to process and analyze streaming data as it arrives, enabling them to make informed decisions based on the most up-to-date information. Dataflow’s architecture facilitates the seamless handling of streaming data, ensuring timely insights for critical decision-making processes.

Batch Processing:

For scenarios where data processing can occur in batches, Dataflow provides an efficient and scalable solution. This proves particularly beneficial for tasks like ETL (Extract, Transform, Load) operations, where large datasets need to be processed systematically. The versatility of Dataflow makes it adaptable to different processing requirements, catering to the needs of organizations dealing with diverse data processing workflows.

Event-Driven Applications:

Dataflow’s capabilities extend to the development of event-driven applications that respond in real-time to incoming events. This is pivotal for applications requiring low-latency responses to user actions or external events. Whether it’s updating a user interface or triggering specific actions based on incoming data, Dataflow empowers the creation of responsive and dynamic applications.

Machine Learning Pipelines:

In the realm of machine learning, Dataflow plays a crucial role in preprocessing and transforming large datasets before feeding them into machine learning models. This integration ensures that data is refined and prepared adequately for the machine learning algorithms, contributing to the overall efficiency and accuracy of the models.

Fraud Detection and Anomaly Detection:

The real-time processing capabilities of Dataflow make it an ideal choice for applications related to fraud detection and anomaly detection across various industries. Its ability to swiftly process and analyze data in real-time enables organizations to detect and respond to unusual patterns or activities promptly. This proves invaluable in sectors where identifying anomalies swiftly is critical to preventing potential threats or fraudulent activities.
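As a rough illustration of detecting unusual patterns in a stream, the plain-Python sketch below flags values that deviate strongly from the recent past. It assumes a simple z-score rule over a trailing window. The rule, the function name, and the sample values are illustrative assumptions, and production fraud detection generally relies on richer models.

```python
import statistics
from collections import deque
from typing import Deque, Iterable, List


def flag_anomalies(values: Iterable[float], window: int, z_threshold: float) -> List[int]:
    """Return indices of values more than z_threshold standard deviations
    away from the mean of the preceding `window` values."""
    history: Deque[float] = deque(maxlen=window)
    flagged: List[int] = []
    for index, value in enumerate(values):
        if len(history) == window:  # only score once the window is full
            mean = statistics.mean(history)
            stdev = statistics.pstdev(history)
            if stdev > 0 and abs(value - mean) / stdev > z_threshold:
                flagged.append(index)
        history.append(value)
    return flagged


amounts = [10, 11, 9, 10, 11, 50, 10]
suspicious = flag_anomalies(amounts, window=5, z_threshold=3.0)
```

Because each value is compared only with the values that came before it, the same logic can run incrementally as events arrive, which suits a streaming pipeline.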

Google Cloud Dataflow’s versatility positions it as a go-to solution for organizations with diverse data processing needs, offering a robust framework for real-time analytics, batch processing, event-driven applications, machine learning pipelines, and advanced anomaly detection scenarios.

Conclusion

Google Cloud Dataflow empowers organizations to harness the power of big data by providing a scalable, fully managed, and serverless solution for data processing. From understanding its architecture to writing efficient pipelines and optimizing performance, this comprehensive guide has covered the essential aspects of mastering Dataflow. As organizations continue to navigate the complexities of big data, Google Cloud Dataflow stands as a reliable ally in the quest for actionable insights and data-driven decision-making.