Understanding AWS Cloud Automation

What is Cloud Automation?

Cloud automation refers to the process of using software tools and technologies to automatically manage, provision, configure, and operate cloud-based infrastructure and services. This automation streamlines repetitive tasks, reduces human error, and improves efficiency in managing cloud resources. It involves scripting, orchestrating workflows, and utilizing various tools to achieve seamless deployment, scaling, monitoring, and optimization of cloud resources.

Why AWS Cloud Automation?

Amazon Web Services (AWS) is one of the leading cloud service providers, offering a vast array of services and resources for building and managing applications and infrastructure in the cloud. AWS Cloud Automation is particularly crucial due to the dynamic nature of cloud environments, where resources need to be provisioned, scaled, and managed rapidly to meet fluctuating demands. Automating tasks on AWS can help businesses optimize costs, enhance security, improve agility, and accelerate time-to-market for their products and services.

Key Components of AWS Cloud Automation:

- Infrastructure as Code (IaC): IaC involves managing and provisioning infrastructure using code rather than manual processes. AWS provides AWS CloudFormation, which describes resources declaratively in JSON or YAML templates, and the AWS CDK (Cloud Development Kit), which lets you define the same resources in general-purpose programming languages and synthesizes them into CloudFormation templates. Both enable consistent and repeatable deployments.

- Orchestration and Automation Tools: AWS offers various tools for orchestrating and automating workflows and processes. AWS Step Functions, AWS Lambda, and AWS Batch are examples of services that facilitate workflow automation, event-driven computing, and batch processing, respectively.

- Configuration Management: AWS provides tools like AWS Systems Manager, AWS OpsWorks, and AWS AppConfig for managing configurations across distributed systems, automating patching, and ensuring consistency in configurations across instances.

- Continuous Integration/Continuous Deployment (CI/CD): AWS supports CI/CD pipelines for automating the build, test, and deployment processes. Services like AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy enable developers to automate the deployment of applications and updates, ensuring rapid and reliable delivery.

- Monitoring and Logging: AWS offers services such as Amazon CloudWatch, which collects metrics and logs and triggers alarms, and AWS X-Ray, which traces requests across distributed applications. Together they enable automated alerts, performance monitoring, and troubleshooting, helping keep cloud environments reliable and performant.

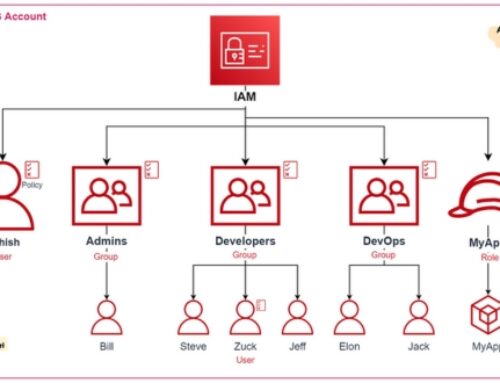

- Security and Compliance Automation: AWS provides tools and services for automating security and compliance tasks, such as AWS Identity and Access Management (IAM), AWS Config, and AWS Security Hub. These services help enforce security best practices, automate security assessments, and maintain compliance with regulatory requirements.

- Cost Management and Optimization: AWS offers services like AWS Cost Explorer, AWS Budgets, and AWS Trusted Advisor for managing and optimizing costs in the cloud. These services provide insights into cloud spending, automate cost allocation, and recommend optimizations to reduce costs and improve resource utilization.

Leveraging AWS CloudFormation for Infrastructure as Code (IaC)

Introduction to Infrastructure as Code (IaC):

Infrastructure as Code (IaC) is an approach to managing infrastructure in a way that mirrors software development practices. Instead of manually configuring servers, networks, and other infrastructure components, IaC allows you to define your infrastructure using code. This code can then be version-controlled, tested, and automated, providing consistency and repeatability in deploying and managing infrastructure.

Benefits of IaC:

The benefits of IaC include increased speed and agility in deploying infrastructure, improved consistency and reliability, reduced risk of errors, better scalability, and cost savings through automation. With IaC, infrastructure provisioning becomes more predictable and manageable, enabling teams to focus on higher-level tasks rather than repetitive manual configurations.

AWS CloudFormation Overview:

AWS CloudFormation is a service provided by Amazon Web Services (AWS) that allows you to define and provision AWS infrastructure as code. CloudFormation uses templates written in either JSON or YAML format to describe the desired state of your AWS resources. These templates can be used to create, update, or delete stacks of AWS resources in a consistent and repeatable manner.

Creating Templates and Stacks:

To use AWS CloudFormation, you create templates that define the resources you want to provision, such as EC2 instances, S3 buckets, or IAM roles. These templates can include parameters for customization and outputs for retrieving information about the provisioned resources. Once you have a template, you can create a stack by providing the template to CloudFormation, which will then handle provisioning and managing the resources defined in the template.
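
As a rough illustration, the code below builds a minimal CloudFormation template as a Python dictionary with one parameter, one resource, and one output, then serializes it to JSON. The parameter name `EnvName`, the logical ID `DataBucket`, and the tag values are hypothetical choices for this sketch.

```python
import json

def build_bucket_template() -> dict:
    """Return a minimal CloudFormation template (hypothetical example) as a dict."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example stack with one S3 bucket",
        "Parameters": {
            # Caller-supplied value used to customize the stack.
            "EnvName": {
                "Type": "String",
                "AllowedValues": ["dev", "prod"],
                "Default": "dev",
            }
        },
        "Resources": {
            # "DataBucket" is an arbitrary logical ID chosen for this sketch.
            "DataBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "Tags": [{"Key": "Environment", "Value": {"Ref": "EnvName"}}],
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
        "Outputs": {
            # Ref on an S3 bucket resolves to the generated bucket name.
            "BucketName": {"Value": {"Ref": "DataBucket"}}
        },
    }

template_body = json.dumps(build_bucket_template(), indent=2)
print(template_body)
```

To create a stack from it, the JSON string could be passed as `TemplateBody` to the AWS SDK for Python (boto3), for example `boto3.client("cloudformation").create_stack(StackName="example-stack", TemplateBody=template_body)`, where the stack name is again hypothetical. Writing the template directly in YAML works just as well; generating it from code is only one option.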

Best Practices for AWS CloudFormation:

Some best practices for using AWS CloudFormation include organizing templates and stacks in a logical manner, using parameters and mappings for flexibility and reusability, leveraging nested stacks for modularization, and using change sets to preview changes before applying them. It’s also important to implement version control for your templates, automate deployments using tools like AWS CodePipeline or AWS SAM, and regularly review and update your templates to keep them current with your infrastructure needs.
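
Change sets are created by CloudFormation itself (for example through the SDK's `create_change_set` and `describe_change_set` calls), but the idea behind the preview can be sketched locally. The hypothetical helper below compares the `Resources` sections of two templates and labels each logical ID with the action names a change set reports (`Add`, `Modify`, `Remove`). It is only an approximation: a real change set also resolves parameters and reports whether a modification requires replacing the resource.

```python
def preview_changes(old: dict, new: dict) -> list[tuple[str, str]]:
    """Approximate a change set by diffing the Resources of two templates."""
    old_res = old.get("Resources", {})
    new_res = new.get("Resources", {})
    changes = []
    # Walk every logical ID that appears in either template, in a stable order.
    for logical_id in sorted(old_res.keys() | new_res.keys()):
        if logical_id not in old_res:
            changes.append(("Add", logical_id))
        elif logical_id not in new_res:
            changes.append(("Remove", logical_id))
        elif old_res[logical_id] != new_res[logical_id]:
            changes.append(("Modify", logical_id))
    return changes

old = {"Resources": {
    "Bucket": {"Type": "AWS::S3::Bucket"},
    "Queue": {"Type": "AWS::SQS::Queue"},
}}
new = {"Resources": {
    "Bucket": {"Type": "AWS::S3::Bucket",
               "Properties": {"VersioningConfiguration": {"Status": "Enabled"}}},
    "Topic": {"Type": "AWS::SNS::Topic"},
}}
print(preview_changes(old, new))
# [('Modify', 'Bucket'), ('Remove', 'Queue'), ('Add', 'Topic')]
```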

Streamlining Workflows with AWS Step Functions

Introduction to AWS Step Functions:

AWS Step Functions is a serverless orchestration service provided by Amazon Web Services (AWS). It allows you to coordinate multiple AWS services into workflows, which Step Functions models as state machines. With Step Functions, you can build applications that automatically perform tasks in response to events, such as changes in data, user actions, or system state transitions. Step Functions provides a visual interface for designing and organizing workflows, making it easier to define complex business logic without managing infrastructure.

Building State Machines:

State machines in AWS Step Functions define the sequence of steps or tasks that need to be executed in a workflow. Each step in the state machine represents a unit of work, and transitions between steps are based on the outcomes of previous steps. State machines can include various types of tasks, such as AWS Lambda functions, AWS Batch jobs, Amazon ECS tasks, or even custom activities. You can define state machines using the Amazon States Language (ASL), a JSON-based domain-specific language.
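
A minimal ASL definition, written here as a Python dictionary, might look like the following: a `Task` state invokes a Lambda function, a `Choice` state branches on its output, and the workflow ends in a `Succeed` or `Fail` state. The state names, the `$.valid` field, and the Lambda ARN (including the account ID) are placeholders. The `dangling_transitions` helper is a hypothetical pre-deployment check, not part of Step Functions, that reports transitions pointing at undefined states.

```python
import json

# Placeholder Lambda ARN; substitute a real function ARN from your account.
VALIDATE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:ValidateOrder"

definition = {
    "Comment": "Sketch of an order-validation workflow",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {"Type": "Task", "Resource": VALIDATE_ARN, "Next": "IsValid"},
        "IsValid": {
            "Type": "Choice",
            "Choices": [{"Variable": "$.valid", "BooleanEquals": True, "Next": "Done"}],
            "Default": "Rejected",
        },
        "Done": {"Type": "Succeed"},
        "Rejected": {"Type": "Fail", "Error": "InvalidOrder", "Cause": "Validation failed"},
    },
}

def dangling_transitions(defn: dict) -> list[str]:
    """Return state names referenced by StartAt/Next/Default/Choices that are not defined."""
    states = defn["States"]
    targets = [defn["StartAt"]]
    for state in states.values():
        targets.extend(t for t in (state.get("Next"), state.get("Default")) if t)
        targets.extend(choice["Next"] for choice in state.get("Choices", []))
    return [t for t in targets if t not in states]

print(json.dumps(definition, indent=2))
print(dangling_transitions(definition))  # []
```

The serialized JSON is what you would pass as the `definition` argument when creating the state machine, for example with the boto3 `stepfunctions` client's `create_state_machine(name=..., definition=..., roleArn=...)`.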

Integrating with AWS Services:

AWS Step Functions integrates with many AWS services, enabling you to use their capabilities within your workflows. For example, you can start state machine executions in response to events from services like Amazon S3, Amazon SQS, Amazon SNS, AWS IoT, or AWS Glue, typically by routing those events through Amazon EventBridge or through a small intermediary such as a Lambda function. Furthermore, state machine steps can invoke AWS Lambda functions directly, allowing you to execute custom code as part of your workflow. This tight integration with other AWS services enables you to build powerful and scalable applications.
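
One common way to start a workflow from an S3 upload is an Amazon EventBridge rule whose target is the state machine. The sketch below shows an event pattern for S3 `Object Created` events from a single bucket, plus a deliberately simplified matcher that handles only exact-value lists and nested fields, to show how a pattern is compared against an event. The bucket name is hypothetical, the bucket must have EventBridge notifications turned on, and real EventBridge patterns support many more operators (prefix, numeric, `anything-but`, and others).

```python
# Event pattern for S3 "Object Created" events from one (hypothetical) bucket.
PATTERN = {
    "source": ["aws.s3"],
    "detail-type": ["Object Created"],
    "detail": {"bucket": {"name": ["example-upload-bucket"]}},
}

def matches(pattern: dict, event: dict) -> bool:
    """Simplified matching: nested dicts recurse, lists list the allowed exact values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True  # fields not mentioned in the pattern are ignored

sample_event = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "example-upload-bucket"},
        "object": {"key": "orders/1.json"},
    },
}
print(matches(PATTERN, sample_event))  # True
```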

Monitoring and Debugging Workflows:

AWS Step Functions provides built-in tools for monitoring and debugging workflows. You can track the execution of state machines in real-time using Amazon CloudWatch Logs and Metrics, gaining insights into each step’s progress and performance. Additionally, Step Functions integrates with AWS X-Ray, allowing you to trace and analyze the execution flow across distributed components within your workflows. This visibility helps you identify and troubleshoot errors, optimize performance, and ensure the reliability of your applications.

Real-world Use Cases of AWS Step Functions:

AWS Step Functions can be applied to a wide range of use cases across various industries. Some common examples include:

- Workflow automation: Automate business processes and streamline repetitive tasks, such as order processing, data processing pipelines, or content management workflows.

- Microservices orchestration: Coordinate interactions between microservices in distributed applications, ensuring proper sequencing and error handling.

- ETL (Extract, Transform, Load) pipelines: Build scalable and fault-tolerant data processing pipelines for ingesting, transforming, and loading data from multiple sources into data lakes or data warehouses.

- Serverless application orchestration: Orchestrate serverless functions and services within event-driven architectures, allowing you to build highly responsive and scalable applications.

- DevOps automation: Implement continuous integration and continuous delivery (CI/CD) pipelines, automating the deployment and testing of software applications with ease.

Enhancing Scalability with AWS Auto Scaling

Introduction to AWS Auto Scaling:

AWS Auto Scaling is a service provided by Amazon Web Services (AWS) that automatically adjusts the capacity of your resources to maintain steady, predictable performance at the lowest possible cost. It helps you manage and optimize the scaling of your applications, ensuring they can handle varying levels of traffic efficiently. With AWS Auto Scaling, you can set up scaling policies to automatically add or remove instances based on demand, eliminating the need for manual intervention.

Dynamic Scaling vs. Predictive Scaling:

Dynamic scaling involves adjusting resources in real-time based on current demand. AWS Auto Scaling dynamically adds or removes instances to match traffic patterns, ensuring optimal performance and cost-efficiency. Predictive scaling, on the other hand, uses machine learning algorithms to forecast future demand and scale resources preemptively. This allows for smoother scaling transitions and helps prevent over-provisioning or under-provisioning.

Configuration and Policies:

AWS Auto Scaling allows you to define scaling policies that dictate how your resources should scale based on various metrics such as CPU utilization, network traffic, or custom CloudWatch alarms. You can configure target tracking scaling policies, step scaling policies, or simple scaling policies depending on your specific requirements. Additionally, you can set minimum and maximum capacity limits to ensure that your resources scale within predefined boundaries.
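
The snippet below sketches a target tracking policy for an EC2 Auto Scaling group that aims to keep average CPU utilization near 50%, expressed as keyword arguments for the `PutScalingPolicy` API. The group and policy names are hypothetical. The `desired_capacity` function is a simplified proportional model of what target tracking tries to achieve, clamped to the minimum and maximum capacity; it is not AWS's exact algorithm, which also accounts for instance warm-up and scales in more gradually than it scales out.

```python
import math

# Keyword arguments for EC2 Auto Scaling PutScalingPolicy (names are hypothetical).
target_tracking_policy = {
    "AutoScalingGroupName": "web-asg",
    "PolicyName": "keep-cpu-near-50",
    "PolicyType": "TargetTrackingScaling",
    "TargetTrackingConfiguration": {
        "PredefinedMetricSpecification": {"PredefinedMetricType": "ASGAverageCPUUtilization"},
        "TargetValue": 50.0,
    },
}

def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Simplified proportional estimate of the capacity that brings the metric to target."""
    needed = math.ceil(current * metric / target)
    # Never go outside the group's configured bounds.
    return max(min_size, min(max_size, needed))

print(desired_capacity(4, 80.0, 50.0, min_size=2, max_size=10))  # 7
```

With boto3, the dictionary could be applied as `boto3.client("autoscaling").put_scaling_policy(**target_tracking_policy)`. In this model, four instances averaging 80% CPU against a 50% target give ceil(4 * 80 / 50) = 7 instances.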

Integration with AWS Services:

AWS Auto Scaling integrates with various AWS services such as Amazon EC2 (Elastic Compute Cloud), Amazon ECS (Elastic Container Service), Amazon DynamoDB, and Amazon Aurora. This allows you to automatically scale compute instances, containers, or database capacity (for example, DynamoDB read and write throughput or the number of Aurora replicas) based on workload demand. Scaling for services other than EC2 is handled by AWS Application Auto Scaling, which can also scale custom resources that you expose through an Amazon API Gateway endpoint.
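
For services scaled through Application Auto Scaling, such as a DynamoDB table in provisioned capacity mode, setup typically takes two calls: register the scalable target with its capacity bounds, then attach a policy. The hypothetical helper below builds the keyword arguments for `RegisterScalableTarget` and `PutScalingPolicy` for table read capacity; the table name and target percentage are placeholders.

```python
def dynamodb_read_scaling(table: str, min_rcu: int, max_rcu: int,
                          target_pct: float) -> tuple[dict, dict]:
    """Build kwargs for Application Auto Scaling on a table's read capacity."""
    resource_id = f"table/{table}"
    dimension = "dynamodb:table:ReadCapacityUnits"
    target = {
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_rcu,
        "MaxCapacity": max_rcu,
    }
    policy = {
        "PolicyName": f"{table}-read-target-tracking",
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Keep consumed/provisioned read capacity near this percentage.
            "TargetValue": target_pct,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBReadCapacityUtilization"
            },
        },
    }
    return target, policy

target, policy = dynamodb_read_scaling("Orders", min_rcu=5, max_rcu=100, target_pct=70.0)
print(target["ResourceId"], policy["PolicyType"])  # table/Orders TargetTrackingScaling
```

These could then be applied with `client.register_scalable_target(**target)` followed by `client.put_scaling_policy(**policy)`, where `client = boto3.client("application-autoscaling")`.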

Best Practices for AWS Auto Scaling:

Some best practices for effectively using AWS Auto Scaling include:

- Utilizing multiple Availability Zones for increased fault tolerance and high availability.

- Monitoring and adjusting scaling policies regularly to ensure they align with changing workload patterns.

- Setting up appropriate CloudWatch alarms to trigger scaling actions based on key performance metrics.

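- Implementing health checks (EC2 status checks and, behind a load balancer, Elastic Load Balancing health checks) so that unhealthy instances are detected and automatically replaced in your Auto Scaling groups.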

- Leveraging AWS services such as Elastic Load Balancing and Amazon Route 53 for distributing traffic across scalable resources.

Automating Serverless Deployments with AWS Lambda

Introduction to AWS Lambda:

AWS Lambda is a serverless compute service provided by Amazon Web Services (AWS) that lets you run code without provisioning or managing servers. With Lambda, you can upload your code and AWS takes care of everything required to run and scale your code with high availability. Lambda supports multiple programming languages including Python, Node.js, Java, and more. It enables you to execute code in response to events such as changes to data in Amazon S3 buckets, updates to DynamoDB tables, HTTP requests via API Gateway, and more.

Serverless Architecture Overview:

Serverless architecture is a cloud computing model where the cloud provider dynamically manages the allocation and provisioning of servers. It allows developers to focus on writing code without worrying about server management, scaling, or maintenance. In serverless architectures, applications are broken down into smaller functions, and each function is executed independently in response to events.

Creating Lambda Functions:

Creating Lambda functions involves writing the code for your application logic and configuring the function in the AWS Management Console, AWS CLI, or AWS SDKs. You can choose from various programming languages supported by Lambda. Once created, Lambda functions can be triggered by various event sources such as HTTP requests, file uploads, database changes, scheduled events, and more.
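
As a minimal sketch, a Python function triggered by S3 uploads might read the bucket and object key from each record in the event. `lambda_handler` is the default handler name the Lambda console uses for Python, but any `module.function` can be configured; the returned dictionary and the sample event below are arbitrary choices for this example.

```python
import json
from urllib.parse import unquote_plus

def lambda_handler(event, context):
    """Log each object that triggered the function from an S3 notification."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded (a space becomes '+'), so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        print(json.dumps({"bucket": bucket, "key": key}))  # ends up in CloudWatch Logs
        processed.append(f"s3://{bucket}/{key}")
    return {"processed": processed}

# Local invocation with a trimmed-down, hypothetical S3 event.
sample = {"Records": [{"s3": {"bucket": {"name": "example-bucket"},
                              "object": {"key": "reports/q1+summary.csv"}}}]}
print(lambda_handler(sample, None))
```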

Event Sources and Triggers:

Event sources are the entities that trigger the execution of Lambda functions. AWS Lambda supports a wide range of event sources including Amazon S3, Amazon DynamoDB, Amazon SNS, Amazon SQS, Amazon API Gateway, Amazon EventBridge (formerly CloudWatch Events), and more. These event sources generate events that Lambda functions can respond to. Triggers are the configurations that connect event sources to Lambda functions, specifying which functions should be executed when certain events occur.
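
When SQS is the event source, Lambda receives messages in batches. If the event source mapping has `ReportBatchItemFailures` enabled, the function can return only the IDs of the messages that failed, so that just those are retried instead of the whole batch. The sketch below assumes that setting is on; `process` is a hypothetical stand-in for real business logic.

```python
import json

def lambda_handler(event, context):
    """Process an SQS batch and report only the failed messages back to Lambda."""
    failures = []
    for record in event["Records"]:
        try:
            process(json.loads(record["body"]))
        except Exception:
            # Only these messages become visible in the queue again for a retry.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}

def process(body: dict) -> None:
    """Hypothetical business logic: reject messages without an order_id."""
    if "order_id" not in body:
        raise ValueError("missing order_id")
```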

Monitoring and Troubleshooting Lambda Functions:

Monitoring Lambda functions involves tracking metrics such as invocation count, execution duration, error rate, and more using Amazon CloudWatch. CloudWatch provides logs and metrics that help you monitor the performance and health of your Lambda functions. Additionally, AWS X-Ray can be used for tracing and debugging Lambda function invocations, helping to identify performance bottlenecks and troubleshoot issues in distributed serverless applications.
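
Besides the built-in metrics, a function can publish custom metrics by writing log lines in CloudWatch Embedded Metric Format (EMF), which CloudWatch Logs converts into metrics without separate API calls. The helper below builds one such record; the namespace, function name, and metric name are hypothetical.

```python
import json
import time

def emf_record(namespace: str, function_name: str, metric: str,
               value: float, unit: str = "Milliseconds") -> dict:
    """Build a CloudWatch Embedded Metric Format log record with one metric."""
    return {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["FunctionName"]],  # one dimension set
                "Metrics": [{"Name": metric, "Unit": unit}],
            }],
        },
        # Dimension and metric values live at the top level of the record.
        "FunctionName": function_name,
        metric: value,
    }

# Printed from inside a Lambda function, this line goes to CloudWatch Logs.
print(json.dumps(emf_record("ExampleApp", "process-orders", "ProcessingTime", 123.0)))
```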

Orchestration in AWS Cloud

Orchestration in the AWS Cloud refers to the process of automating, coordinating, and managing various resources and services within the Amazon Web Services (AWS) ecosystem. It involves streamlining workflows, managing dependencies, handling errors, and ensuring scalability and performance to optimize the efficiency of cloud-based applications and infrastructure.

Introduction to Orchestration:

Orchestration plays a crucial role in modern cloud computing environments, where complex systems and applications often consist of numerous interconnected components. By orchestrating these components, organizations can automate repetitive tasks, reduce manual intervention, and improve overall agility and efficiency. In AWS, orchestration enables users to seamlessly deploy, configure, and manage resources such as virtual machines, databases, storage, networking, and more, across different AWS services.

AWS Orchestration Services Overview:

AWS provides a variety of services and tools to facilitate orchestration tasks. These include AWS Step Functions, AWS CloudFormation, AWS Elastic Beanstalk, AWS CodePipeline, AWS OpsWorks, and more. Each of these services serves specific purposes in orchestrating workflows, managing resources, and automating deployment pipelines. For instance, AWS Step Functions enables the creation of serverless workflows to coordinate multiple AWS services, while AWS CloudFormation allows for the provisioning and management of AWS resources using code templates.

Designing Orchestration Workflows:

Designing effective orchestration workflows involves defining the sequence of tasks, specifying the interactions between different components, and determining the logic for decision-making and branching. It often requires understanding the dependencies between various resources and services, as well as considering factors such as data flow, concurrency, and error handling. AWS offers a range of tools and services with features for designing and visualizing workflows, making it easier for users to create and manage complex orchestration processes.
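
Dependency-aware ordering sits at the heart of workflow design. As a generic sketch (not how any AWS service implements it), Python's standard `graphlib` module can turn a map of task dependencies into waves of tasks that are safe to run in parallel, similar in spirit to how CloudFormation orders resources from their references and `DependsOn` attributes. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks mapped to the tasks they depend on.
dependencies = {
    "create_vpc": set(),
    "create_log_bucket": set(),
    "create_subnets": {"create_vpc"},
    "create_database": {"create_subnets"},
    "create_cache": {"create_subnets"},
    "deploy_app": {"create_database", "create_cache", "create_log_bucket"},
    "configure_dns": {"deploy_app"},
}

def execution_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks within one wave do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as finished
    return waves

for wave in execution_waves(dependencies):
    print(wave)
```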

Managing Dependencies and Error Handling:

In orchestration, managing dependencies between tasks or services is essential to ensure that workflows execute smoothly and efficiently. This includes handling dependencies related to data availability, service readiness, and task completion. Additionally, robust error handling mechanisms are crucial to detect and respond to failures or unexpected conditions during execution. AWS provides features such as automatic retries with exponential backoff, error catching with fallback paths (for example, the Retry and Catch fields of Step Functions states), and fault tolerance capabilities to help users manage dependencies and handle errors effectively in their orchestration workflows.
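
In Step Functions, retries and error handling are declared on the state itself. The fragment below, with a placeholder Lambda ARN and hypothetical state names, retries failed or timed-out tasks up to three times with exponential backoff and routes any remaining error to a fallback state. The `retry_delays` helper is a simplified illustration of the waits this produces, using the ASL defaults (1 second, 3 attempts, rate 2.0) when a field is omitted and ignoring optional delay caps and jitter.

```python
# Amazon States Language Task state with Retry and Catch (placeholder names/ARN).
charge_card_state = {
    "Type": "Task",
    "Resource": "arn:aws:lambda:us-east-1:123456789012:function:ChargeCard",
    "Retry": [{
        "ErrorEquals": ["States.Timeout", "States.TaskFailed"],
        "IntervalSeconds": 2,
        "MaxAttempts": 3,
        "BackoffRate": 2.0,
    }],
    "Catch": [{
        # Runs only after the retries above are exhausted (or for other errors).
        "ErrorEquals": ["States.ALL"],
        "ResultPath": "$.error",
        "Next": "NotifyFailure",
    }],
    "Next": "ShipOrder",
}

def retry_delays(retrier: dict) -> list[float]:
    """Wait before retry n (0-based): IntervalSeconds * BackoffRate ** n."""
    interval = retrier.get("IntervalSeconds", 1)
    rate = retrier.get("BackoffRate", 2.0)
    attempts = retrier.get("MaxAttempts", 3)
    return [interval * rate ** n for n in range(attempts)]

print(retry_delays(charge_card_state["Retry"][0]))  # [2.0, 4.0, 8.0]
```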

Scalability and Performance Considerations:

As applications and workloads scale in the cloud, orchestrating resources for optimal performance becomes increasingly important. Scalability considerations involve designing orchestration workflows that can adapt dynamically to changing demand, effectively utilizing resources, and distributing workloads efficiently across multiple instances or regions. Performance optimization focuses on reducing latency, improving throughput, and minimizing resource contention to deliver a seamless user experience. AWS offers services like auto-scaling, load balancing, caching, and content delivery networks (CDNs) to address scalability and performance challenges in orchestration. By carefully considering these factors, organizations can leverage the full potential of the AWS Cloud to build scalable, high-performance applications with efficient orchestration capabilities.

Advanced Techniques for AWS Automation and Orchestration

Continuous Integration/Continuous Deployment (CI/CD) Pipelines:

CI/CD pipelines are automated workflows used to streamline the process of delivering software updates. In AWS, tools like AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy are commonly used to set up CI/CD pipelines.

These pipelines automate the building, testing, and deployment of applications, ensuring that changes to the codebase are quickly and efficiently integrated into the production environment.

CI/CD pipelines help teams to achieve faster release cycles, improve collaboration, and maintain the reliability of their applications.

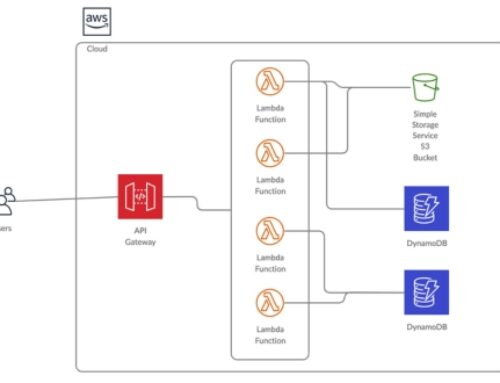

Serverless Orchestration with AWS Lambda:

AWS Lambda is a serverless computing service that allows you to run code without provisioning or managing servers.

Serverless orchestration involves coordinating the execution of multiple serverless functions to accomplish a larger task.

AWS Lambda can be used in conjunction with other AWS services like Amazon API Gateway, Amazon S3, Amazon DynamoDB, etc., to build complex, event-driven applications.

With Lambda, developers can focus on writing code without worrying about infrastructure management, scaling, or availability.

Data Pipeline Automation with AWS Glue:

AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easy to prepare and load data for analytics.

It automates the process of discovering, cataloging, and transforming data, making it available for analysis in Amazon Redshift, Amazon S3, or other analytics tools.

AWS Glue simplifies data pipeline development by providing a visual interface for creating and managing ETL jobs.

It supports various data sources and formats, including relational databases, data warehouses, and streaming data.

AI-driven Automation with Amazon SageMaker:

Amazon SageMaker is a fully managed machine learning service that enables developers and data scientists to build, train, and deploy machine learning models at scale.

SageMaker provides built-in algorithms, model training environments, and deployment options, reducing the complexity and time required to develop machine learning applications.

With SageMaker, you can automate model training, tuning, and deployment processes, making it easier to incorporate machine learning capabilities into your applications.

It also offers capabilities for data labeling, model monitoring, and experimentation, facilitating the entire machine-learning lifecycle.

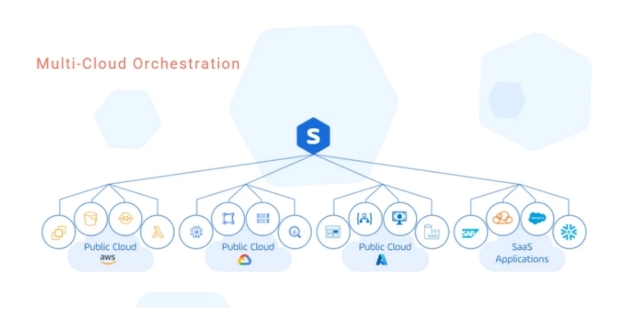

Multi-Cloud Orchestration Strategies:

Multi-cloud orchestration involves managing resources and workloads across multiple cloud providers, such as AWS, Azure, and Google Cloud Platform (GCP).

Organizations adopt multi-cloud strategies to mitigate vendor lock-in, increase redundancy, and leverage best-of-breed services from different providers.

AWS provides services like AWS Organizations and AWS Control Tower to help manage multiple AWS accounts and resources centrally.

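Within AWS, tools like AWS CloudFormation and AWS Service Catalog can provision and manage resources consistently across accounts and Regions. They do not provision resources in Azure or GCP directly, so multi-cloud setups typically pair them with provider-agnostic tooling or with each provider's native services.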

Implementing multi-cloud orchestration requires careful planning, automation, and governance to ensure security, compliance, and cost optimization across the entire infrastructure.

Best Practices and Use Cases

Cost Optimization Strategies

Cost optimization strategies involve identifying and implementing measures to minimize expenses while maximizing value. This can be achieved through various means such as resource allocation, cloud service selection, and optimization of infrastructure usage.

One common approach is leveraging cloud computing services effectively. By utilizing cloud resources, organizations can scale their infrastructure according to demand, thereby avoiding unnecessary costs associated with maintaining on-premises hardware. Additionally, employing tools and techniques for monitoring resource usage can help identify areas of inefficiency and optimize spending.

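Furthermore, the pay-as-you-go model lets organizations pay only for the resources they use, rather than maintaining fixed infrastructure that may not always be fully utilized. For predictable workloads, Reserved Instances exchange a one- or three-year commitment for lower rates, and Spot Instances offer spare EC2 capacity at a steep discount for workloads that can tolerate interruptions. Combining these purchasing options can lead to significant cost savings.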

Implementing cost tagging and tracking mechanisms is also crucial for understanding where expenses are occurring and identifying opportunities for optimization. By categorizing spending by project, department, or application, organizations can allocate resources more effectively and make informed decisions about where to focus cost-saving efforts.
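
Once resources are tagged, spend can be grouped by tag value. The sketch below sums hypothetical billing line items by a `Project` tag and keeps untagged spend visible. In practice the data would come from a source such as the Cost and Usage Report or Cost Explorer (where the tag must also be activated as a cost allocation tag); the figures here are invented for illustration.

```python
from collections import defaultdict

# Invented line items; real data would come from AWS billing exports.
line_items = [
    {"service": "AmazonEC2", "cost": 120.50, "tags": {"Project": "checkout"}},
    {"service": "AmazonS3", "cost": 14.25, "tags": {"Project": "analytics"}},
    {"service": "AmazonEC2", "cost": 80.00, "tags": {"Project": "analytics"}},
    {"service": "AWSLambda", "cost": 3.10, "tags": {}},
]

def cost_by_tag(items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum cost per value of one tag; untagged spend is grouped as '(untagged)'."""
    totals: dict[str, float] = defaultdict(float)
    for item in items:
        totals[item["tags"].get(tag_key, "(untagged)")] += item["cost"]
    return {k: round(v, 2) for k, v in sorted(totals.items())}

print(cost_by_tag(line_items, "Project"))
# {'(untagged)': 3.1, 'analytics': 94.25, 'checkout': 120.5}
```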

Security and Compliance Automation

Security and compliance automation involves streamlining processes and implementing tools to ensure that systems and data are protected against threats and adhere to regulatory requirements.

Automating security measures can help ensure consistency and reduce the risk of human error. This can include tasks such as vulnerability scanning, patch management, and configuration management. By automating these processes, organizations can more effectively identify and remediate security vulnerabilities before they can be exploited.
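
Configuration checks like these become easy to automate once policies are treated as data. As a minimal sketch, the hypothetical function below flags IAM policy statements that allow every action on every resource, the kind of rule a team might run in a CI pipeline before deployment. It is intentionally narrow and is no substitute for managed checks such as AWS Config rules or Security Hub controls.

```python
def overly_permissive_statements(policy: dict) -> list[int]:
    """Return indexes of Allow statements granting Action "*" on Resource "*"."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a policy may hold a single statement object
        statements = [statements]
    flagged = []
    for i, stmt in enumerate(statements):
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Action and Resource may each be a single string or a list of strings.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        if stmt.get("Effect") == "Allow" and "*" in actions and "*" in resources:
            flagged.append(i)
    return flagged

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
    ],
}
print(overly_permissive_statements(policy))  # [1]
```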

Compliance automation involves implementing controls and processes to ensure that systems and data comply with relevant regulations and standards, such as GDPR, HIPAA, or PCI DSS. This can include automating audit processes, generating compliance reports, and enforcing access controls.

Furthermore, integrating security and compliance into the software development lifecycle through practices such as DevSecOps can help ensure that security measures are built into applications from the outset, rather than being bolted on as an afterthought.

Overall, security and compliance automation are essential for protecting sensitive data, mitigating risks, and maintaining trust with customers and stakeholders.

DevOps and Agile Practices

DevOps and Agile practices focus on improving collaboration, efficiency, and agility within software development and IT operations teams.

DevOps emphasizes the integration and automation of processes between software development and IT operations teams, with the goal of accelerating software delivery and improving the quality of releases. This involves practices such as continuous integration, continuous delivery, and infrastructure as code, which enable teams to deploy changes more frequently and reliably.

Agile methodologies emphasize iterative development, frequent feedback, and adaptive planning. By breaking down projects into smaller, manageable tasks and delivering working software incrementally, Agile teams can respond more effectively to changing requirements and customer needs.

By adopting DevOps and Agile practices, organizations can achieve faster time-to-market, increased collaboration between teams, and improved responsiveness to customer feedback. This ultimately leads to higher-quality software, greater customer satisfaction, and a more competitive edge in the marketplace.

Conclusion

In conclusion, mastering AWS cloud automation and orchestration is essential for organizations looking to stay competitive and maximize the value of their cloud investments. By automating routine tasks, orchestrating complex workflows, and embracing best practices, businesses can achieve greater agility, scalability, and efficiency in the cloud.

Benefits include increased efficiency, reduced human error, faster deployment of resources, cost savings through optimization, improved scalability, and enhanced security through consistent configurations.

AWS offers various tools and services such as AWS CloudFormation, AWS Lambda, AWS Step Functions, AWS Systems Manager, AWS OpsWorks, AWS CodePipeline, AWS CodeDeploy, and more for automation and orchestration.

AWS Cloud Orchestration involves managing and coordinating multiple automated tasks or services within the AWS cloud environment to achieve a specific goal or workflow.

AWS CloudFormation is a service that allows you to define your infrastructure as code using JSON or YAML templates. These templates can then be used to provision and manage AWS resources in a repeatable and automated manner.

AWS Lambda is a serverless computing service that allows you to run code in response to events without provisioning or managing servers. It enables you to execute code in a scalable and cost-effective manner.