Understanding AWS API Gateway

AWS API Gateway is a fully managed service provided by Amazon Web Services (AWS) that enables developers to create, publish, maintain, monitor, and secure APIs at any scale. It acts as a gateway for managing HTTP(S) requests and responses between client applications and backend services running on AWS or elsewhere.

Overview of AWS API Gateway:

API Creation: Developers can create REST APIs, HTTP APIs, or WebSocket APIs using API Gateway. The console, CLI, and SDKs let you define resources (or routes), methods, request and response models, and integrations with backend services.

API Deployment: Once an API is created, developers deploy it to a stage (e.g., development, testing, production). A deployment is a snapshot of the API configuration at a specific point in time, and each stage is a named reference to one deployment, with its own invoke URL and settings.

API Management: API Gateway allows developers to manage APIs effectively by providing features such as versioning, documentation generation, throttling, caching, and access control using AWS Identity and Access Management (IAM) policies.

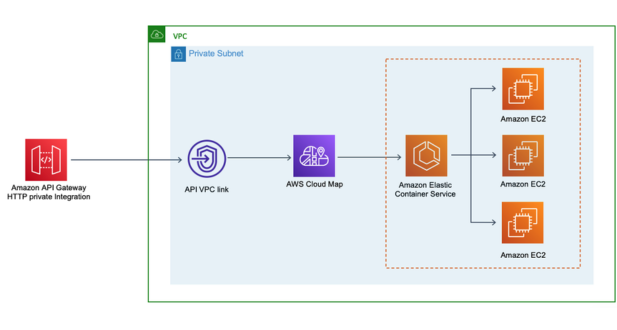

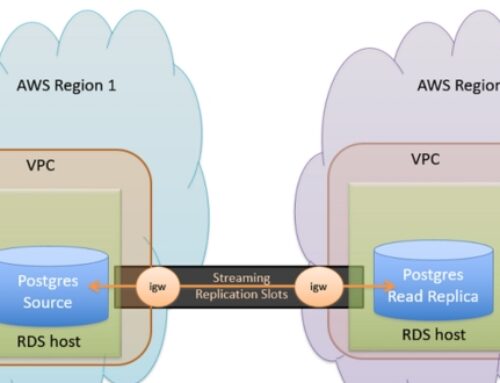

Integration with AWS Services: API Gateway integrates with AWS services such as AWS Lambda, AWS Elastic Beanstalk, Amazon EC2, AWS Step Functions, and Amazon S3, enabling developers to build serverless architectures or connect to existing backend services.

Monitoring and Logging: Developers can monitor API usage, performance, and errors using Amazon CloudWatch metrics and logs generated by API Gateway. This helps in identifying issues, optimizing performance, and scaling resources as needed.

Security: API Gateway provides multiple security mechanisms to protect APIs, including AWS IAM authentication, resource policies, SSL/TLS encryption, and integration with AWS WAF (Web Application Firewall) for filtering and blocking malicious requests.

Key Features and Benefits:

- Scalability: API Gateway scales automatically with traffic, within the throttling quotas set for your account, allowing developers to focus on building APIs without worrying about infrastructure management.

- Cost-effectiveness: With a pay-as-you-go pricing model, developers only pay for the API requests they receive and the data transferred out, making it cost-effective for both small-scale and large-scale applications.

- Ease of Use: API Gateway offers a user-friendly interface and comprehensive documentation, making it easy for developers to design, deploy, and manage APIs with minimal effort.

- Flexibility: Developers can choose from various integration options and customization features to tailor APIs according to their specific use cases and requirements.

- Reliability: API Gateway is designed for high availability and fault tolerance, with built-in redundancies and automated failover mechanisms to ensure reliable API performance.

- Security: API Gateway provides robust security features to protect APIs from unauthorized access, data breaches, and other security threats, enabling developers to build secure and compliant applications.

Pricing Model:

- Number of API Calls: Developers are charged for the API calls that API Gateway receives. Responses are not billed as separate calls.

- Data Transfer Out: Standard AWS data transfer charges apply to data sent out of API Gateway to the internet or other AWS regions.

- Cache Usage: API Gateway offers optional caching to improve performance and reduce latency. When caching is enabled, it is billed at an hourly rate that depends on the cache size you provision.

- WebSocket Connections: For WebSocket APIs, developers are charged for the messages sent and received and for the total connection minutes.

- Request Payload: Payload size can affect metering for some API types, where large requests or messages are counted in fixed-size increments. Payload complexity does not affect the price. Check the current pricing page for the exact increments.
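To make the pricing dimensions concrete, here is a minimal sketch of a monthly estimate. The `Rates` values in the usage example are placeholders chosen for easy arithmetic, not real AWS prices, and the function names are hypothetical; substitute the figures from the current pricing page for your region and API type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Unit prices in USD. Callers must supply real values; none are built in."""
    per_million_requests: float
    per_gb_transfer_out: float
    cache_per_hour: float


def estimate_monthly_cost(requests: int, gb_out: float, cache_hours: float, rates: Rates) -> float:
    """Sum the request, data transfer, and cache charges for one month."""
    request_cost = requests / 1_000_000 * rates.per_million_requests
    transfer_cost = gb_out * rates.per_gb_transfer_out
    cache_cost = cache_hours * rates.cache_per_hour
    return round(request_cost + transfer_cost + cache_cost, 2)


# Placeholder rates for illustration only (assumption, not AWS pricing).
example_rates = Rates(per_million_requests=1.00, per_gb_transfer_out=0.10, cache_per_hour=0.02)
example_total = estimate_monthly_cost(5_000_000, gb_out=20, cache_hours=720, rates=example_rates)
```

With these placeholder rates, 5 million requests, 20 GB out, and a cache running for 720 hours add up to 5.00 + 2.00 + 14.40 = 21.40. The point of the sketch is that the cache charge accrues per hour regardless of traffic, so it can dominate the bill for a low-traffic API.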

Getting Started with AWS API Gateway

Setting up an AWS account:

- If you don’t already have an AWS account, you’ll need to sign up for one. You can do this by visiting the AWS website and following the prompts to create a new account.

- During the signup process, you’ll need to provide some basic information, such as your email address, contact information, and payment details.

- Once your account is set up, you’ll have access to the AWS Management Console, where you can manage all of your AWS services and resources.

Accessing the AWS Management Console:

- To access the AWS Management Console, go to the AWS website and sign in with the credentials you used to create your account.

- Once logged in, you’ll be able to see a dashboard with various AWS services and resources available to you.

- You can find the API Gateway service by typing its name into the console search bar or by browsing the Services menu.

Creating your first API using AWS API Gateway:

- In the AWS Management Console, navigate to the API Gateway service.

- Click on “Create API” to start creating your first API.

- You’ll be prompted to choose between creating a new API or importing an existing one. For this example, select “New API.”

- Next, you’ll need to choose the API type (HTTP API, REST API, or WebSocket API) and define details such as the name and description. For REST APIs you can also choose the endpoint type (Regional, edge-optimized, or private).

- Once you’ve defined the basic details, click “Create API” to proceed.

- Now, you can start defining the resources and methods for your API. Resources represent the logical paths of your API, and methods define the actions that can be performed on those resources (e.g., GET, POST, PUT, DELETE).

- After defining your resources and methods, you can configure integrations with backend services, set up authorization and authentication, and define other settings such as request and response mappings.

- Once you’re satisfied with the configuration, deploy your API to make it accessible to clients.
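The same console steps can be scripted. The following is a minimal sketch, assuming the boto3 `apigateway` client for REST APIs; the API name, the `/hello` path, the `dev` stage, and the function name `create_hello_api` are all illustrative choices. It uses a MOCK integration so that no backend is required.

```python
def create_hello_api(client, name="hello-api", stage="dev"):
    """Create a REST API with GET /hello backed by a MOCK integration, then deploy it.

    `client` is expected to be boto3.client("apigateway"). It is passed in so the
    function can also be exercised with a stub.
    """
    api_id = client.create_rest_api(name=name, description="Example API")["id"]

    # A new REST API starts with only the root resource "/".
    resources = client.get_resources(restApiId=api_id)["items"]
    root_id = next(r["id"] for r in resources if r["path"] == "/")

    # Resource: the logical path. Method: the HTTP verb allowed on it.
    hello_id = client.create_resource(restApiId=api_id, parentId=root_id, pathPart="hello")["id"]
    client.put_method(restApiId=api_id, resourceId=hello_id, httpMethod="GET", authorizationType="NONE")

    # Integration: a MOCK backend that always answers with status 200.
    client.put_integration(
        restApiId=api_id, resourceId=hello_id, httpMethod="GET", type="MOCK",
        requestTemplates={"application/json": '{"statusCode": 200}'},
    )
    client.put_method_response(restApiId=api_id, resourceId=hello_id, httpMethod="GET", statusCode="200")
    client.put_integration_response(
        restApiId=api_id, resourceId=hello_id, httpMethod="GET", statusCode="200",
        responseTemplates={"application/json": '{"message": "hello"}'},
    )

    # Deploying to a stage is what makes the API reachable by clients.
    client.create_deployment(restApiId=api_id, stageName=stage)
    return api_id
```

To run it against a real account you would call `create_hello_api(boto3.client("apigateway"))` with credentials configured. The deployed method would then be reachable at `https://{api_id}.execute-api.{region}.amazonaws.com/dev/hello`.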

API Design and Configuration

- Designing RESTful APIs: REST (Representational State Transfer) is an architectural style for designing networked applications. RESTful APIs are designed around resources, which are identifiable entities that can be manipulated via the API. When designing RESTful APIs, you need to consider how to structure these resources in a logical and intuitive way.

- Defining resources, methods, and models: Resources are the key entities that the API exposes. Each resource should have a unique identifier (URI) and represent a logical concept within the application. Methods (or HTTP verbs) such as GET, POST, PUT, DELETE are used to perform operations on these resources. Additionally, models refer to the data structures that represent the resource’s state, including attributes and relationships.

- Configuring request and response mappings: Once you’ve designed your API, you need to configure how incoming requests are mapped to specific resources and methods, and how the responses are structured. This involves defining routes or endpoints that correspond to different resources and specifying the appropriate HTTP methods for each endpoint. Additionally, you’ll need to determine the format of the data exchanged between the client and the server, typically using JSON or XML for the payload.
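As a rough illustration of models and response mappings, the sketch below defines a hypothetical `Pet` model in JSON Schema style, checks a payload against it, and reshapes a backend record into the client-facing body. The field names and the `check_against_model` and `map_response` helpers are assumptions for illustration, and the checker covers only `required` and simple `type` rules, not full JSON Schema.

```python
import json

# Hypothetical "Pet" model written in JSON Schema style.
PET_MODEL = {
    "$schema": "http://json-schema.org/draft-04/schema#",
    "title": "Pet",
    "type": "object",
    "required": ["name", "age"],
    "properties": {"name": {"type": "string"}, "age": {"type": "integer"}},
}

_TYPES = {"string": str, "integer": int, "object": dict}


def check_against_model(payload: dict, model: dict) -> list:
    """Return a list of problems; an empty list means the payload fits the model."""
    problems = [f"missing field: {k}" for k in model.get("required", []) if k not in payload]
    for key, spec in model.get("properties", {}).items():
        if key in payload and not isinstance(payload[key], _TYPES[spec["type"]]):
            problems.append(f"wrong type for {key}: expected {spec['type']}")
    return problems


def map_response(backend_item: dict) -> str:
    """Reshape a backend record (assumed field names) into the JSON body sent to the client."""
    return json.dumps({"pet": {"name": backend_item["pet_name"], "age": backend_item["pet_age"]}})
```

For example, `check_against_model({"name": "Rex"}, PET_MODEL)` reports the missing `age` field, while `map_response({"pet_name": "Rex", "pet_age": 3})` hides the backend's internal field names from the client.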

Integration with AWS Services

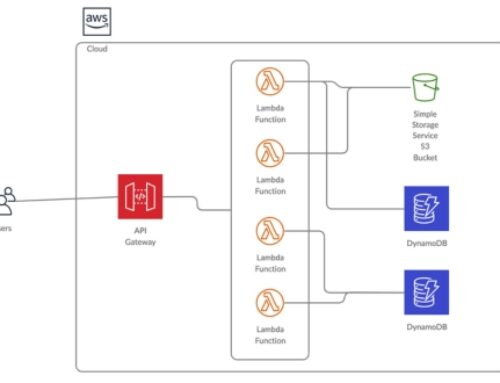

Integration with AWS Lambda:

- AWS Lambda is a serverless computing service that allows you to run code in response to events without provisioning or managing servers. Integration with Lambda means that API Gateway invokes a Lambda function to handle an incoming request and returns the function’s result to the client.

- For example, you might integrate your application with AWS Lambda to process incoming data from a web form, perform image resizing, or handle backend processing tasks based on specific events.
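A minimal sketch of a function behind a Lambda proxy integration is shown below. With proxy integration, API Gateway passes the request as an event dictionary and expects a dictionary with `statusCode`, `headers`, and a string `body` in return. The `name` query parameter and the greeting are illustrative.

```python
import json


def lambda_handler(event, context):
    """Handle a Lambda proxy integration request from API Gateway."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        # The body must be a string, so the payload is serialized here.
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be tested locally by calling it with a hand-built event, for instance `lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)`.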

Integration with Amazon DynamoDB:

- Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. Integration with DynamoDB involves storing and retrieving data from DynamoDB tables within your application.

- Your application might use DynamoDB to store user profiles, session data, or any other structured data. Integration with DynamoDB enables your application to perform CRUD (Create, Read, Update, Delete) operations on DynamoDB tables as needed.
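One common arrangement is a Lambda function that sits between API Gateway and a DynamoDB table. The sketch below assumes a table keyed on a hypothetical `user_id` attribute and a `table` object that behaves like `boto3.resource("dynamodb").Table(...)`. The factory function `make_handler` is an illustrative pattern, not a required structure.

```python
import json


def make_handler(table):
    """Build a Lambda proxy handler that stores and reads user profiles.

    `table` is expected to offer put_item, get_item, and delete_item like a
    boto3 DynamoDB Table resource. The key name `user_id` is an assumption.
    """
    def handler(event, context):
        method = event["httpMethod"]
        if method == "POST":
            # Create (or overwrite, which doubles as a simple update).
            item = json.loads(event["body"])
            table.put_item(Item=item)
            return {"statusCode": 201, "body": json.dumps(item)}
        if method == "GET":
            user_id = event["pathParameters"]["user_id"]
            found = table.get_item(Key={"user_id": user_id}).get("Item")
            if found is None:
                return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
            return {"statusCode": 200, "body": json.dumps(found)}
        if method == "DELETE":
            table.delete_item(Key={"user_id": event["pathParameters"]["user_id"]})
            return {"statusCode": 204, "body": ""}
        return {"statusCode": 405, "body": json.dumps({"error": "method not allowed"})}
    return handler
```

Note that DynamoDB returns numeric attributes as `Decimal` values, which `json.dumps` does not serialize by default, so a real handler would need a conversion step for items that contain numbers.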

Integration with other AWS services:

- Besides Lambda and DynamoDB, AWS offers a vast array of services covering storage, compute, databases, machine learning, analytics, networking, security, and more. Integration with other AWS services means leveraging these additional services within your application to enhance functionality, scalability, and efficiency.

- Examples of other AWS services that your application might integrate with include Amazon S3 for object storage, Amazon SQS for message queuing, Amazon SNS for notifications, Amazon ECS for container orchestration, Amazon RDS for relational databases, Amazon Comprehend for natural language processing, and many others.

Authentication and Authorization

API Key Authentication:

- API key authentication involves issuing a unique identifier (the API key) to clients accessing an API. In API Gateway, clients send the key in the x-api-key request header by default.

- API Gateway checks the key against the keys associated with a usage plan for the API stage. If the key is valid and within the plan’s throttling and quota limits, the client is granted access to the requested resources.

- API keys are simple to implement and are often used for public APIs or for accessing non-sensitive data. However, they lack the ability to identify individual users and can be more prone to misuse if not properly secured.
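From the client side, using an API key amounts to adding one header. The sketch below builds such a request with the standard library; the URL in the usage note is a placeholder and `build_request` is a hypothetical helper name.

```python
import urllib.request


def build_request(url: str, api_key: str) -> urllib.request.Request:
    """Attach the API key in the x-api-key header of a GET request."""
    return urllib.request.Request(url, headers={"x-api-key": api_key}, method="GET")
```

Sending it would look like `urllib.request.urlopen(build_request("https://example.com/dev/hello", key))`. Keep the key out of source control and out of URLs, since query strings tend to end up in logs.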

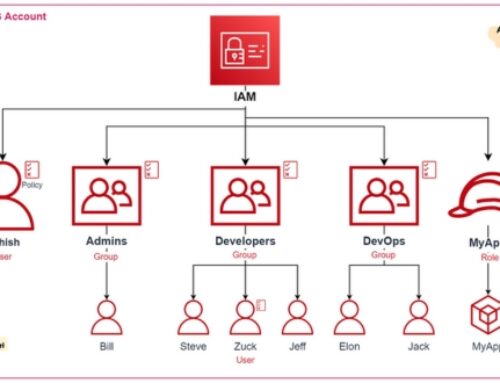

IAM Roles and Policies:

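- IAM (Identity and Access Management) roles and policies are the standard access-control mechanism in AWS, and comparable concepts exist in Azure and Google Cloud Platform. In API Gateway, IAM authorization requires callers to sign requests with AWS Signature Version 4, and IAM policies decide whether the caller may invoke the API.

- IAM roles define a set of permissions that specify what actions can be performed on specific resources. A role is assumed by a user, application, or AWS service rather than being permanently tied to one person.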

- Policies are JSON documents that define the permissions of a role or user. They specify which actions are allowed or denied on which resources.

- By properly configuring IAM roles and policies, organizations can enforce the principle of least privilege, ensuring that users have only the permissions necessary to perform their tasks.
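As an example of least privilege, the sketch below builds a policy document that allows invoking a single method on a single path of one API stage. The helper name `invoke_policy` and all identifier values in the usage note are placeholders.

```python
import json


def invoke_policy(region, account_id, api_id, stage, method, path):
    """Build a policy allowing one HTTP method on one resource path of a deployed API."""
    arn = f"arn:aws:execute-api:{region}:{account_id}:{api_id}/{stage}/{method}/{path.lstrip('/')}"
    return {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": "execute-api:Invoke", "Resource": arn}],
    }


def as_json(policy: dict) -> str:
    """Serialize the policy the way it would be pasted into IAM."""
    return json.dumps(policy, indent=2)
```

Calling `invoke_policy("us-east-1", "123456789012", "abc123", "prod", "GET", "/pets")` yields a statement scoped to `GET /pets` on the `prod` stage only. Widening access is then an explicit decision, for instance by replacing a segment of the ARN with `*`.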

OAuth 2.0 Authentication:

- OAuth 2.0 is an industry-standard protocol for authorization, not authentication. However, it’s often used in conjunction with authentication mechanisms to enable secure access to resources on behalf of a user.

- OAuth 2.0 allows a third-party application to obtain limited access to an HTTP service, either on behalf of a resource owner (user) or by allowing the third-party application to obtain access on its own behalf.

- OAuth 2.0 involves multiple actors: the resource owner, the client (third-party application), the authorization server, and the resource server. It utilizes access tokens to grant access to protected resources.

- OAuth 2.0 is commonly used in scenarios where users want to grant access to their data hosted on one service to another service without sharing their credentials.
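In API Gateway, one place where access tokens can be checked is a Lambda authorizer, which receives the token and returns an IAM policy that allows or denies the call. The sketch below shows only the shape of a token-based authorizer. The `KNOWN_TOKENS` lookup is a stand-in for real validation (such as verifying a signed token from your authorization server) and must not be used as is.

```python
# Hypothetical token table standing in for real token validation.
KNOWN_TOKENS = {"demo-token-123": "user-42"}


def _policy(principal_id, effect, method_arn):
    """Build the response shape a Lambda authorizer returns to API Gateway."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [{"Action": "execute-api:Invoke", "Effect": effect, "Resource": method_arn}],
        },
    }


def authorizer_handler(event, context):
    """Allow the call only if the bearer token maps to a known principal."""
    raw = event.get("authorizationToken", "")
    token = raw[len("Bearer "):] if raw.startswith("Bearer ") else raw
    principal = KNOWN_TOKENS.get(token)
    if principal is None:
        return _policy("anonymous", "Deny", event["methodArn"])
    return _policy(principal, "Allow", event["methodArn"])
```

The returned policy is scoped to the `methodArn` of the request being authorized, so a valid token grants access to that method only.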

API Gateway Deployment Strategies

Deploying APIs to Multiple Stages:

- This strategy involves deploying your APIs to different stages, such as development, testing, staging, and production. Each stage serves a specific purpose in the software development lifecycle.

- Development stage: Used by developers for coding, testing, and debugging.

- Testing stage: Where QA engineers perform comprehensive testing to identify and fix bugs.

- Staging stage: Resembles the production environment closely and serves as a final checkpoint before deploying changes to production.

- Production stage: The live environment where the API serves actual users.

- Deploying APIs to multiple stages allows for thorough testing and validation before changes are promoted to production, reducing the risk of introducing bugs or issues that may impact end-users.

Canary Deployments:

- Canary deployments involve gradually rolling out changes to a subset of users or traffic before deploying them to the entire user base.

- In the context of API Gateway, a canary deployment might involve routing a small percentage of incoming API requests to a new version of the API while the majority of traffic continues to be served by the existing version.

- This approach allows for monitoring the performance and behavior of the new version in a real-world scenario, enabling early detection of potential issues or regressions.

- If the canary deployment is successful and the new version performs as expected, the rollout can be expanded to include the entire user base. If issues arise, the deployment can be rolled back with minimal impact.
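For REST APIs, a canary can be started by creating a deployment with canary settings on an existing stage. The following is a minimal sketch assuming the boto3 `apigateway` client; the helper names and the 10 percent default are illustrative choices.

```python
def canary_deployment_args(rest_api_id, stage_name, percent_traffic):
    """Build the arguments for a deployment that sends part of the traffic to the new version."""
    if not 0.0 <= percent_traffic <= 100.0:
        raise ValueError("percent_traffic must be between 0 and 100")
    return {
        "restApiId": rest_api_id,
        "stageName": stage_name,
        "canarySettings": {
            "percentTraffic": float(percent_traffic),
            # Keep canary responses out of the stage cache so results are not mixed.
            "useStageCache": False,
        },
    }


def start_canary(client, rest_api_id, stage_name, percent_traffic=10.0):
    """`client` is expected to be boto3.client("apigateway")."""
    return client.create_deployment(**canary_deployment_args(rest_api_id, stage_name, percent_traffic))
```

Starting small and raising `percent_traffic` in steps, while watching error and latency metrics for the canary, keeps the number of users exposed to a faulty release low.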

Blue/Green Deployments:

- Blue/green deployments involve maintaining two identical production environments: one active (blue) and the other idle (green).

- The active environment serves live traffic while the idle environment remains dormant.

- When deploying changes, the new version of the API is deployed to the idle environment (green) and thoroughly tested to ensure it functions correctly.

- Once validated, traffic is switched from the active environment (blue) to the newly deployed version in the idle environment (green), making it the new active environment.

- This approach minimizes downtime and risk because the switch between environments is instantaneous, and the previous version remains intact in case of issues, allowing for quick rollback if necessary.

Monitoring and Logging

- Monitoring API usage and performance: This involves tracking metrics that show how your API is being used and how well it performs. For API Gateway these include request count, latency, integration latency, 4XX and 5XX error counts, and cache hits and misses. Because API Gateway is fully managed, it does not expose host-level metrics such as CPU or memory; track those on your backend services instead. By monitoring these metrics, you can gain insights into the health and efficiency of your API, identify potential bottlenecks or issues, and make informed decisions about scaling or optimizing your backend.

- Setting up CloudWatch alarms: Amazon CloudWatch is the monitoring service provided by AWS. CloudWatch allows you to set up alarms that fire when a metric crosses a threshold you define. For example, you might set up an alarm to notify you if the 5XX error count of your API exceeds a certain threshold or if latency rises above a certain level. These alarms can trigger automated responses or notifications, allowing you to address issues before they affect more users.

- Logging API requests and responses: Logging involves recording detailed information about each request made to your API and the corresponding response. This can include things like timestamps, request parameters, headers, response codes, and payloads. By logging this information, you can track the flow of requests through your system, troubleshoot issues when they arise, and analyze usage patterns over time. Logging can also be invaluable for auditing and compliance purposes, as it provides a detailed record of all API activity.
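An alarm on server-side errors can be defined in a few lines. The sketch below builds the arguments for the boto3 CloudWatch `put_metric_alarm` call for a REST API. The alarm name pattern, the threshold of 5 errors per 5 minutes, and the helper name are assumptions to adapt.

```python
def error_alarm_args(api_name, stage, threshold=5, sns_topic_arn=None):
    """Build put_metric_alarm arguments for the 5XXError metric of one API stage."""
    args = {
        "AlarmName": f"{api_name}-{stage}-5xx",
        "Namespace": "AWS/ApiGateway",
        "MetricName": "5XXError",
        "Dimensions": [
            {"Name": "ApiName", "Value": api_name},
            {"Name": "Stage", "Value": stage},
        ],
        "Statistic": "Sum",
        "Period": 300,             # seconds per evaluation window
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        # No requests means no datapoints; do not treat that as a failure.
        "TreatMissingData": "notBreaching",
    }
    if sns_topic_arn:
        args["AlarmActions"] = [sns_topic_arn]
    return args
```

The alarm would be created with `boto3.client("cloudwatch").put_metric_alarm(**error_alarm_args("hello-api", "prod"))`. Passing an SNS topic ARN adds a notification when the alarm fires.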

API Gateway Security Best Practices

Securing APIs with SSL/TLS:

SSL/TLS (Secure Sockets Layer/Transport Layer Security) protocols are fundamental in securing communications between clients and servers over the internet. By encrypting the data exchanged between them, SSL/TLS ensures confidentiality and integrity, preventing eavesdropping and tampering.

- TLS Configuration: Configure your API Gateway to enforce the use of TLS for all incoming requests. This ensures that data transmitted between clients and the API Gateway is encrypted.

- SSL Certificate Management: Ensure that the SSL/TLS certificates for your custom domain names are properly managed, renewed before expiration, and issued by trusted Certificate Authorities (CAs). Treat certificate pinning with care: pinning a specific certificate in clients breaks them when that certificate is renewed or rotated.

- Strong Cipher Suites: Utilize strong cipher suites to secure the TLS connections. For custom domain names, choose a security policy that enforces a modern minimum TLS version. Avoid outdated and vulnerable protocols such as SSLv2 and SSLv3 anywhere in the request path, and prefer cipher suites based on AES (Advanced Encryption Standard) and elliptic curve cryptography (ECC).

Implementing Input Validation:

Input validation is crucial for preventing a wide range of security vulnerabilities such as injection attacks, buffer overflows, and cross-site scripting (XSS).

- Data Validation Filters: Integrate input validation filters within your API Gateway to inspect incoming requests for malicious or malformed input. Implement filters to validate input parameters, headers, and payloads according to predefined rules and constraints.

- Sanitization Techniques: Employ sanitization techniques to cleanse input data of potentially harmful characters or payloads. Prefer allowlist validation, which accepts only known, safe inputs. Denylist validation, which rejects known malicious inputs, is a weaker supplement because it cannot anticipate new attack patterns.

- Regular Expression (RegEx) Patterns: Leverage regular expressions to define patterns for valid input formats. Use RegEx to validate inputs such as email addresses, URLs, and other structured data formats.
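The allowlist idea can be sketched with regular expressions as follows. The field names `user_id` and `email` and the patterns themselves are illustrative; the email pattern is deliberately simple and does not cover every valid address.

```python
import re

# Allowlist patterns: anything that does not match in full is rejected.
USER_ID_RE = re.compile(r"[a-z0-9_]{3,32}")
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)+")


def validate_signup(params: dict) -> list:
    """Return the names of the fields that fail validation (assumes string values)."""
    errors = []
    if not USER_ID_RE.fullmatch(params.get("user_id", "")):
        errors.append("user_id")
    if not EMAIL_RE.fullmatch(params.get("email", "")):
        errors.append("email")
    return errors
```

Using `fullmatch` rather than `search` matters here: it requires the whole value to fit the pattern, so a valid prefix followed by an injected payload is still rejected.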

Protecting Against Common Security Threats:

- Injection Attacks: Protect against SQL injection, NoSQL injection, and command injection by employing parameterized queries, prepared statements, and input validation.

- Cross-Site Scripting (XSS): Implement output encoding to prevent XSS attacks. Encode user-generated content before rendering it in HTML, JavaScript, or other contexts prone to XSS vulnerabilities.

- Cross-Site Request Forgery (CSRF): Mitigate CSRF attacks by incorporating anti-CSRF tokens into your API Gateway’s authentication and session management mechanisms.

- Denial of Service (DoS) Attacks: Implement rate limiting, throttling, and request validation to mitigate the risk of DoS attacks targeting your API endpoints.

- API Key Management: Securely manage and rotate API keys to prevent unauthorized access and misuse of your APIs. Utilize HMAC (Hash-based Message Authentication Code) signatures for API key verification and integrity protection.
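The HMAC approach mentioned above can be sketched with the standard library. The message layout (method, path, and body joined by newlines) is an assumption made for this example; client and server only need to agree on one layout. A production scheme would usually also sign a timestamp to limit replay.

```python
import hashlib
import hmac


def sign(secret: bytes, method: str, path: str, body: bytes) -> str:
    """Compute a hex HMAC-SHA256 signature over the request's method, path, and body."""
    message = method.upper().encode() + b"\n" + path.encode() + b"\n" + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, method: str, path: str, body: bytes, signature: str) -> bool:
    """Recompute the signature and compare in constant time."""
    expected = sign(secret, method, path, body)
    return hmac.compare_digest(expected, signature)
```

The client sends the signature alongside the request, and the server recomputes it with the shared secret. `hmac.compare_digest` is used instead of `==` so the comparison time does not leak how many leading characters matched.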

Scaling and Performance Optimization

Horizontal Scaling:

- Horizontal scaling, also known as scale-out, involves adding more machines or instances to your system to distribute the workload.

- This approach typically involves deploying multiple copies of the application across different servers or virtual machines.

- Load balancers are often used to distribute incoming requests among these instances, ensuring even distribution of workload.

- Horizontal scaling allows for handling increased traffic and provides better fault tolerance since the failure of one instance doesn’t bring down the entire system.

Caching Strategies:

- Caching involves storing frequently accessed data in a fast-access storage layer to reduce the need to fetch the same data from slower, persistent storage.

- Common caching strategies include in-memory caching, where data is stored in RAM for ultra-fast access, and distributed caching, where cached data is shared across multiple nodes.

- Caching can be applied at various levels of the application stack, including database query caching, object caching, and full-page caching.

- By effectively implementing caching strategies, you can significantly reduce the load on backend resources and improve overall system performance.
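The core of an in-memory cache with expiry can be sketched in a few lines. The class name `TTLCache` and its `get_or_load` method are hypothetical; the injectable `clock` exists only to make the behavior testable without waiting.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after a fixed time to live."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expiry time, value)

    def get_or_load(self, key, loader):
        """Return the cached value, or call loader(key) and cache the result."""
        now = self._clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]
        value = loader(key)  # the slow path, e.g. a backend call
        self._store[key] = (now + self._ttl, value)
        return value
```

The time to live is the main tuning knob: a longer value offloads more traffic from the backend but serves staler data, which is the same trade-off you face when configuring a stage cache in API Gateway.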

Fine-Tuning Performance Parameters:

- Fine-tuning involves optimizing various parameters and configurations within your system to achieve the best possible performance.

- This includes tuning database settings such as buffer sizes, connection pool settings, and query optimization.

- It also involves optimizing web server configurations, such as adjusting thread pool sizes, connection timeouts, and request handling mechanisms.

- Monitoring and profiling tools are often used to identify performance bottlenecks and guide the fine-tuning process.

- Fine-tuning is an ongoing process and requires continuous monitoring and adjustment to adapt to changing workloads and system conditions.

Conclusion:

AWS API Gateway is a versatile and feature-rich service that simplifies the process of building, deploying, and managing APIs in the AWS cloud. By understanding its capabilities and applying the practices covered in this guide (careful API design, appropriate authentication and authorization, staged and canary deployments, monitoring, and caching), developers can build scalable, secure, and high-performance applications.

The benefits of API Gateway include reduced development effort, scalability, security features (like authorization and authentication), monitoring, and logging capabilities.

API Gateway provides various security mechanisms like IAM roles and policies, API keys, Lambda authorizers, and OAuth 2.0.

API Gateway integrates with various AWS services like AWS Lambda, Amazon DynamoDB, Amazon S3, Amazon SNS, and more.

REST APIs are used for traditional HTTP requests, while WebSocket APIs enable real-time two-way communication between clients and servers over a long-lived connection.

API Gateway provides built-in monitoring and logging features through Amazon CloudWatch, which allows you to track API usage, performance, and errors.