Introduction

Google Cloud Platform (GCP) offers a scalable foundation for running Kubernetes clusters. Kubernetes, an open-source container orchestration platform, has become the de facto standard for automating the deployment, scaling, and management of containerized applications. This guide explores the main aspects of creating and managing Kubernetes clusters on GCP.

Understanding Kubernetes and GCP

What is Kubernetes?

Kubernetes is an open-source container orchestration platform. Containers are lightweight, portable units that package an application together with its dependencies, so the application behaves consistently across environments. Kubernetes automates tasks such as load balancing, scaling, and rolling updates, which improves resource utilization and application resilience and supports the development of cloud-native applications.

Why GCP for Kubernetes?

GCP provides infrastructure and a set of services designed for running Kubernetes, making it a strong choice for organizations that need a reliable and scalable cloud environment. Its networking, security, and observability features integrate closely with Kubernetes, and users can run their clusters on managed services such as Google Kubernetes Engine (GKE).

GCP Kubernetes Services

Google Kubernetes Engine (GKE)

Google Kubernetes Engine (GKE) is a fully managed Kubernetes service on GCP, simplifying the deployment, management, and scaling of containerized applications. GKE automates operational tasks, such as cluster upgrades and node management, allowing developers to focus on building and deploying applications. It provides a reliable and scalable infrastructure, ensuring high availability and performance. GKE integrates with other GCP services, such as Google Cloud Monitoring and Logging, to streamline observability and troubleshooting.

Key Features

Key features of GKE include automatic scaling, multi-cluster support, and seamless integration with Google Cloud’s security and networking capabilities. GKE also supports the deployment of applications using standard Kubernetes manifests, enabling portability across different Kubernetes environments. Google’s deep expertise in managing large-scale containerized workloads is reflected in GKE, making it a trusted choice for enterprises seeking a production-ready Kubernetes platform.

Benefits of GKE

The benefits of GKE include simplified cluster management, automatic scaling based on workload demand, and built-in security features. Automated upgrades and node auto-repair help keep clusters available without manual intervention. The result is a consistent environment for deploying applications, which lets teams spend more time on development and less on operations.

Kubernetes on Compute Engine

For users who require more control over the Kubernetes cluster infrastructure, GCP offers the option to deploy Kubernetes on Compute Engine. This approach allows for custom configurations and optimizations tailored to specific application requirements. While it requires more manual management compared to GKE, it provides greater flexibility and control over the underlying infrastructure.

Anthos: Extending Kubernetes Across Clouds

Anthos is a hybrid and multi-cloud platform from Google Cloud that extends Kubernetes across different environments, including on-premises data centers and other cloud providers. Anthos provides a consistent and unified platform for managing applications across diverse landscapes, simplifying operations and enabling organizations to embrace a multi-cloud strategy. With Anthos, users can deploy, manage, and scale containerized applications seamlessly, regardless of the underlying infrastructure, promoting agility and reducing vendor lock-in concerns.

Setting Up Your GCP Environment

Creating a GCP Account

Setting up a Google Cloud Platform (GCP) account is the initial step in leveraging its powerful cloud services. Users must navigate through the account creation process, providing necessary information and agreeing to terms and conditions. This step ensures access to a wide array of GCP resources and functionalities.

Setting Up GCP Billing

Once the GCP account is established, configuring billing settings becomes crucial. Users need to associate a billing account with their GCP project to enable the usage of paid services. This involves providing payment information, setting budgets, and managing access permissions. Proper billing setup is vital for tracking resource consumption and avoiding unforeseen charges.

Installing and Configuring the Google Cloud SDK

The Google Cloud SDK (its command-line tooling is now also called the Google Cloud CLI) is a set of tools, including the `gcloud` command, for interacting with GCP services from the terminal. Setup involves downloading and installing the SDK, authenticating with a Google account, and selecting a default project. This step is a prerequisite for managing and deploying resources on GCP from the command line.
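As a rough sketch of the configuration step described above, the snippet below assembles the `gcloud` commands commonly run after installing the SDK. The project ID `my-project` and the zone `us-central1-a` are placeholder assumptions, not values from this guide, and the script only prints the commands rather than executing them.

```python
import shlex


def sdk_setup_commands(project_id, zone):
    """Return gcloud commands that authenticate and set default project/zone."""
    return [
        ["gcloud", "auth", "login"],
        ["gcloud", "config", "set", "project", project_id],
        ["gcloud", "config", "set", "compute/zone", zone],
    ]


# Placeholder values (assumptions): replace with your own project and zone.
for cmd in sdk_setup_commands("my-project", "us-central1-a"):
    print(shlex.join(cmd))
```

Printing the commands first makes it easy to review them before pasting them into a terminal.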

Creating a Kubernetes Cluster on GKE

Using GCP Console

Creating a Kubernetes cluster on Google Kubernetes Engine (GKE) can be done via the user-friendly GCP Console. This graphical interface simplifies the process, allowing users to specify cluster details, such as the number of nodes and machine types, through intuitive forms. It provides a visual representation of the cluster setup, making it accessible for users with varying levels of technical expertise.

Command-Line Interface (gcloud)

For users who prefer the terminal, the `gcloud` command-line tool offers a powerful alternative. It can create and manage GKE clusters with single commands, which makes cluster configuration easy to script, review, and repeat. This approach suits users who are comfortable with the command line and want finer control over cluster settings.
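To make the command-line route concrete, here is a minimal sketch that builds a `gcloud container clusters create` command and the follow-up `get-credentials` command that points `kubectl` at the new cluster. The cluster name, zone, node count, and machine type are illustrative assumptions; the script prints the commands instead of running them.

```python
import shlex


def create_cluster_command(name, zone, num_nodes=3, machine_type="e2-medium"):
    """Build the gcloud command that creates a zonal GKE cluster."""
    return [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,
        "--num-nodes", str(num_nodes),
        "--machine-type", machine_type,
    ]


def get_credentials_command(name, zone):
    """Build the gcloud command that writes kubectl credentials for the cluster."""
    return ["gcloud", "container", "clusters", "get-credentials", name, "--zone", zone]


# Hypothetical cluster name and zone, chosen only for illustration.
print(shlex.join(create_cluster_command("demo-cluster", "us-central1-a")))
print(shlex.join(get_credentials_command("demo-cluster", "us-central1-a")))
```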

Advanced Configuration Options

Node Pools

A node pool is a group of nodes within a GKE cluster that share the same configuration. Creating several node pools lets a single cluster mix nodes with different characteristics, such as different machine types or specialized hardware, so that each workload can be scheduled onto the kind of node that suits it.
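The following sketch shows how an additional node pool might be added to an existing cluster with `gcloud container node-pools create`. The pool name `high-mem-pool`, the cluster name, and the machine type are hypothetical values picked for illustration.

```python
import shlex


def create_node_pool_command(pool, cluster, zone, machine_type, num_nodes=1):
    """Build the gcloud command that adds a node pool to an existing cluster."""
    return [
        "gcloud", "container", "node-pools", "create", pool,
        "--cluster", cluster,
        "--zone", zone,
        "--machine-type", machine_type,
        "--num-nodes", str(num_nodes),
    ]


# Hypothetical names: a memory-optimised pool alongside the default pool.
print(shlex.join(create_node_pool_command(
    "high-mem-pool", "demo-cluster", "us-central1-a", "e2-highmem-4", num_nodes=2)))
```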

Node Auto-provisioning

Node auto-provisioning extends the cluster autoscaler by creating and deleting entire node pools automatically. Instead of only resizing the pools an operator has defined, GKE can add a new pool whose machine shape fits pending workloads and remove it when it is no longer needed, which helps keep resource utilization and cost in balance.

Cluster Autoscaler

The cluster autoscaler automatically resizes the node pools in a cluster. It adds nodes when pods cannot be scheduled because the existing nodes lack capacity for their resource requests, and it removes nodes that are underutilized and whose pods can run elsewhere. Operators set a minimum and maximum size per node pool, and the autoscaler works within those bounds to handle varying workloads while limiting cost.
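As an illustration, the sketch below builds a `gcloud container clusters update` command that enables autoscaling on one node pool with explicit bounds. The names and limits are assumptions, and the small bounds check is an addition for this example rather than something `gcloud` requires you to write.

```python
import shlex


def enable_autoscaling_command(cluster, pool, zone, min_nodes, max_nodes):
    """Build the gcloud command that enables autoscaling on a node pool."""
    if min_nodes < 0 or max_nodes < min_nodes:
        raise ValueError("expected 0 <= min_nodes <= max_nodes")
    return [
        "gcloud", "container", "clusters", "update", cluster,
        "--enable-autoscaling",
        "--node-pool", pool,
        "--min-nodes", str(min_nodes),
        "--max-nodes", str(max_nodes),
        "--zone", zone,
    ]


# Hypothetical cluster and pool names; bounds chosen only for illustration.
print(shlex.join(enable_autoscaling_command(
    "demo-cluster", "default-pool", "us-central1-a", 1, 5)))
```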

Deploying Applications on GKE

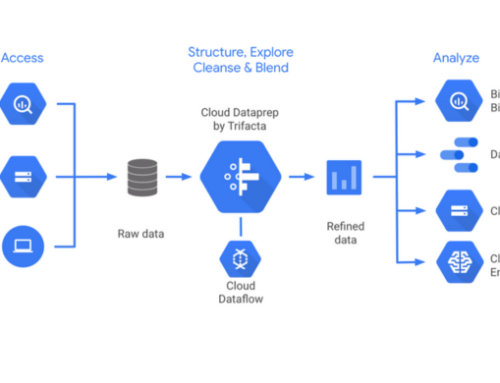

Deploying applications on Google Kubernetes Engine (GKE) involves several key steps to ensure a smooth and efficient process. In this context, containerization is a fundamental practice that enhances the portability and scalability of applications.

Containerizing Applications

- Building Docker Images

Containerizing applications begins with building Docker images. Docker provides a standardized format to package applications and their dependencies, ensuring consistency across different environments. Developers create Dockerfiles, which contain instructions for building images. These files specify the base image, application code, dependencies, and configuration settings. The Docker build process compiles these instructions into a lightweight and portable container image.
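To show what such a Dockerfile might contain, the sketch below renders a minimal one as text. It assumes a Python application with a `requirements.txt` and an `app.py` entry point; those file names and the `python:3.12-slim` base image are illustrative assumptions, not details from this guide.

```python
def render_dockerfile(base_image="python:3.12-slim", entrypoint="app.py"):
    """Return the text of a minimal Dockerfile for a small Python app."""
    lines = [
        f"FROM {base_image}",                 # base image
        "WORKDIR /app",                       # working directory in the image
        "COPY requirements.txt .",            # copy the dependency list first for layer caching
        "RUN pip install --no-cache-dir -r requirements.txt",
        "COPY . .",                           # application code
        f'CMD ["python", "{entrypoint}"]',    # default start command
    ]
    return "\n".join(lines) + "\n"


print(render_dockerfile())
```

Writing the output to a file named `Dockerfile` and running `docker build -t my-app .` in the same directory would then produce the image.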

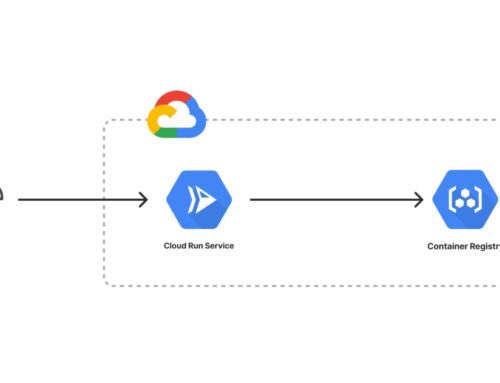

- Storing Images in Container Registry

Once Docker images are built, they need to be stored in a central repository so that clusters can pull them. Google Cloud provides Container Registry, a fully managed Docker image storage service, for this purpose. Note that Google has since deprecated Container Registry in favor of Artifact Registry, so new projects should check which service applies to them. In either case, the registry lets developers store and manage images securely, and GKE clusters pull images from it during deployment.
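As a small sketch of the push workflow, the code below composes a Container Registry image reference of the form `gcr.io/PROJECT_ID/IMAGE:TAG` and the commands typically used to authenticate Docker, build, and push. The project and image names are placeholders. Artifact Registry uses a different path shape (`LOCATION-docker.pkg.dev/PROJECT_ID/REPOSITORY/IMAGE:TAG`), which this sketch does not cover.

```python
import shlex


def image_reference(project_id, image, tag="latest", host="gcr.io"):
    """Compose a Container Registry style image reference."""
    return f"{host}/{project_id}/{image}:{tag}"


def build_and_push_commands(ref, context="."):
    """Return the commands that authenticate Docker, build, and push an image."""
    return [
        ["gcloud", "auth", "configure-docker"],
        ["docker", "build", "-t", ref, context],
        ["docker", "push", ref],
    ]


# Placeholder project and image names (assumptions).
ref = image_reference("my-project", "web", "1.0.0")
for cmd in build_and_push_commands(ref):
    print(shlex.join(cmd))
```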

Deploying Pods and Services

- YAML Manifests

Deploying applications on GKE involves defining the desired state of the system using YAML manifests. These manifests describe the configuration of Kubernetes resources, including pods and services. Pods are the smallest deployable units in Kubernetes and encapsulate one or more containers. Services give a set of pods a stable network endpoint and load-balance traffic across them. The manifests specify details such as container images, resource requirements, and networking settings.

In the context of GKE, YAML manifests serve as a declarative blueprint for the desired application architecture. Developers define the structure and relationships of pods and services, and Kubernetes ensures that the actual state matches this declared state. This declarative approach simplifies application deployment and management, allowing for easy scaling, updating, and rollback of applications running on GKE.
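The declarative model described above can be sketched by generating a Deployment and a Service from Python. The output here is JSON rather than YAML because the standard library has no YAML writer; `kubectl apply -f` accepts JSON manifests as well. The application name `web`, the image path, and the port numbers are assumptions for illustration.

```python
import json


def deployment_manifest(name, image, replicas=2, port=8080):
    """Return a Deployment that runs `replicas` pods of the given image."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                    "resources": {"requests": {"cpu": "100m", "memory": "128Mi"}},
                }]},
            },
        },
    }


def service_manifest(name, port=80, target_port=8080):
    """Return a LoadBalancer Service that routes to pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Hypothetical app name and image; bundle both resources in one List object.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        deployment_manifest("web", "gcr.io/my-project/web:1.0.0"),
        service_manifest("web"),
    ],
}
print(json.dumps(bundle, indent=2))
```

Saving the printed output to a file such as `manifests.json` and running `kubectl apply -f manifests.json` would ask Kubernetes to converge on this declared state.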

In summary, deploying an application on GKE starts with building a Docker image and pushing it to a registry that the cluster can reach. The deployment itself is then described in YAML manifests that declare the desired pods and services. This structured approach keeps deployments consistent, scalable, and easy to manage.

Managing and Scaling Kubernetes Clusters

Managing and scaling Kubernetes clusters is central to running containerized applications efficiently. It involves tasks such as monitoring, logging, autoscaling workloads, and handling updates and rollbacks.

Monitoring and Logging

Effective monitoring and logging are essential for understanding the performance and health of a Kubernetes cluster. GKE integrates with Google Cloud's operations suite (formerly Stackdriver), which includes Cloud Monitoring, Cloud Logging, and Cloud Trace. Together these services give near real-time visibility into the cluster's metrics, logs, and traces, allowing operators to identify and address issues proactively.

Additionally, the integration of Prometheus and Grafana enhances the monitoring capabilities of Kubernetes clusters. Prometheus, an open-source monitoring and alerting toolkit, collects metrics from configured targets. Grafana, a visualization and analytics platform, complements Prometheus by providing a user-friendly interface for creating dashboards and alerts based on the collected metrics. Together, these tools offer a robust solution for monitoring the cluster’s performance and ensuring its reliability.

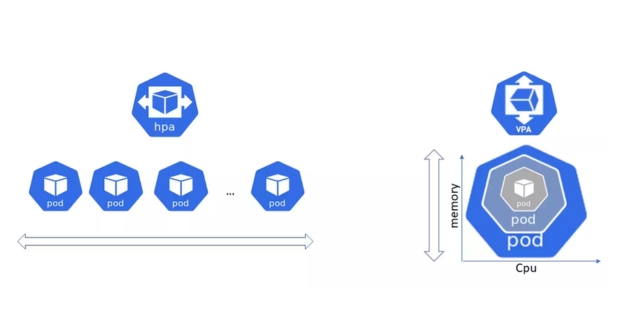

Autoscaling Workloads

Autoscaling is a fundamental feature for efficiently managing resources in a Kubernetes cluster. It involves dynamically adjusting the number of running instances of a workload based on the current demand. This capability ensures optimal resource utilization and responsiveness to varying workloads. Kubernetes supports Horizontal Pod Autoscaling (HPA), which automatically adjusts the number of pods in a deployment or replica set based on observed metrics.
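The core of the Horizontal Pod Autoscaler's decision can be sketched with the formula given in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). The function below is a simplified model, assuming a single metric and the default 10% tolerance; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, which are omitted here. The `hpa_manifest` helper and its default values are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA calculation for one metric (assumption: no readiness logic)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas          # close enough to target: do nothing
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


def hpa_manifest(deployment, min_replicas=1, max_replicas=10, cpu_percent=60):
    """Return an autoscaling/v2 HPA targeting average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{"type": "Resource", "resource": {
                "name": "cpu",
                "target": {"type": "Utilization", "averageUtilization": cpu_percent},
            }}],
        },
    }


# 4 pods at 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(4, 90, 60))
```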

By leveraging autoscaling, operators can optimize resource allocation, reduce costs, and improve the overall performance of applications running on Kubernetes. This dynamic scaling approach is particularly beneficial for applications with varying traffic patterns throughout the day or in response to specific events.

Updating and Rolling Back Deployments

Updating and rolling back deployments are critical processes for keeping applications on a Kubernetes cluster stable and reliable. Kubernetes allows new versions of an application to be deployed with little or no downtime. The default strategy, a rolling update, gradually replaces existing pods with new ones so that some instances remain available to serve traffic throughout the change.

In the event of issues arising from a new deployment, the ability to roll back to a previous version is crucial. Kubernetes provides a straightforward rollback mechanism that enables operators to revert to a previous known-good state quickly. This rollback capability ensures that any unforeseen issues can be addressed promptly, minimizing the impact on users and maintaining the overall health of the application.
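The update and rollback flow above maps onto a handful of `kubectl` commands. The sketch below assembles them for a hypothetical deployment named `web` whose container is also called `web`; the names and image tag are assumptions, and the script prints the commands instead of running them.

```python
import shlex


def rollout_commands(deployment, container, new_image):
    """Return kubectl commands for updating, watching, and rolling back."""
    target = f"deployment/{deployment}"
    return {
        "update": ["kubectl", "set", "image", target, f"{container}={new_image}"],
        "status": ["kubectl", "rollout", "status", target],
        "history": ["kubectl", "rollout", "history", target],
        "rollback": ["kubectl", "rollout", "undo", target],
    }


# Hypothetical deployment, container, and image tag.
for step, cmd in rollout_commands("web", "web", "gcr.io/my-project/web:1.0.1").items():
    print(f"{step}: {shlex.join(cmd)}")
```

A typical sequence is `update`, then `status` to watch the rollout, and `rollback` only if the new version misbehaves.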

Effective management and scaling of Kubernetes clusters involve a comprehensive approach to monitoring, logging, autoscaling, and deployment updates. These practices contribute to the resilience, performance, and reliability of containerized applications in a dynamic and ever-changing environment.

Security Best Practices

Identity and Access Management (IAM)

Identity and Access Management (IAM) is a critical component of any secure cloud infrastructure. IAM ensures that only authorized users and services have access to resources and data. It involves defining and managing roles, permissions, and policies to control who can perform certain actions within a Google Cloud Platform (GCP) environment. By adhering to IAM best practices, organizations can minimize the risk of unauthorized access and potential security breaches.
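As one example of granting narrowly scoped access, the sketch below builds a `gcloud projects add-iam-policy-binding` command. The project ID and e-mail address are placeholders, `roles/container.developer` is used only as an example of a predefined GKE role, and the member-prefix check is an extra guard added for this sketch.

```python
import shlex

# Member prefixes accepted by this sketch (an assumption; IAM supports more types).
ALLOWED_PREFIXES = ("user:", "group:", "serviceAccount:")


def grant_role_command(project_id, member, role):
    """Build the gcloud command that binds a role to a member on a project."""
    if not member.startswith(ALLOWED_PREFIXES):
        raise ValueError(f"member must start with one of {ALLOWED_PREFIXES}")
    return [
        "gcloud", "projects", "add-iam-policy-binding", project_id,
        f"--member={member}",
        f"--role={role}",
    ]


# Placeholder project and user.
print(shlex.join(grant_role_command(
    "my-project", "user:dev@example.com", "roles/container.developer")))
```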

Network Policies

Network policies secure a GKE cluster by controlling the flow of traffic between workloads. A Kubernetes network policy defines which pods may communicate with each other and with other network endpoints. Properly configured policies help prevent unauthorized access, limit exposure to potential threats, and strengthen the overall security posture. Best practice is to apply the principle of least privilege, allowing only the traffic a workload needs, and to use segmentation to isolate sensitive workloads.
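A least-privilege setup is often sketched as two Kubernetes NetworkPolicy objects: one that denies all ingress in a namespace by default, and one that re-allows a specific path. The labels `app: frontend` and `app: backend`, the namespace, and port 8080 are assumptions for illustration. Policies only take effect when the cluster has network policy enforcement enabled.

```python
import json


def default_deny_ingress(namespace):
    """Return a policy that selects every pod and allows no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace, to_app, from_app, port):
    """Return a policy letting pods labelled from_app reach to_app on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{to_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": to_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical namespace and labels.
policies = [default_deny_ingress("prod"), allow_ingress("prod", "backend", "frontend", 8080)]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```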

Securing Container Images

In a containerized environment, securing container images is crucial to prevent vulnerabilities and ensure the integrity of applications. This involves regularly updating and patching images, scanning for security vulnerabilities, and leveraging container security tools. Following best practices for securing container images helps mitigate risks associated with outdated software components and potential exploits, contributing to a more robust and resilient system.

GKE Autopilot: A Fully Managed Option

Google Kubernetes Engine (GKE) Autopilot is a fully managed mode of operation that automates many aspects of cluster management, reducing the operational burden on users. Autopilot can improve security by automating updates, patches, and node scaling, which helps keep the underlying infrastructure current. This managed approach allows organizations to focus more on application development and less on the intricacies of cluster management.

Integrating with Other GCP Services

Cloud Storage

Cloud Storage is a scalable and secure object storage service in GCP. When integrating with Cloud Storage, best practices include configuring appropriate access controls, encryption, and versioning. Properly managing permissions and regularly auditing access to storage buckets helps prevent unauthorized data access and ensures data integrity.

Cloud SQL

Cloud SQL provides a fully managed relational database service. Integrating with Cloud SQL involves securing database connections, implementing role-based access control, and enabling encryption at rest and in transit. Following these best practices helps safeguard sensitive data stored in databases and ensures compliance with security standards.

Cloud Pub/Sub

Cloud Pub/Sub is a messaging service that enables communication between independent applications. Integrating with Cloud Pub/Sub involves setting up secure topics and subscriptions, implementing authentication and authorization mechanisms, and monitoring for suspicious activities. Adhering to these best practices ensures the confidentiality and integrity of messages exchanged between services.

Cloud CDN

Cloud CDN enhances the performance and security of web applications by caching content at globally distributed edge locations. Integrating with Cloud CDN involves configuring caching policies, optimizing content delivery, and implementing security measures such as HTTPS. By following best practices for Cloud CDN, organizations can improve the user experience while protecting against common web security threats.

Troubleshooting and Debugging

GCP Diagnostics

Diagnostics are central to troubleshooting on GCP. The platform offers tools for analyzing the health and performance of hosted services, including log inspection, metric monitoring, and specialized diagnostic utilities. Used together, they reveal how a system is behaving, help pinpoint bottlenecks, and shorten the time needed to resolve an issue.

Kubernetes Debugging Tools

Kubernetes has its own set of debugging tools. They range from the `kubectl` command-line utility, which can inspect resources, read logs, and list events, to more advanced tools like kube-diff and kube-state-metrics. Each serves a distinct purpose, and knowing when to use which one helps administrators and developers troubleshoot issues and tune the performance of their clusters.
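A first-pass investigation of a misbehaving pod with `kubectl` might look like the sketch below. The pod name and namespace are hypothetical, and the order of commands is one reasonable sequence rather than a prescribed procedure.

```python
import shlex


def pod_debug_commands(pod, namespace="default"):
    """Return kubectl commands for a first look at a failing pod."""
    ns = ["-n", namespace]
    return [
        ["kubectl", "get", "pods", *ns],                  # overall pod status
        ["kubectl", "describe", "pod", pod, *ns],         # conditions and recent events
        ["kubectl", "logs", pod, *ns],                    # current container logs
        ["kubectl", "logs", pod, *ns, "--previous"],      # logs from the last crashed container
        ["kubectl", "get", "events", *ns,
         "--sort-by=.metadata.creationTimestamp"],        # namespace events, oldest first
    ]


# Hypothetical pod name and namespace.
for cmd in pod_debug_commands("web-5d9c7b6f4-abcde", "prod"):
    print(shlex.join(cmd))
```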

Common Issues and Solutions

Common problems in GCP and Kubernetes environments include network misconfigurations, resource constraints, and application-specific errors. Knowing these categories in advance helps users recognize symptoms quickly and narrow down the cause. Pairing troubleshooting with preventative measures, such as setting resource requests and reviewing network rules before deployment, makes for a more resilient and reliable system.

Conclusion

GCP provides an efficient environment for deploying and managing containerized applications at scale. From the initial setup of a GCP environment to deploying and operating applications on GKE, this guide has covered the essential aspects of Kubernetes cluster management. As cloud-native technologies evolve, GCP remains a strong option for enterprises seeking a reliable and scalable platform for their containerized workloads.