Introduction: Why Choose AWS for Data Analytics?

Ditch the Data Dilemma: Why AWS is the Cloud-Based Analytics Hero You Need

Do you feel like your data is buried in a labyrinth of servers, gathering dust and offering little value? Are you tired of the hefty upfront costs and rigid infrastructure of traditional data analytics solutions? Well, fret no more! Enter the cloud, and with it, the mighty AWS, your one-stop shop for unleashing the power of your data.

But why AWS, you ask? Let’s break it down:

- Ditch the Infrastructure Headaches:

Imagine a world where you don’t have to worry about physical servers, their maintenance, upgrades, or the space they take up. With AWS’s managed and serverless services, you focus on your data and leave the underlying infrastructure to the cloud. No more hardware procurement headaches, and far fewer patch cycles and scaling nightmares. Just pure, unadulterated data freedom.

- Scale with the Elasticity of a Superhero:

Need to handle a sudden surge in data traffic? No problem! Many AWS services scale automatically, and the rest let you add or remove capacity in minutes, so your resources adapt to your workload like a chameleon. Far less over-provisioning or under-resourcing. Just the right amount of power, always available, always ready to crunch your data.

- Pay Only for the Data Party You Throw:

Unlike traditional solutions that charge hefty upfront fees, AWS operates on a pay-as-you-go model. You only pay for the resources you use, meaning you’re not stuck with an expensive bill for unused servers. This flexibility makes AWS a budget-friendly option for businesses of all sizes, letting you invest more in your data insights and less in infrastructure.

- Unleash the Hidden Gems in Your Data:

Your data is a treasure trove waiting to be discovered. AWS empowers you to mine actionable insights from diverse sources, whether it’s structured data from your CRM, unstructured data from social media, or even real-time data from IoT devices. With AWS, no data is left behind, and every nugget of information can be transformed into valuable knowledge.

- Drive Data-Driven Decisions Across Your Organization:

Data is useless if it’s locked away in silos. AWS helps you democratize data by making it accessible and understandable across your entire organization. Imagine marketing teams using customer insights to personalize campaigns, product teams using data to improve user experience, and executives making strategic decisions based on real-time analytics. Everyone becomes a data-driven hero, empowered to make informed choices.

- Optimize Processes and Make Your Customers Smile:

Data is the key to unlocking operational efficiency and customer satisfaction. AWS lets you analyze your processes, identify bottlenecks, and optimize them for maximum performance. Want to reduce customer churn? Analyze customer behavior and tailor your offerings to their needs. Want to streamline your supply chain? Track inventory levels and optimize delivery routes. With AWS, data becomes your secret weapon for business optimization.

So, why choose AWS for data analytics? It’s not just a cloud platform; it’s your data liberation hero. It’s the key to unlocking the hidden potential of your data, driving smarter decisions, and ultimately, achieving business success. Are you ready to embrace the cloud and become a data-driven champion?

Building Your AWS Data Analytics Foundation: Laying the Bricks for Insightful Decisions

Think of your data as a vast, untapped resource. To unlock its potential, you need a sturdy foundation, a platform upon which you can build your data-driven empire. In the world of AWS data analytics, this foundation hinges on one crucial choice: Data Lake vs. Data Warehouse.

- Data Lake: Embrace the Limitless Ocean of Raw Data:

Imagine a boundless ocean, teeming with every type of data imaginable. Structured, unstructured, semi-structured – it’s all welcome in the expansive embrace of a Data Lake. This is where you dump all your data, raw and unfiltered, preserving its original form for future exploration. Think of it as a digital Noah’s Ark, safeguarding every data species for potential future analysis.

Benefits of the Data Lake:

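- Flexibility: No schema is required when data is written (a schema is applied when you read it, known as schema-on-read), so you can store anything and everything.

- Scalability: Accommodate massive data volumes without capacity planning; on AWS, data lakes are typically built on Amazon S3, which grows with your data.

- Future-proofing: Uncover hidden insights later, even for questions you haven’t defined yet.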

Ideal for:

- Data scientists and analysts exploring new possibilities.

- Businesses with diverse data sources and evolving needs.

- Organizations preparing for future AI and machine learning applications.
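To make schema-on-read concrete, here is a minimal plain-Python sketch (no AWS calls). It assumes raw events were landed in the lake as JSON lines with differing shapes; a schema is applied only when a consumer reads them. The sample records, field names, and the `read_with_schema` helper are hypothetical.

```python
import json

# Raw records as they might land in a data lake: shapes differ and nothing is rejected on write.
RAW_JSON_LINES = [
    '{"user_id": "u1", "event": "click", "ts": "2024-01-01T10:00:00Z"}',
    '{"user_id": "u2", "event": "purchase", "amount": 19.99}',
    '{"device": "sensor-7", "temperature_c": 21.5}',
]

def read_with_schema(lines, schema):
    """Apply a schema at read time: cast listed columns, fill gaps with None, ignore extras."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for column, cast in schema.items():
            value = record.get(column)
            row[column] = cast(value) if value is not None else None
        rows.append(row)
    return rows

# One consumer's view of the data: user events with an optional purchase amount.
purchase_schema = {"user_id": str, "event": str, "amount": float}
user_rows = [row for row in read_with_schema(RAW_JSON_LINES, purchase_schema) if row["user_id"] is not None]
```

A warehouse would instead enforce a schema at load time (schema-on-write) and reject or fix the sensor record up front; a different consumer could read the same raw lines with a sensor-oriented schema.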

- Data Warehouse: The Structured Symphony of Insights:

But sometimes, raw data needs a conductor. Enter the Data Warehouse, a meticulously curated repository where your data is transformed, organized, and primed for analysis. Imagine a beautifully arranged orchestra, each instrument polished and ready to play its part in the grand symphony of insights.

Benefits of the Data Warehouse:

- Performance: Optimized for fast querying and reporting, ideal for business intelligence.

- Security and Governance: Access control and data quality measures ensure reliable analysis.

- Collaboration: Shared platform for data-driven decision making across departments.

Ideal for:

- Generating reports and dashboards for business users.

- Answering specific, predefined business questions.

- Supporting data-driven decision making across the organization.

Choosing the Right Storage Option:

The decision between Data Lake and Data Warehouse isn’t a binary choice. It’s all about finding the right balance for your specific needs. Many organizations utilize both, with the Data Lake serving as the raw data reservoir and the Data Warehouse as the refined analysis hub. Think of it as a pipeline: data flows from the Data Lake, undergoes transformation, and then settles into the Data Warehouse, ready to be analyzed and transformed into actionable insights.
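The lake-to-warehouse flow can be sketched in a few lines. This is an illustration under stated assumptions, not an AWS implementation: the raw orders are hypothetical sample data, and an in-memory SQLite database stands in for a warehouse such as Redshift.

```python
import json
import sqlite3

# Raw zone: semi-structured order events as they arrived (hypothetical sample data).
raw_orders = [
    '{"order_id": 1, "customer": "acme", "amount": "120.50", "status": "shipped"}',
    '{"order_id": 2, "customer": "globex", "amount": "80", "status": "cancelled"}',
    '{"order_id": 3, "customer": "acme", "amount": "42.25", "status": "shipped"}',
]

def transform(lines):
    """Parse raw JSON, cast types, and keep only shipped orders."""
    for line in lines:
        rec = json.loads(line)
        if rec["status"] == "shipped":
            yield (rec["order_id"], rec["customer"], float(rec["amount"]))

# In-memory SQLite stands in for the warehouse: a fixed, typed schema.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE fact_orders ("
    "order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
)
warehouse.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", transform(raw_orders))
warehouse.commit()

# Analysts then answer predefined business questions against the curated table.
revenue_by_customer = dict(
    warehouse.execute(
        "SELECT customer, SUM(amount) FROM fact_orders GROUP BY customer ORDER BY customer"
    ).fetchall()
)
```

The raw lines stay untouched in the lake, so a later question (say, about cancellations) can be answered by re-running a different transformation.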

- Taming the Big Data Beast: The Powerhouse Tools at Your Disposal:

Now that you have your storage options in place, it’s time to unleash the beast of big data. AWS offers a powerful arsenal of tools to tackle any data challenge:

- Amazon Redshift: This fully managed, columnar data warehouse handles petabyte-scale analytics, spreading each SQL query across many nodes in parallel so you get answers fast.

- Amazon EMR: Need to run big data jobs on Hadoop, Spark, or other frameworks? EMR provides a managed platform for distributed data processing.

- Amazon Kinesis: Built for real-time data, Kinesis Data Streams ingests continuous data feeds and makes them available to your analytics pipelines and applications with low latency, giving you insights as they happen.
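Kinesis Data Streams decides where each record goes by taking the MD5 hash of its partition key as a 128-bit integer and picking the shard whose hash key range contains it. The sketch below models that routing locally. It assumes the shards split the key space evenly (as in a newly created stream; resharding can change the ranges), and the helper names and sensor keys are hypothetical.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value

def even_shard_ranges(shard_count):
    """Split the 128-bit hash key space into contiguous, evenly sized ranges."""
    step = HASH_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * step
        end = HASH_SPACE - 1 if i == shard_count - 1 else (i + 1) * step - 1
        ranges.append((start, end))
    return ranges

def shard_for_key(partition_key, ranges):
    """Return the index of the shard whose range contains MD5(partition_key)."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_key = int.from_bytes(digest, "big")
    for index, (start, end) in enumerate(ranges):
        if start <= hash_key <= end:
            return index
    raise ValueError("hash key outside all shard ranges")

ranges = even_shard_ranges(4)
# The same partition key always maps to the same shard, which preserves per-key ordering.
first = shard_for_key("sensor-1", ranges)
again = shard_for_key("sensor-1", ranges)
```

The design consequence: choose partition keys with many distinct values (such as device IDs) so traffic spreads across shards instead of concentrating on one "hot" shard.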

With these tools and the right storage foundation, you’ve laid the groundwork for a thriving data analytics ecosystem. In the next section, we’ll delve into the art of building and managing data pipelines, the vital arteries that transport your data to the analysis engine.

Building and Managing Data Pipelines: From Raw Data to Refined Insights

Imagine your data as a rushing river, flowing from various sources like sensors, databases, and applications. To unlock its power, you need a well-designed network of canals and aqueducts – your data pipelines. These pipelines efficiently transport your raw data, cleanse it, transform it, and deliver it to the thirsty fields of your analytics tools. Building and managing data pipelines efficiently is crucial for extracting timely and accurate insights from your data.

Enter the AWS arsenal of pipeline-building tools:

- AWS Glue: The ETL/ELT Architect’s Dream:

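Think of ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) as different ways to prepare your data for analysis: ETL transforms data before loading it into the target, while ELT loads raw data first and transforms it inside the target. AWS Glue, a serverless data integration service, simplifies both approaches. Its visual editor, AWS Glue Studio, lets you define data sources, transformations (like filtering, joining, and formatting), and target destinations with drag-and-drop, and you can also write jobs as code in Python or Scala on Apache Spark. The Glue Data Catalog and crawlers keep track of your table schemas, and Glue integrates with other AWS services such as S3, Redshift, and Athena, making it a strong hub for building and orchestrating data pipelines.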

Benefits of AWS Glue:

- Simplified pipeline creation: The Glue Studio visual editor makes pipelines approachable for less technical users, while engineers can work directly in code.

- Pre-built connectors: Connect to various data sources with pre-built connectors for popular databases, cloud storage, and applications.

- Scalability and elasticity: Glue is serverless, and its Spark jobs can automatically add or remove workers as data volumes change, making it ideal for dynamic workloads.
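The ETL/ELT distinction is about where the transformation runs, which a tiny example makes visible. Glue jobs typically run on Apache Spark; this sketch only contrasts the two orderings with the standard library, using SQLite as a stand-in target. The customer rows and table names are hypothetical.

```python
import sqlite3

# Hypothetical rows extracted from an operational system.
source_rows = [("  Alice ", "alice@EXAMPLE.com"), ("Bob", "BOB@example.com")]

def run_etl(conn):
    """ETL: transform in the pipeline (Python here), then load only clean rows."""
    conn.execute("CREATE TABLE etl_customers (name TEXT, email TEXT)")
    cleaned = [(name.strip(), email.lower()) for name, email in source_rows]
    conn.executemany("INSERT INTO etl_customers VALUES (?, ?)", cleaned)

def run_elt(conn):
    """ELT: load raw rows first, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE raw_customers (name TEXT, email TEXT)")
    conn.executemany("INSERT INTO raw_customers VALUES (?, ?)", source_rows)
    conn.execute(
        "CREATE TABLE elt_customers AS "
        "SELECT TRIM(name) AS name, LOWER(email) AS email FROM raw_customers"
    )

conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_result = conn.execute("SELECT * FROM etl_customers ORDER BY name").fetchall()
elt_result = conn.execute("SELECT * FROM elt_customers ORDER BY name").fetchall()
```

Both paths end with the same clean table; ELT additionally keeps `raw_customers`, so you can rerun or change the transformation later without extracting again.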

- AWS Data Pipeline: The Orchestrator for Complex Flows:

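For intricate data journeys with multiple stages and dependencies, AWS Data Pipeline is your maestro. This service lets you define pipelines as activities with schedules and dependencies, either in the console’s visual architect or as JSON pipeline definitions. You can schedule tasks, manage dependencies, retry failed steps, and monitor pipeline execution. Data Pipeline works with AWS services such as S3, Redshift, DynamoDB, RDS, and EMR, making it a powerful tool for orchestrating large-scale data processing workflows. Note that AWS has placed Data Pipeline in maintenance mode and no longer offers it to new customers; for new projects, consider AWS Step Functions, Amazon Managed Workflows for Apache Airflow (MWAA), or AWS Glue workflows.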

Benefits of AWS Data Pipeline:

- Visual workflow editor: Clearly define complex data pipelines with dependencies and scheduling.

- Scalability and reliability: Reliable execution even for large data volumes and complex workflows.

- Integration with other AWS services: Seamlessly connect your data pipelines with other AWS tools for a complete data analytics ecosystem.
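At the heart of any orchestrator is dependency resolution: a task runs only after everything it depends on has finished. The sketch below is a generic illustration using Python’s `graphlib` (3.9+), not Data Pipeline’s definition format. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join_orders_customers": {"clean_orders", "extract_customers"},
    "load_warehouse": {"join_orders_customers"},
    "refresh_dashboard": {"load_warehouse"},
}

def execution_waves(graph):
    """Group tasks into waves; tasks in one wave are independent and could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished, unlocking its dependents
    return waves

waves = execution_waves(pipeline)
```

A real orchestrator adds scheduling, retries, and failure handling on top, but the ordering logic is the same idea: both extracts run first, and the dashboard refresh waits for the warehouse load.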

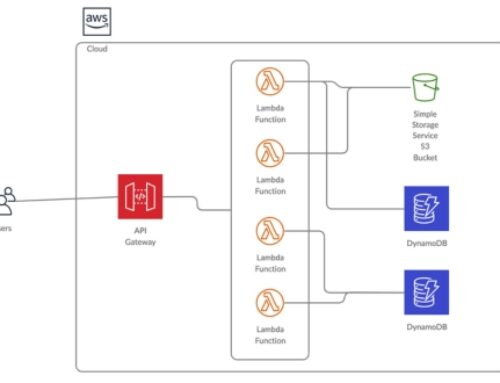

- Serverless Data Pipelines with AWS Lambda: The Cost-Effective Maestro:

Serverless computing has revolutionized the way we build applications, and data pipelines are no exception. AWS Lambda lets you run code without provisioning or managing servers, making it ideal for event-driven data processing. You can configure Lambda functions to run data transformations in response to specific events, like file uploads to S3, new records in a Kinesis stream, or API calls. Because each invocation can run for at most 15 minutes, Lambda suits small, short-lived processing steps; longer or heavier jobs belong in Glue or EMR. This approach reduces infrastructure costs and simplifies pipeline maintenance.

Benefits of Serverless Data Pipelines with AWS Lambda:

- Cost-effective: Pay only for the resources used, reducing infrastructure costs.

- Event-driven processing: Trigger data transformations based on specific events, improving efficiency.

- Scalability and elasticity: Lambda scales automatically to handle your data volume, eliminating server management overhead.
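Here is a minimal sketch of the event-driven pattern: a Python Lambda handler triggered by an S3 upload. It relies on the standard S3 event notification shape (`Records[].s3.bucket.name` and `Records[].s3.object.key`); the bucket name and key in the sample event are hypothetical, and the actual read-and-transform step is omitted so the sketch runs without AWS access.

```python
from urllib.parse import unquote_plus

def lambda_handler(event, context):
    """Collect the bucket and key of each object in an S3 event notification.

    A real function would go on to read and transform each object (for example
    with boto3); that step is omitted so this sketch runs locally.
    """
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append({"bucket": bucket, "key": key})
    return objects

# Trimmed-down sample event shaped like an S3 "ObjectCreated" notification.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-raw-bucket"},
                "object": {"key": "uploads/daily+report.csv"},
            },
        }
    ]
}
response = lambda_handler(sample_event, None)
```

Keeping the handler a plain function of `event` makes it easy to unit-test locally with sample events like this before wiring it to a real bucket.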

Building and managing data pipelines is an iterative process. You’ll start by defining your data sources, transformations, and target destinations. Then, you’ll choose the right tool: Glue for managed ETL with a visual editor, Data Pipeline for scheduled workflows with dependencies, or Lambda for lightweight, event-driven processing. Once your pipeline is running, you’ll need to monitor its performance and troubleshoot any issues.

Here are some additional tips for building and managing data pipelines:

- Define clear data quality standards: Ensure your data is accurate and consistent before it reaches your analytics tools.

- Version control your pipelines: Track changes and revert to previous versions if needed.

- Monitor and automate pipeline execution: Use Amazon CloudWatch metrics, logs, and alarms to track pipeline performance, and automate responses such as retrying or restarting failed jobs.

- Secure your data pipelines: Implement access controls and encryption to protect your sensitive data.
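Data quality standards work best when they are written down as executable rules. AWS also offers managed options such as AWS Glue Data Quality; the plain-Python sketch below just shows the underlying pattern of checking each record and routing failures to a reject list along with the reasons. The rules and field names are hypothetical.

```python
from datetime import datetime

# Hypothetical quality rules: each returns an error message, or None if the record passes.
def check_required(record):
    missing = [f for f in ("order_id", "amount", "order_date") if record.get(f) in (None, "")]
    return f"missing fields: {', '.join(missing)}" if missing else None

def check_amount(record):
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        return "amount must be non-negative"
    return None

def check_date(record):
    value = record.get("order_date")
    if value:
        try:
            datetime.strptime(value, "%Y-%m-%d")
        except ValueError:
            return f"bad date: {value}"
    return None

RULES = (check_required, check_amount, check_date)

def validate(records):
    """Split records into (valid, rejected); each rejected entry carries its errors."""
    valid, rejected = [], []
    for record in records:
        errors = [msg for msg in (rule(record) for rule in RULES) if msg]
        if errors:
            rejected.append({"record": record, "errors": errors})
        else:
            valid.append(record)
    return valid, rejected

valid, rejected = validate([
    {"order_id": 1, "amount": 10.0, "order_date": "2024-03-01"},
    {"order_id": 2, "amount": -5, "order_date": "2024-03-01"},
    {"order_id": 3, "amount": 7.5, "order_date": "03/01/2024"},
])
```

Quarantining rejects (rather than silently dropping them) keeps bad data out of dashboards while leaving an audit trail you can fix and replay.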

By following these best practices and leveraging the powerful data pipeline tools offered by AWS, you can ensure that your data flows smoothly, transforming from raw inputs into actionable insights that drive your business forward.

Extracting Insights with Powerful Analytics Tools: Turning Data into Discovery

The data is streaming in and your pipelines have meticulously transformed it. Now comes the thrilling part: extracting the secrets hidden within! In the realm of AWS data analytics, you have a treasure trove of tools at your disposal, each designed to unlock different facets of your data’s potential. Let’s delve into three powerful options:

- Amazon QuickSight: The Visual Storyteller:

Imagine transforming your data into a captivating tapestry, where numbers dance and charts sing. Amazon QuickSight is your artistic partner, allowing you to build interactive dashboards and visualizations that bring your data to life. Drag-and-drop functionality empowers both analysts and business users to explore data, identify trends, and share insights with stunning visuals.

QuickSight’s Magic:

- Interactive dashboards: Create dynamic dashboards with customizable charts, graphs, and maps for intuitive data exploration.

- Collaboration and sharing: Share dashboards and reports with colleagues and stakeholders, fostering data-driven decision making across the organization.

- Seamless integration: Connect QuickSight to various data sources, including your Data Lake, Redshift, and other AWS services, for a unified data experience.

- Amazon Athena: The SQL Sorcerer:

For those who speak the language of data, Athena is the magical wand that lets you cast spells on your data lake. This serverless service lets you run familiar SQL queries directly on data stored in Amazon S3, without first loading it into a database or data warehouse; you only register a table definition (typically in the AWS Glue Data Catalog) that tells Athena how to read the files. Imagine the freedom of exploring petabytes of data with familiar SQL commands: a data analyst’s dream come true.

Athena’s Enchantments:

- Direct data lake querying: Analyze your data where it lives, without the need for data movement or transformation.

- Standard SQL compatibility: Use familiar SQL syntax to query your data, making it accessible to analysts and data scientists alike.

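- Cost-effective and scalable: Pay per query based on the amount of data scanned, making Athena a cost-effective option for ad-hoc analysis; compressing, partitioning, and converting data to columnar formats such as Parquet reduces the data scanned and therefore the cost.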
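Athena bills by data scanned, rounded up to the nearest megabyte with a 10 MB minimum per query, so a quick estimate shows why partitioning and columnar formats matter. This is a minimal sketch under stated assumptions: the price per TB is an input you must look up for your Region (5.00 below is only a placeholder), binary units (1 TB = 1024 GB) are assumed, and the scan sizes are hypothetical.

```python
import math

BYTES_PER_MB = 1024 ** 2
MB_PER_TB = 1024 ** 2  # assumption: binary units throughout
MIN_BILLED_MB = 10     # Athena bills at least 10 MB per query

def estimate_query_cost(bytes_scanned, price_per_tb):
    """Estimate one query's scan charge: round up to whole MB, 10 MB minimum."""
    billed_mb = max(MIN_BILLED_MB, math.ceil(bytes_scanned / BYTES_PER_MB))
    return billed_mb / MB_PER_TB * price_per_tb

PRICE = 5.00  # placeholder price per TB; check current regional pricing
full_scan = estimate_query_cost(200 * 1024 ** 3, PRICE)   # query reads 200 GB of raw CSV
pruned_scan = estimate_query_cost(4 * 1024 ** 3, PRICE)   # same query reading one Parquet partition
```

Under these assumptions the full scan costs about 0.98 in the chosen currency and the pruned scan about 0.02, a 50x difference that comes entirely from reading less data.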

- Amazon SageMaker: The Machine Learning Alchemist:

Ready to unlock the secrets hidden within the depths of your data? SageMaker is your alchemical cauldron, where you can brew potent machine learning models that extract hidden patterns and predict future outcomes. Build, train, and deploy machine learning models in one managed service; built-in algorithms and no-code tools such as SageMaker Canvas lower the barrier even if you’re not a data science expert. SageMaker empowers you to:

- Predict customer churn: Identify customers at risk of leaving and take proactive steps to retain them.

- Optimize marketing campaigns: Target your marketing efforts more effectively and increase ROI.

- Detect fraud: Protect your business from fraudulent transactions with real-time anomaly detection.

SageMaker’s Concoctions:

- Pre-built algorithms and notebooks: Get started quickly with pre-built machine learning algorithms and notebooks, even without extensive machine learning expertise.

- Scalable and flexible: Train and deploy models on a variety of compute resources, from small instances to large clusters.

- Continuous integration and continuous delivery (CI/CD): Automate your machine learning workflow for efficient model development and deployment.
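To show the idea behind anomaly-based fraud detection, here is a deliberately simple stand-in: a z-score check that learns "normal" from past transactions and flags outliers. This is not SageMaker’s API, and real models (for example SageMaker’s built-in Random Cut Forest algorithm) are far more capable. The transaction history and the threshold of 3 standard deviations are hypothetical.

```python
from statistics import mean, stdev

def flag_anomalies(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    flagged = []
    for amount in new_amounts:
        z = (amount - mu) / sigma if sigma else 0.0
        if abs(z) > threshold:
            flagged.append((amount, round(z, 1)))
    return flagged

# Hypothetical card history: typical purchases cluster around $40 to $60.
history = [42.0, 55.0, 38.5, 61.0, 47.25, 52.0, 44.0, 58.75, 49.5, 51.0]
alerts = flag_anomalies(history, [48.0, 950.0, 12.0])
flagged_amounts = [amount for amount, _ in alerts]
```

A single global statistic like this misses context (merchant, location, time of day), which is exactly what a trained model adds.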

Choosing the Right Tool:

The choice between QuickSight, Athena, and SageMaker depends on your specific needs. For interactive data exploration and visualization, QuickSight is ideal. For ad-hoc analysis of raw data, Athena is your go-to tool. And for advanced analytics and prediction using machine learning, SageMaker is the magic ingredient.

These tools are not mutually exclusive. You can seamlessly combine them to create a powerful data analytics workflow. Use QuickSight to visualize the results of your Athena queries. Leverage the insights gained from QuickSight and Athena to build and refine your machine learning models in SageMaker. The possibilities are endless.

Real-Time Analytics: Riding the Data Wave, Decisions Made in the Now

In today’s fast-paced world, data isn’t just a static picture; it’s a dynamic river, flowing with insights that can make or break your business decisions. Enter the realm of real-time analytics, where data is analyzed and acted upon as it happens, giving you the agility to react to the now and seize the moment. AWS offers a potent cocktail of tools to quench your thirst for real-time insights:

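- Amazon Data Firehose (formerly Kinesis Data Firehose): The Real-Time Data Fire Brigade:

Think of Firehose as the emergency response team for your data. It captures streaming data from sources like applications, sensors, social media feeds, and IoT devices (directly or via Kinesis Data Streams) and delivers it to destinations such as Amazon S3, Amazon Redshift, Amazon OpenSearch Service, Splunk, and HTTP endpoints, optionally transforming records with a Lambda function along the way. There’s no ingestion code to write or servers to manage; Firehose buffers records briefly, by size or time, before each delivery, so your data arrives in near real time, ready for analysis.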

Kinesis Firehose’s Firepower:

- Near-real-time capture and delivery: Data reaches its destination within seconds to minutes, depending on your buffer settings, instead of waiting for a nightly batch job.

- Scalable and elastic: Handles data spikes with ease, ensuring you never miss a beat.

- Cost-effective: Pay only for the data you send, making it a budget-friendly solution for real-time analytics.
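When Firehose invokes a Lambda function for data transformation, it passes a batch of base64-encoded records, each with a `recordId`. The function must return every `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed` plus the (re-encoded) `data`. The sketch below follows that contract; the sensor payloads, the heartbeat-dropping rule, and the added Fahrenheit field are hypothetical.

```python
import base64
import json

def lambda_handler(event, context):
    """Firehose transformation: enrich JSON sensor records and drop heartbeats."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
        except ValueError:
            # Malformed input: report the failure so Firehose can handle it.
            output.append({"recordId": record["recordId"], "result": "ProcessingFailed", "data": record["data"]})
            continue
        if payload.get("type") == "heartbeat":
            output.append({"recordId": record["recordId"], "result": "Dropped", "data": record["data"]})
            continue
        if "temperature_c" in payload:
            payload["temperature_f"] = payload["temperature_c"] * 9 / 5 + 32
        # Append a newline so records stay line-delimited once concatenated at the destination.
        encoded = base64.b64encode((json.dumps(payload) + "\n").encode("utf-8")).decode("ascii")
        output.append({"recordId": record["recordId"], "result": "Ok", "data": encoded})
    return {"records": output}

def _encode(obj):
    return base64.b64encode(json.dumps(obj).encode("utf-8")).decode("ascii")

sample_event = {
    "records": [
        {"recordId": "1", "data": _encode({"sensor": "s1", "temperature_c": 20.0})},
        {"recordId": "2", "data": _encode({"type": "heartbeat"})},
        {"recordId": "3", "data": base64.b64encode(b"not json").decode("ascii")},
    ]
}
result = lambda_handler(sample_event, None)
```

Returning `Dropped` for noise like heartbeats keeps it out of storage, while `ProcessingFailed` lets you inspect bad records later instead of losing them.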

- Amazon CloudWatch Logs: Your Data Detective, Always on the Case:

While Kinesis Firehose delivers the data, CloudWatch Logs is your vigilant detective, watching the systems that process your data in near real time. It collects, stores, and lets you search logs from your applications, servers, and AWS services (Lambda, for example, writes its logs there automatically), and CloudWatch Logs Insights lets you query those logs interactively, giving you a comprehensive view of your system’s health and performance.

CloudWatch Logs’ Sherlockian Skills:

- Real-time log monitoring and analysis: Identify issues and opportunities as they happen, allowing for quick response and proactive measures.

- Customizable dashboards and alerts: Turn log patterns into CloudWatch metrics with metric filters, chart them on CloudWatch dashboards, and set alarms that notify you of specific events or anomalies.

- Integration with other AWS services: Seamlessly integrate CloudWatch Logs with other AWS services for a holistic view of your data and infrastructure.
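A metric filter plus an alarm boils down to two steps: count log lines that match a pattern per time period, then compare that count with a threshold. The plain-Python sketch below models those two steps locally; it is not the CloudWatch API, and the log format, sample lines, and threshold are hypothetical.

```python
import re
from collections import Counter

# Hypothetical application log lines: "<ISO timestamp> <LEVEL> <message>".
LOG_LINES = [
    "2024-05-01T12:00:05Z INFO pipeline started",
    "2024-05-01T12:00:41Z ERROR failed to parse record 17",
    "2024-05-01T12:01:02Z ERROR failed to parse record 18",
    "2024-05-01T12:01:30Z ERROR upstream timeout",
    "2024-05-01T12:01:55Z ERROR upstream timeout",
    "2024-05-01T12:02:10Z INFO batch committed",
]
LINE_RE = re.compile(r"^(\S+) (\w+) (.*)$")

def errors_per_minute(lines):
    """Count ERROR lines per minute, like a metric filter feeding a metric."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match and match.group(2) == "ERROR":
            minute = match.group(1)[:16]  # keep "YYYY-MM-DDTHH:MM"
            counts[minute] += 1
    return counts

def breaching_minutes(counts, threshold):
    """Minutes whose error count reaches the threshold, like an alarm condition."""
    return sorted(minute for minute, count in counts.items() if count >= threshold)

counts = errors_per_minute(LOG_LINES)
alarms = breaching_minutes(counts, threshold=3)
```

In CloudWatch itself, the filter pattern and alarm threshold are configuration rather than code, but the reasoning about what to count and when to alert is the same.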

- Amazon DynamoDB: The NoSQL Dynamo for High-Performance Applications:

When your real-time needs demand lightning speed, DynamoDB is the answer. This NoSQL database is built for performance and scalability, offering blazing-fast read and write speeds for mission-critical applications. Imagine accessing and updating your data with single-digit millisecond latency, enabling real-time decision making and responsiveness.

DynamoDB’s Speed and Power:

- High-performance NoSQL database: Handles massive data volumes with incredible speed and scalability.

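- Built-in replication: Each table is replicated across multiple Availability Zones within a Region, and global tables replicate data across Regions for low-latency local access and high availability.

- Flexible schema: Only the key attributes are fixed, and other attributes can vary from item to item, so your data model can evolve; performance depends on designing keys around your access patterns.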
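DynamoDB items are addressed by a partition key and an optional sort key. A Query reads a single partition and can narrow the sort key with conditions such as `begins_with`, which is why key design follows access patterns. The in-memory class below models that idea only; it is not the DynamoDB API, and the `CUSTOMER#`/`ORDER#` key naming is a hypothetical convention.

```python
from bisect import insort
from collections import defaultdict

class ToyKeyValueTable:
    """In-memory model of a partition-key + sort-key table (not the DynamoDB API).

    Items sharing a partition key are kept ordered by sort key, which makes
    range and prefix queries within one partition cheap.
    """

    def __init__(self):
        self._partitions = defaultdict(list)  # pk -> sorted list of (sk, item)

    def put_item(self, pk, sk, item):
        partition = self._partitions[pk]
        # Overwrite any existing item with the same full key (upsert semantics).
        partition[:] = [(s, i) for s, i in partition if s != sk]
        insort(partition, (sk, item))

    def query(self, pk, sk_prefix=""):
        """Items in one partition whose sort key starts with sk_prefix, in sort-key order."""
        return [item for sk, item in self._partitions.get(pk, []) if sk.startswith(sk_prefix)]

table = ToyKeyValueTable()
table.put_item("CUSTOMER#42", "ORDER#2024-03-02", {"total": 30})
table.put_item("CUSTOMER#42", "ORDER#2024-01-15", {"total": 12})
table.put_item("CUSTOMER#42", "PROFILE", {"name": "Ada"})
table.put_item("CUSTOMER#7", "ORDER#2024-02-01", {"total": 99})
orders = table.query("CUSTOMER#42", "ORDER#")
```

Because dates are embedded in the sort key, one call returns a customer’s orders already in chronological order, with no scan of other customers’ data.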

Real-time analytics is not just a technology; it’s a mindset. By embracing tools like Kinesis Firehose, CloudWatch Logs, and DynamoDB, you can transform your data into a real-time river of insights, propelling your business forward with the power of immediate decision-making.

Security and Governance: Building a Fortress for Your Data Assets

In the age of data, trust and security are paramount. Just as you wouldn’t leave your castle gates wide open, you must carefully protect your data assets. AWS offers a robust arsenal of tools to build a fortress for your data, ensuring its security and compliance with relevant regulations.

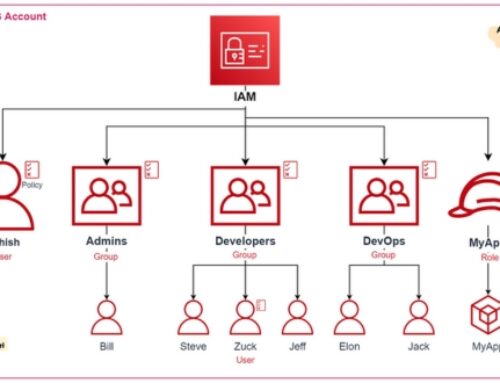

- AWS Identity and Access Management (IAM): Your Data Gatekeeper:

Imagine IAM as the vigilant guard at your data fortress gate. It defines who can access your data resources and what they can do with them. With granular control over permissions, you can grant different levels of access to different users, groups, and roles (including roles assumed by applications and services), ensuring only authorized identities can interact with your data.

IAM’s Vigilance:

- Fine-grained access control: Assign specific permissions for different actions on specific data resources.

- Multi-factor authentication: Add an extra layer of security with multi-factor authentication to verify users’ identities.

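- Auditing: Credential reports and last-accessed information show who holds which permissions and whether they use them, while CloudTrail records the actual API activity for accountability and compliance.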
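Least privilege is easiest to reason about with a concrete policy. Below, a policy document in IAM’s JSON structure (`Version`, `Statement`, `Effect`, `Action`, `Resource`) is written as a Python dict, followed by a simplified local evaluator of IAM’s core rule: an explicit Deny always wins, otherwise an explicit Allow is required. AWS performs the real evaluation; this sketch ignores conditions, `NotAction`/`NotResource`, principals, and other policy types, and the bucket name is hypothetical.

```python
from fnmatch import fnmatchcase

# Least-privilege policy for analysts on a hypothetical bucket.
ANALYST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:ListBucket",
         "Resource": "arn:aws:s3:::example-analytics-bucket"},
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-analytics-bucket/curated/*"},
        {"Effect": "Deny", "Action": "s3:*",
         "Resource": "arn:aws:s3:::example-analytics-bucket/curated/pii/*"},
    ],
}

def _as_list(value):
    return value if isinstance(value, list) else [value]

def is_allowed(policy, action, resource):
    """Simplified evaluation: explicit Deny wins; otherwise an Allow must match."""
    allowed = False
    for stmt in policy["Statement"]:
        # Action names match case-insensitively; resource ARNs are matched as written.
        action_match = any(fnmatchcase(action.lower(), a.lower()) for a in _as_list(stmt["Action"]))
        resource_match = any(fnmatchcase(resource, r) for r in _as_list(stmt["Resource"]))
        if action_match and resource_match:
            if stmt["Effect"] == "Deny":
                return False
            allowed = True
    return allowed  # default deny when nothing allowed the request

SALES_FILE = "arn:aws:s3:::example-analytics-bucket/curated/sales/2024.parquet"
PII_FILE = "arn:aws:s3:::example-analytics-bucket/curated/pii/customers.csv"
```

The explicit Deny on the `pii/` prefix carves an exception out of the broader `curated/*` Allow, a common way to protect sensitive subsets without rewriting every Allow.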

- AWS CloudTrail: Your Data’s Watchful Eye:

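Think of CloudTrail as the ever-vigilant eyes watching over your data fortress. It records API calls made in your AWS account: management events (such as creating a bucket or changing a policy) are captured by default, and data events (such as reading individual S3 objects) can be enabled when you need them. Each record shows who made the call, what was called, when, and from which IP address. This audit trail is crucial for: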

- Detecting suspicious activity: Identify potential security threats and unauthorized access attempts.

- Meeting compliance requirements: Demonstrate adherence to data privacy regulations and internal policies.

- Troubleshooting and debugging: Investigate issues and identify root causes of problems.
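CloudTrail log files are JSON documents with a `Records` array. Each record carries fields such as `eventTime`, `eventSource`, `eventName`, `sourceIPAddress`, `userIdentity`, and, for failed calls, `errorCode`. The sketch below scans a trimmed log for repeated authorization failures per principal, one simple signal of suspicious activity. The sample records are hypothetical (using AWS’s documentation account ID and IP ranges), and the set of "denied" error codes is an assumption that varies by service.

```python
import json
from collections import Counter

# Trimmed, hypothetical CloudTrail log content; real records carry many more fields.
LOG_FILE = json.dumps({
    "Records": [
        {"eventTime": "2024-05-01T09:00:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "GetObject", "sourceIPAddress": "203.0.113.10",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/analyst"}},
        {"eventTime": "2024-05-01T09:05:00Z", "eventSource": "kms.amazonaws.com",
         "eventName": "Decrypt", "sourceIPAddress": "198.51.100.7",
         "errorCode": "AccessDeniedException",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/contractor"}},
        {"eventTime": "2024-05-01T09:06:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "GetObject", "sourceIPAddress": "198.51.100.7",
         "errorCode": "AccessDenied",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/contractor"}},
        {"eventTime": "2024-05-01T09:10:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "GetObject", "sourceIPAddress": "203.0.113.10",
         "errorCode": "AccessDenied",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/analyst"}},
    ]
})

# Assumption: common authorization-failure codes; exact values differ between services.
DENIED_CODES = {"AccessDenied", "AccessDeniedException", "UnauthorizedOperation"}

def denied_calls_by_principal(log_text):
    """Count failed-authorization API calls per IAM principal ARN."""
    counts = Counter()
    for record in json.loads(log_text)["Records"]:
        if record.get("errorCode") in DENIED_CODES:
            arn = record.get("userIdentity", {}).get("arn", "unknown")
            counts[arn] += 1
    return counts

# Flag principals with repeated denials (threshold of 2 chosen for illustration).
suspicious = {arn: n for arn, n in denied_calls_by_principal(LOG_FILE).items() if n >= 2}
```

A single denial is often a misconfiguration; repeated denials across services from the same principal are worth a closer look.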

- AWS Key Management Service (KMS): The Keeper of Encryption Keys:

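Just like a secure castle has hidden vaults, KMS safeguards your data with encryption. It centrally creates and manages your encryption keys, and key policies together with IAM permissions control which users and applications can use them. Services such as S3, Redshift, and DynamoDB integrate with KMS to encrypt data at rest, typically through envelope encryption: a data key encrypts your data, and a KMS key encrypts that data key. Combined with TLS for data in transit, this adds a strong layer of defense against unauthorized access and breaches.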

KMS’s Encrypted Armor:

- Centralized key management: Securely create, manage, and rotate encryption keys for integrated AWS services and your own applications.

- Data encryption at rest: Protect your data where it’s stored; pair KMS with TLS to protect data moving between systems.

- Compliance with security standards: KMS protects keys in FIPS 140-validated hardware security modules and logs key usage to CloudTrail, helping you meet stringent data security regulations and industry best practices.

By implementing these security and governance practices, you can build a robust data fortress, earning the trust of your customers and stakeholders, and ensuring your data is used responsibly and ethically.

Getting Started with AWS Data Analytics: Unleashing the Power within Your Reach

Ready to unlock the transformative power of AWS data analytics? The journey begins with a single step, and AWS provides the launchpad you need to take that leap. Here are three key ways to kickstart your data-driven adventure:

- Explore and Experiment with the AWS Free Tier:

Dive headfirst into the world of AWS data analytics with the Free Tier. Free Tier offers and trial terms vary by service and change over time (some services offer time-limited trials rather than ongoing free usage), so check the current terms for services such as Amazon S3, Redshift, Athena, and QuickSight. Used wisely, they let you experiment and build basic data pipelines and analytics dashboards without breaking the bank. It’s the perfect playground to learn the ropes, test your ideas, and discover the potential that awaits.

- Leverage Pre-Built Solutions for Common Use Cases:

Don’t reinvent the wheel! AWS reference architectures and the AWS Solutions Library offer pre-built blueprints for common data analytics scenarios, like customer churn analysis, marketing campaign optimization, and operational efficiency improvement. These blueprints provide a head start, giving you a framework to customize and build upon, saving you valuable time and resources.

- Upskill Your Team with Comprehensive Learning Resources:

Knowledge is the most valuable asset you can invest in. AWS offers a wealth of training and certification programs designed to equip you and your team with the necessary skills to master AWS data analytics. From beginner-friendly courses to advanced certifications, there’s a learning path for every level, empowering your team to become data-driven heroes.

Remember, the journey to data-driven success is a continuous one. As you explore and learn, you’ll discover new possibilities, refine your approach, and unlock even greater value from your data. Embrace the learning curve, experiment, and don’t hesitate to seek help from the vast AWS community and support resources.

With these steps and the power of AWS data analytics at your fingertips, you’re on your way to transforming your business, one insightful decision at a time.

Conclusion: Transforming Your Business with AWS Data Analytics

In conclusion, adopting AWS Data Analytics can transform the way businesses derive insights from their data. The journey from raw data to informed decisions is streamlined through the power of cloud-based analytics on the AWS platform.

From Data to Decisions: The Power of Cloud-Based Insights:

AWS Data Analytics empowers organizations to extract meaningful insights from vast datasets. By leveraging scalable and cost-effective cloud resources, businesses can perform advanced analytics, uncover patterns, and gain a deeper understanding of their data. The agility and flexibility offered by AWS analytics services facilitate a quicker transition from data collection to actionable insights.

Embracing the Future of Data-Driven Success:

As businesses navigate the digital era, the ability to harness the full potential of their data becomes a key driver of success. AWS Data Analytics positions organizations to embrace this future by providing cutting-edge tools, services, and integrations. From real-time analytics to machine learning capabilities, AWS enables businesses to stay at the forefront of data-driven innovation, ensuring they are well-equipped for the challenges and opportunities that lie ahead. By embracing AWS Data Analytics, businesses can not only gain a competitive edge today but also future-proof their operations in an increasingly data-centric landscape.